Visualization software stands the test of time

Sept. 13, 2021 -

In the decades since LLNL’s founding, the technology used in pursuit of the Laboratory’s national security mission has changed. For example, studying scientific phenomena and predicting their behaviors require increasingly robust, high-resolution simulations. These crucial tasks compound the demands on high-performance computing hardware and software, which must continually be...

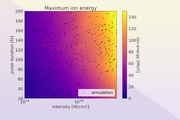

Laser-driven ion acceleration with deep learning

May 25, 2021 -

While advances in machine learning over the past decade have made significant impacts in applications such as image classification, natural language processing and pattern recognition, scientific endeavors have only just begun to leverage this technology. This is most notable in processing large quantities of data from experiments. Research conducted at LLNL is the first to apply neural...

Conference papers highlight importance of data security to machine learning

May 12, 2021 -

The 2021 Conference on Computer Vision and Pattern Recognition, the premier conference of its kind, will feature two papers, co-authored by an LLNL researcher, that aim to improve the understanding of robust machine learning models. Both papers include contributions from LLNL computer scientist Bhavya Kailkhura and examine the importance of data in building models, part of a Lab effort to...

A winning strategy for deep neural networks

April 29, 2021 -

LLNL continues to make an impact at top machine learning conferences, even as much of the research staff works remotely during the COVID-19 pandemic. Postdoctoral researcher James Diffenderfer and computer scientist Bhavya Kailkhura, both from LLNL’s Center for Applied Scientific Computing, are co-authors on a paper—“Multi-Prize Lottery Ticket Hypothesis: Finding Accurate Binary Neural...

Winter hackathon highlights data science talks and tutorial

March 24, 2021 -

The Data Science Institute (DSI) sponsored LLNL’s 27th hackathon on February 11–12. Held four times a year, these seasonal events bring the computing community together for a 24-hour period where anything goes: Participants can focus on special projects, learn new programming languages, develop skills, dig into challenging tasks, and more. The winter hackathon was the DSI’s second such...

Novel deep learning framework for symbolic regression

March 18, 2021 -

LLNL computer scientists have developed a new framework and an accompanying visualization tool that leverages deep reinforcement learning for symbolic regression problems, outperforming baseline methods on benchmark problems. The paper was recently accepted as an oral presentation at the International Conference on Learning Representations (ICLR 2021), one of the top machine learning...

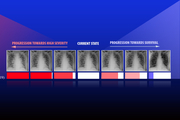

'Self-trained' deep learning to improve disease diagnosis

March 4, 2021 -

New work by computer scientists at LLNL and IBM Research on deep learning models to accurately diagnose diseases from X-ray images with less labeled data won the Best Paper award for Computer-Aided Diagnosis at the SPIE Medical Imaging Conference on February 19. The technique, which includes novel regularization and “self-training” strategies, addresses some well-known challenges in the...

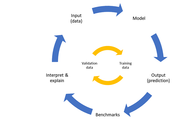

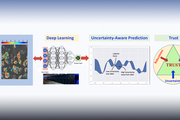

Lab researchers explore ‘learn-by-calibration’ approach to deep learning to accurately emulate scientific process

Feb. 10, 2021 -

An LLNL team has developed a “Learn-by-Calibrating” method for creating powerful scientific emulators that could be used as proxies for far more computationally intensive simulators. Researchers found the approach results in high-quality predictive models that are closer to real-world data and better calibrated than previous state-of-the-art methods. The LbC approach is based on interval...

CASC research in machine learning robustness debuts at AAAI conference

Feb. 10, 2021 -

LLNL’s Center for Applied Scientific Computing (CASC) has steadily grown its reputation in the artificial intelligence (AI)/machine learning (ML) community—a trend continued by three papers accepted at the 35th AAAI Conference on Artificial Intelligence, held virtually on February 2–9, 2021. Computer scientists Jayaraman Thiagarajan, Rushil Anirudh, Bhavya Kailkhura, and Peer-Timo Bremer led...

Lawrence Livermore computer scientist heads award-winning computer vision research

Jan. 8, 2021 -

The 2021 IEEE Winter Conference on Applications of Computer Vision (WACV 2021) on Wednesday announced that a paper co-authored by LLNL computer scientist Rushil Anirudh received the conference’s Best Paper Honorable Mention award based on its potential impact on the field. The paper, titled “Generative Patch Priors for Practical Compressive Image Recovery,” introduces a new kind of prior—a...

NeurIPS papers aim to improve understanding and robustness of machine learning algorithms

Dec. 7, 2020 -

The 34th Conference on Neural Information Processing Systems (NeurIPS) is featuring two papers advancing the reliability of deep learning for mission-critical applications at LLNL. The most prestigious machine learning conference in the world, NeurIPS began virtually on Dec. 6. The first paper describes a framework for understanding the effect of properties of training data on the...

LLNL papers accepted into prestigious conference

July 9, 2020 -

Two papers featuring LLNL scientists were accepted in the 2020 International Conference on Machine Learning (ICML), one of the world’s premier conferences of its kind. Read more at LLNL News.

DL-based surrogate models outperform simulators and could hasten scientific discoveries

June 17, 2020 -

Surrogate models supported by neural networks can perform as well as, and in some ways better than, computationally expensive simulators and could lead to new insights in complicated physics problems such as inertial confinement fusion (ICF), LLNL scientists reported. Read more at LLNL News.

Lab team studies calibrated AI and deep learning models to more reliably diagnose and treat disease

May 29, 2020 -

A team led by LLNL computer scientist Jay Thiagarajan has developed a new approach for improving the reliability of artificial intelligence and deep learning-based models used for critical applications, such as health care. Thiagarajan recently applied the method to study chest X-ray images of patients diagnosed with COVID-19, the disease caused by the novel SARS-CoV-2 coronavirus. Read more at LLNL...

AI identifies change in microstructure in aging materials

May 26, 2020 -

LLNL scientists have taken a step forward in the design of future materials with improved performance by analyzing their microstructure using AI. The work recently appeared online in the journal Computational Materials Science. Read more at LLNL News.

Interpretable AI in healthcare (PODCAST)

May 17, 2020 -

LLNL's Jay Thiagarajan joins the Data Skeptic podcast to discuss his recent paper "Calibrating Healthcare AI: Towards Reliable and Interpretable Deep Predictive Models." The episode runs 35:50. Listen at Data Skeptic.

The incorporation of machine learning into scientific simulations at LLNL (VIDEO)

May 5, 2020 -

In this video from the Stanford HPC Conference, Katie Lewis presents "The Incorporation of Machine Learning into Scientific Simulations at Lawrence Livermore National Laboratory." Read more and watch the video at insideHPC.

Deep learning may provide solution for efficient charging, driving of autonomous electric vehicles

Feb. 4, 2020 -

LLNL computer scientists and software engineers have developed a deep learning-based strategy to maximize electric vehicle (EV) ride-sharing services while reducing carbon emissions and the impact on the electrical grid, emphasizing autonomous EVs capable of offering 24-hour service. Read more at LLNL News.

Successful simulation and visualization coupling proves the power of Sierra

Oct. 22, 2019 -

As the first National Nuclear Security Administration (NNSA) production supercomputer backed by graphics processing unit (GPU)-accelerated architecture, Sierra required a fundamental shift in how scientists at Lawrence Livermore National Laboratory (LLNL) program their codes to take advantage of the GPUs.

The majority of Sierra’s computational power—95 percent of its 125...

LLNL Center for Applied Scientific Computing: accelerating scientific discovery (VIDEO)

July 12, 2019 -

The Center for Applied Scientific Computing (CASC) serves as LLNL’s window to the broader computer science, computational physics, applied mathematics, and data science research communities. Major thrust areas in CASC research include: (1) Increasing simulation fidelity by integrating multi-physics and multi-scale models, increasing resolution through advanced numerical methods and more...