Feb. 10, 2021

Lab researchers explore ‘learn-by-calibrating’ approach to deep learning to accurately emulate scientific processes

Jeremy Thomas/LLNL

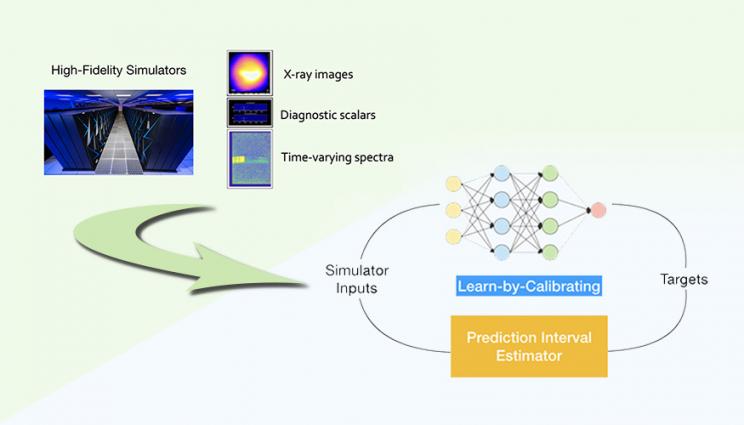

An LLNL team has developed a “Learn-by-Calibrating” (LbC) method for creating powerful scientific emulators that could serve as proxies for far more computationally intensive simulators. The researchers found that the approach produces high-quality predictive models that match real-world data more closely and are better calibrated than previous state-of-the-art methods. LbC takes interval calibration, a technique traditionally used to evaluate uncertainty estimators, and uses it as the training objective for deep neural networks. According to the team, this novel learning strategy lets LbC effectively recover the inherent noise in the data without requiring users to pick a loss function. Read more at LLNL News.
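To make “interval calibration” concrete: a model that outputs prediction intervals at a nominal confidence level p is well calibrated if roughly a fraction p of the observed values land inside those intervals. The Python sketch that follows is a generic version of that evaluation metric, not LLNL's LbC code. It assumes, hypothetically, that each prediction is Gaussian and described by a mean and a standard deviation, and the function names `empirical_coverage` and `interval_calibration_error` are illustrative only. LbC reportedly turns this kind of check into a training objective, which would require a differentiable formulation that is not shown here.

```python
from statistics import NormalDist


def empirical_coverage(y_true, lower, upper):
    """Fraction of observed targets that fall inside their predicted interval."""
    if not y_true:
        raise ValueError("y_true must be non-empty")
    hits = sum(1 for y, lo, hi in zip(y_true, lower, upper) if lo <= y <= hi)
    return hits / len(y_true)


def interval_calibration_error(y_true, mean, std, levels=(0.5, 0.8, 0.9, 0.95)):
    """Average |empirical coverage - nominal level| over several confidence levels.

    Hypothetical assumption: each prediction is Gaussian, so the central
    interval at level p is mean +/- z * std with z = Phi^-1((1 + p) / 2).
    A value of 0 means the intervals are perfectly calibrated at every level.
    """
    errors = []
    for p in levels:
        # Standard-normal quantile that bounds the central p-probability mass.
        z = NormalDist().inv_cdf((1 + p) / 2)
        lower = [m - z * s for m, s in zip(mean, std)]
        upper = [m + z * s for m, s in zip(mean, std)]
        errors.append(abs(empirical_coverage(y_true, lower, upper) - p))
    return sum(errors) / len(errors)
```

For example, `interval_calibration_error([0.0] * 4, [100.0] * 4, [1.0] * 4)` returns about 0.79, because none of the targets fall inside any interval, so every level misses its nominal coverage entirely.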