Successful simulation and visualization coupling proves the power of Sierra

Sierra is the first National Nuclear Security Administration (NNSA) production supercomputer built on a graphics processing unit (GPU)-accelerated architecture, and its arrival required a fundamental shift in how scientists at Lawrence Livermore National Laboratory (LLNL) program their codes to take advantage of the GPUs.

The majority of Sierra’s computational power, 95 percent of its 125 petaFLOPS (quadrillion floating-point operations per second), resides on its GPU accelerators; codes must therefore leverage the GPUs effectively to fully utilize the machine.

“Sierra was a paradigm shift from the systems we’ve run on in the past because of its heterogeneous compute and memory architecture,” says Cyrus Harrison, a computer scientist who supports several of Livermore’s visualization tools. “Adapting to the new architecture posed major challenges to applications developers and system software and tool providers and required innovation in both the simulation and visualization infrastructures.”

Harrison notes an additional consideration the code teams had to contend with on Sierra. “Computation far outpaces the system’s current ability to save data to the file system commonly used for postprocessing analysis,” he explains. “This limits the frequency that data can be written out to the file system. In order to visualize the data with high temporal fidelity, data must be processed while in memory, requiring the simulation and visualization routines to be coupled. This coupling is known as in-situ visualization.”
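The coupling Harrison describes can be illustrated with a minimal sketch. It is a toy example, not Ares or Ascent code: the function names (`run_coupled`, `min_max`), the eight-value field, and the smoothing step are all hypothetical stand-ins. The point it shows is that an analysis routine can run on the in-memory data at every time step, while full data is written out only occasionally.

```python
def run_coupled(num_steps, checkpoint_every, analyze):
    """Advance a toy simulation and analyze its state in memory every step.

    All names here are illustrative assumptions, not part of any LLNL code.
    """
    field = [float(i) for i in range(8)]  # toy in-memory simulation state
    frames = []       # in-situ results, one per time step
    checkpoints = []  # steps at which full data would go to the file system
    n = len(field)
    for step in range(num_steps):
        # Toy "physics": average each value with its right-hand neighbor.
        field = [0.5 * (field[i] + field[(i + 1) % n]) for i in range(n)]
        # In-situ analysis: operate on the data while it is still in memory.
        frames.append(analyze(step, field))
        # Expensive file-system output happens only every few steps.
        if step % checkpoint_every == 0:
            checkpoints.append(step)
    return frames, checkpoints


def min_max(step, field):
    """Hypothetical stand-in for rendering: reduce the field to a summary."""
    return (step, min(field), max(field))


frames, checkpoints = run_coupled(num_steps=100, checkpoint_every=25, analyze=min_max)
print(len(frames), len(checkpoints))
```

In this sketch the analysis sees every one of the 100 steps, whereas a post-processing tool reading only the checkpoints would see four of them.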

After several years of preparation, including the creation of new tools and the porting of very large, fundamental codes, LLNL demonstrated a successful simulation and visualization coupling in the fall of 2018, using 16,384 GPUs on 4,096 nodes of Sierra. LLNL researchers ran Ares, a GPU-enabled multiphysics code, coupled with Ascent, an in-situ visualization tool capable of utilizing the same resources as the simulation.

“This was the first large-scale demonstration of using some of this technology very successfully on GPUs,” says Harrison. “We’re excited about that, and we expect this is the path that we will continue on, using this software for exascale.”

Readying a 20-Year-Old Code for a New Architecture

Ares is a massively parallel, multiblock, structured mesh, multiphysics code. It supports an array of physics, including arbitrary Lagrangian–Eulerian (ALE) hydrodynamics, radiation, lasers, high-explosive models, magnetohydrodynamics (MHD), thermonuclear burn, particulate flow, dynamic mixing, plasma physics, material strength, and constitutive models. Ares is used to model various experiments, such as high-explosive characterizations, munitions development, inertial confinement fusion (ICF) and other laser-driven experiments, explosively driven pulsed power, Z-pinch, and hydrodynamic instability experiments.

The Ares source code is large—over half a million lines of code—and used routinely. According to Mike Collette, longtime code porter and developer on the Ares team, Ares is in daily use on NNSA’s supercomputers and must maintain its ability to meet mission needs. Consequently, the team has a requirement to preserve a single-source code base while making the code portable and able to run on old, new, and future systems.

Collette builds Ares regularly with a variety of compilers to ensure that non-portable code is not introduced. Any unavoidable platform-specific code, such as system calls, is hidden behind functions, macros, or header files. To leverage Sierra’s GPUs, Collette and the rest of the team, starting in 2014, transformed Ares loops with the Livermore-developed RAJA, a loop-based abstraction that provides architecture portability. RAJA is the foundation of Livermore’s strategy to make its codes platform agnostic. Developed in tandem with the upgrades to Ares, RAJA provides an abstraction layer that was key to incrementally porting Ares’s physics algorithms to GPUs. The team also added portable memory allocation abstractions so that memory accesses coalesce efficiently on the GPUs and restructured some loop kernels for better GPU efficiency. Some loops also had to be changed to remove data races so that the code remains correct when parallelized.
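The idea behind a loop abstraction of this kind can be sketched in a few lines. The example below is not RAJA (a C++ library) and does not reproduce its API; `forall`, `SeqPolicy`, `ThreadPolicy`, and `scale_add` are hypothetical names chosen for illustration. It shows a loop body written once and executed under different policies, with each iteration writing only to its own output slot so that no data race arises when iterations run concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


class SeqPolicy:
    """Hypothetical policy: run iterations one after another."""


class ThreadPolicy:
    """Hypothetical policy: run iterations on a pool of worker threads."""

    def __init__(self, workers=4):
        self.workers = workers


def forall(policy, index_range, body):
    """Apply body(i) to every index, in the manner the policy selects."""
    if isinstance(policy, ThreadPolicy):
        with ThreadPoolExecutor(max_workers=policy.workers) as pool:
            # list() waits for completion and surfaces any exception.
            list(pool.map(body, index_range))
    else:
        for i in index_range:
            body(i)


def scale_add(policy, a, x, y):
    """Compute a*x[i] + y[i]; the loop body does not know how it is run."""
    out = [0.0] * len(x)

    def body(i):
        # Each iteration writes only out[i], so iterations are independent.
        out[i] = a * x[i] + y[i]

    forall(policy, range(len(x)), body)
    return out


x = [1.0, 2.0, 3.0]
y = [10.0, 20.0, 30.0]
seq = scale_add(SeqPolicy(), 2.0, x, y)
par = scale_add(ThreadPolicy(workers=2), 2.0, x, y)
print(seq == par)
```

Because only the policy argument changes between the two calls, a code base written this way can be moved to a new execution back end one loop at a time, which mirrors the incremental approach described above.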

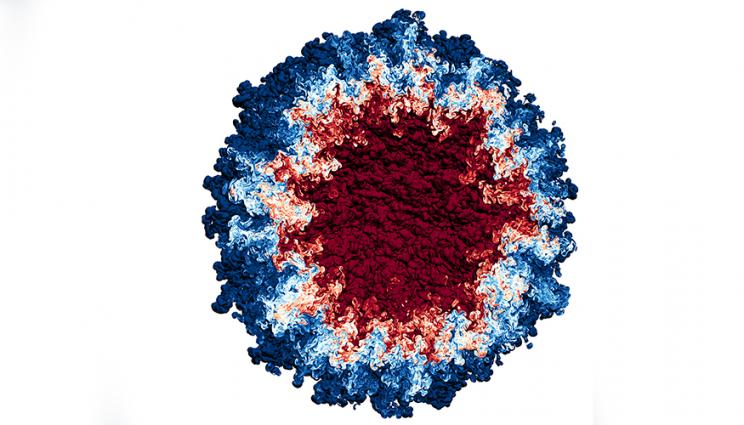

“We transformed Ares loops with RAJA one by one, in a prioritized manner,” says Collette. Ares has over 5,000 mesh loops, so the process was slow and methodical, but the work paid off. In late 2018, Collette ran a 98-billion-element Ares simulation of two-fluid mixing in a spherical geometry, an important hydrodynamics problem with applications in several LLNL missions. The timing and performance of this run were also significant: it helped demonstrate the capability of Sierra by contributing to a programmatic milestone.

In general, running on Sierra’s GPUs allows Livermore’s code teams to scale and turn around massive simulations in a power- and time-efficient way. For the Ares simulation, the physics calculations alone took 60 hours of compute time on Sierra. The team estimates the same problem would take 30 days of physics compute time on Sierra’s predecessor Advanced Technology System, Sequoia.
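As a rough check on these figures, and assuming the 30-day Sequoia estimate refers to continuous 24-hour days of compute time, the implied speedup in physics compute time is about twelvefold:

$$
\text{speedup} \approx \frac{30\ \text{days} \times 24\ \text{h/day}}{60\ \text{h}} = \frac{720\ \text{h}}{60\ \text{h}} = 12
$$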

“Not only has the work we’ve done so far in porting our codes helped unlock impressive performance on Sierra, it positions the codes well for future architectures,” says Collette.

Generating High-Fidelity Images in Memory While a Simulation Runs

Accompanying the initial Ares run on Sierra was LLNL’s new, in-situ visualization capability, Ascent. When linked directly into a code, this capability allows users to see results of high-fidelity simulations that would have been difficult, if not impossible, to visualize with standard post-processing visualization tools. During the Ares simulation, Ascent was used to generate images at high temporal fidelity and to selectively save out compressed portions of the data set for post-hoc analysis.

Ascent is a flyweight in-situ infrastructure and analysis library being developed within the ALPINE project, which is part of the Department of Energy’s Exascale Computing Project (ECP). An integral aspect of the move toward exascale computing, and toward systems 50 times more powerful than today’s, is the advancement of tools that allow results to be seen while a simulation runs, a process called in-situ visualization and analysis. The primary benefit of this process is that it circumvents input/output bottlenecks.

Many tools in the computing community allow for in-situ visualization and analysis, but Ascent is the first designed for pre-exascale supercomputers and beyond, explains Harrison.

“The aim of Ascent is to create a next-generation in-situ visualization structure that is built from the ground up to use the node-level parallelism that is available on systems like Sierra,” Harrison explains. “We can make more pictures in memory rather than wait for the file systems, which creates efficiencies during the run. It allows scientists to explore their results with high temporal fidelity, and it creates image databases that can be used for interactive exploration after the simulation is complete.”

Harrison adds that the infrastructure he and his team members are developing is available not only in Ascent but also in other in-situ visualization tools such as VisIt and ParaView, which will continue to be used for post-hoc visualization.

Ascent leverages the capabilities made possible by another ECP project, VTK-m (Visualization Toolkit-multi-cores), which exploits the node-level parallelism of GPUs and of computers with multi-core or many-core processors, so that operations can be executed directly on the same architectures on which the simulations are running. VTK-m provides performance-portable implementations of key visualization algorithms for new computer architectures. Harrison explains, “To run in situ, we need our visualization to be able to run on the same hardware as the simulation code. That’s where VTK-m comes in.”

Ascent will eventually be implemented in several LLNL codes to provide in-situ visualization capabilities for mission-critical problems, including those that support stockpile stewardship. Ascent is already integrated with Livermore’s MARBL code for high-energy-density physics, and it is being adopted by other national laboratories through ECP.

(The image above is a frame from the 2018 Ares + Ascent in-situ run on Livermore’s Sierra supercomputer. It shows turbulent fluid mixing in a spherical geometry—part of a simulation of an idealized inertial confinement fusion implosion. When combined with upgraded codes such as Ares and innovative tools such as Ascent, Sierra will make high-fidelity calculations like these routine instead of heroic and will yield insight into physical phenomena.)