Igniting scientific discovery with AI and supercomputing (VIDEO)

April 15, 2024 -

LLNL’s fusion ignition breakthrough, more than 60 years in the making, was enabled by a combination of traditional fusion target design methods, high-performance computing (HPC), and AI techniques. The success of ignition marks a significant milestone in fusion energy research, and was facilitated in part by the precision simulations and rapid experimental data analysis only possible through...

Welcome new DSI team members

April 2, 2024 -

When Data Science Institute (DSI) director Brian Giera and deputy director Cindy Gonzales began planning activities for fiscal year 2024 and beyond, they immediately realized that LLNL’s growth in data science and artificial intelligence (AI)/machine learning (ML) research requires corresponding growth in the DSI’s efforts. “Our field is booming,” Giera states. “The Lab has a stake in the...

Will it bend? Reinforcement learning optimizes metamaterials

Dec. 13, 2023 -

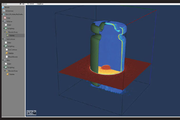

Lawrence Livermore staff scientist Xiaoxing Xia collaborated with the Technical University of Denmark to integrate machine learning (ML) and 3D printing techniques. The effort naturally follows Xia’s PhD work in materials science at the California Institute of Technology, where he investigated electrochemically reconfigurable structures. In a paper published in the Journal of Materials...

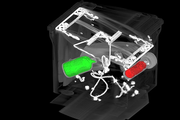

For better CT images, new deep learning tool helps fill in the blanks

Nov. 17, 2023 -

At a hospital, an airport, or even an assembly line, computed tomography (CT) allows us to investigate the otherwise inaccessible interiors of objects without laying a finger on them. To perform CT, x-rays first shine through an object, interacting with the different materials and structures inside. Then, the x-rays emerge on the other side, casting a projection of their interactions onto a...

Lab partners with new Space Force Lab

Nov. 14, 2023 -

LLNL subject matter experts have been selected by the U.S. Space Force to help stand up its newest Tools, Applications, and Processing (TAP) laboratory dedicated to advancing military space domain awareness (SDA). The Livermore team attended the October 26 kickoff in Colorado Springs of the SDA TAP lab’s Project Apollo technology accelerator, designed with an open framework to support and...

LLNL’s Kailkhura elevated to IEEE senior member

Nov. 8, 2023 -

IEEE, the world’s largest technical professional organization, has elevated LLNL research staff member Bhavya Kailkhura to the grade of senior member within the organization. IEEE has more than 427,000 members in more than 190 countries, including engineers, scientists and allied professionals in the electrical and computer sciences, engineering and related disciplines. Just 10% of IEEE’s...

Data Science Summer Institute hosts student interns from Japan

Oct. 13, 2023 -

The Data Science Summer Institute (DSSI) hosted summer student interns from Japan on-site for the first time, where the students worked with Lab mentors on real-world projects in AI-assisted bio-surveillance and automated 3D printing. From June to September, the three students—Raiki Yoshimura, Shinnosuke Sawano and Taisei Saida—lived in rental apartments near the Lab and worked at the Lab on...

LLNL, University of California partner for AI-driven additive manufacturing research

Sept. 27, 2023 -

Grace Gu, a faculty member in mechanical engineering at UC Berkeley, has been selected as the inaugural recipient of the LLNL Early Career UC Faculty Initiative. The initiative is a joint endeavor between LLNL’s Strategic Deterrence Principal Directorate and UC national laboratories at the University of California Office of the President, seeking to foster long-term academic partnerships and...

Explainable artificial intelligence can enhance scientific workflows

July 25, 2023 -

As ML and AI tools become more widespread, a team of researchers in LLNL’s Computing and Physical and Life Sciences directorates is trying to provide a reasonable starting place for scientists who want to apply ML/AI but don’t have the appropriate background. The team’s work grew out of a Laboratory Directed Research and Development project on feedstock materials optimization, which led to...

Machine learning reveals refreshing understanding of confined water

July 24, 2023 -

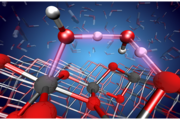

LLNL scientists combined large-scale molecular dynamics simulations with machine learning interatomic potentials derived from first-principles calculations to examine the hydrogen bonding of water confined in carbon nanotubes (CNTs). They found that the narrower the diameter of the CNT, the more the water structure is affected in a highly complex and nonlinear fashion. The research appears on...

High-performance computing, AI and cognitive simulation helped LLNL conquer fusion ignition

June 21, 2023 -

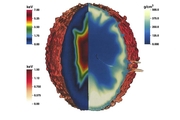

For hundreds of LLNL scientists on the design, experimental, and modeling and simulation teams behind inertial confinement fusion (ICF) experiments at the National Ignition Facility, the results of the now-famous Dec. 5, 2022, ignition shot didn’t come as a complete surprise. The “crystal ball” that gave them increased pre-shot confidence in a breakthrough involved a combination of detailed...

Visionary report unveils ambitious roadmap to harness the power of AI in scientific discovery

June 12, 2023 -

A new report, the product of a series of workshops held in 2022 under the guidance of the U.S. Department of Energy’s Office of Science and the National Nuclear Security Administration, lays out a comprehensive vision for the Office of Science and NNSA to expand their work in scientific use of AI by building on existing strengths in world-leading high performance computing systems and data...

Consulting service infuses Lab projects with data science expertise

June 5, 2023 -

A key advantage of LLNL’s culture of multidisciplinary teamwork is that domain scientists don’t need to be experts in everything. Physicists, chemists, biologists, materials engineers, climate scientists, computer scientists, and other researchers regularly work alongside specialists in other fields to tackle challenging problems. The rise of Big Data across the Lab has led to a demand for...

Data science meets fusion (VIDEO)

May 30, 2023 -

LLNL’s historic fusion ignition achievement on December 5, 2022, was the first experiment to ever achieve net energy gain from nuclear fusion. However, the experiment’s result was not actually that surprising. A team leveraging data science techniques developed and used a landmark system for teaching artificial intelligence (AI) to incorporate and better account for different variables and...

LLNL and SambaNova Systems announce additional AI hardware to support Lab’s cognitive simulation efforts

May 23, 2023 -

LLNL and SambaNova Systems have announced the addition of a spatial data flow accelerator into the Livermore Computing Center, part of an effort to upgrade the Lab’s CogSim program. LLNL will integrate the new hardware to further investigate CogSim approaches combining AI with high-performance computing—and how deep neural network hardware architectures can accelerate traditional physics...

Celebrating the DSI’s first five years

May 18, 2023 -

View the LLNL Flickr album Data Science Institute Turns Five.

Data science—a field combining technical disciplines such as computer science, statistics, mathematics, software development, domain science, and more—has become a crucial part of how LLNL carries out its mission. Since the DSI’s founding in 2018, the Lab has seen tremendous growth in its data science community and has invested...

Patent applies machine learning to industrial control systems

May 8, 2023 -

An industrial control system (ICS) is an automated network of devices that make up a complex industrial process. For example, a large-scale electrical grid may contain thousands of instruments, sensors, and controls that transfer and distribute power, along with computing systems that capture data transmitted across these devices. Monitoring the ICS network for new device connections, device...

Computing codes, simulations helped make ignition possible

April 6, 2023 -

Since the genesis of LLNL’s inertial confinement fusion (ICF) program, codes have played an essential role in simulating the complex physical processes that take place in an ICF target and the facets of each experiment that must be nearly perfect. Many of these processes are too complicated, expensive, or even impossible to predict through experiments alone. With only a few...

Fueling up hydrogen production

April 3, 2023 -

Through machine learning, an LLNL scientist has gained a better understanding of the materials used to produce hydrogen fuel. The interaction of water with TiO2 (titanium dioxide) surfaces is especially important in various scientific fields and applications, from photocatalysis for hydrogen production to photooxidation of organic pollutants to self-cleaning surfaces and biomedical devices. However...

From plasma to digital twins

March 13, 2023 -

LLNL's Nondestructive Evaluation (NDE) group has an array of techniques at its disposal for inspecting objects’ interiors without disturbing them: computed tomography, optical laser interferometry, and ultrasound, for example, can be used alone or in combination to gauge whether a component’s physical and material properties fall within allowed tolerances. In one project, the team of NDE...