Explainable artificial intelligence can enhance scientific workflows

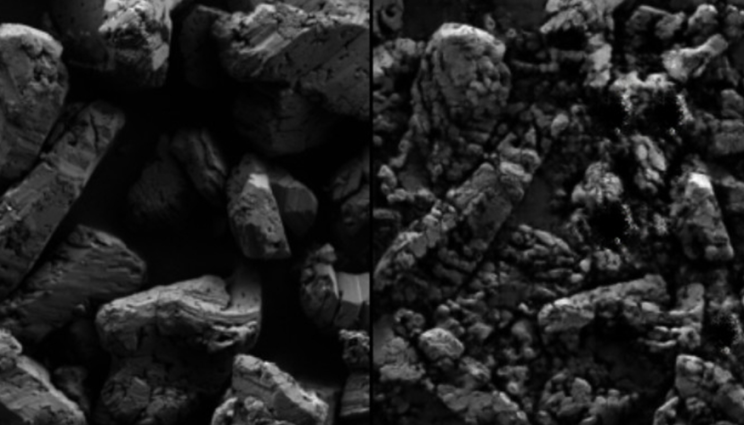

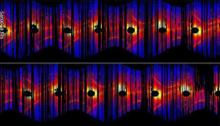

As ML and AI tools become more widespread, a team of researchers in LLNL’s Computing and Physical and Life Sciences directorates is working to provide a reasonable starting point for scientists who want to apply ML/AI but lack the relevant background. The team’s work grew out of a Laboratory Directed Research and Development project on feedstock materials optimization, which led to a pair of papers on the types of questions a materials scientist may encounter when using ML tools and on how these tools behave. Explainable artificial intelligence (XAI) is an emerging field that helps interpret complicated machine learning models, providing an entry point to new applications. XAI may use tools such as visualizations that identify which features a neural network finds important in a dataset, or surrogate models that approximate a complex model with a simpler, more interpretable one. Read more at LLNL Computing.
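
To make the idea of feature importance concrete, here is a minimal sketch of one common XAI technique, permutation importance: shuffle one input feature at a time and measure how much the model's error grows. It is not the LLNL team's method. The `black_box` function is a hypothetical stand-in for a trained model, and the data are synthetic, generated only for illustration.

```python
import random


def black_box(row):
    # Hypothetical stand-in for a trained model (an assumption for this sketch):
    # it depends strongly on feature 0, weakly on feature 1, and not on feature 2.
    return 3.0 * row[0] + 0.5 * row[1]


def mean_squared_error(model, rows, targets):
    # Average squared difference between model predictions and targets.
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, rng):
    # For each feature, shuffle that column across rows and record how much
    # the error increases relative to the unshuffled baseline.
    baseline = mean_squared_error(model, rows, targets)
    scores = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        scores.append(mean_squared_error(model, shuffled, targets) - baseline)
    return scores


rng = random.Random(0)  # fixed seed so the sketch is repeatable
rows = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(500)]
targets = [black_box(r) for r in rows]  # synthetic targets, not real measurements

importance = permutation_importance(black_box, rows, targets, 3, rng)
for j, score in enumerate(importance):
    print(f"feature {j}: error increase = {score:.3f}")
```

In this toy setup, the feature the model relies on most shows the largest error increase, and the unused feature shows none. A bar chart of these scores is one simple form of the visualizations mentioned above.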