Machine learning tool fills in the blanks for satellite light curves

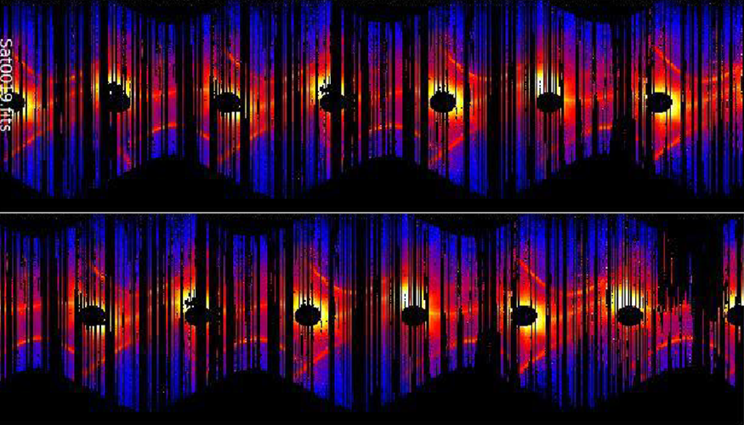

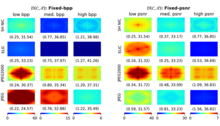

When viewed from Earth, objects in space appear at a specific brightness, called apparent magnitude. Ground-based telescopes can track how an object's brightness changes over time. This time-dependent variation in magnitude is known as the object's light curve, and it can allow astronomers to infer the object's size, shape, material, location, and more. Monitoring the light curves of satellites or debris orbiting Earth can help identify changes or anomalies in these bodies.

However, light curves often have large gaps in their data. Weather, season, dust accumulation, time of day, and eclipses all affect not only the quality of the data but also whether it can be collected at all.

Livermore researchers have developed a machine learning (ML) process for light curve modeling and prediction. Called MuyGPs, the process drastically reduces the size of a conventional Gaussian process problem. Gaussian processes are a type of statistical process that often scales poorly: a conventional fit solves one linear system over all of the data, and its cost grows roughly with the cube of the number of data points. MuyGPs instead limits the correlation of each prediction to its nearest neighboring data points, turning one large linear algebra problem into many smaller problems that can be solved in parallel. This efficiency makes it practical to train on more sensitive parameters and to predict the missing data efficiently. Read more at LLNL Computing.
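To make the nearest-neighbor idea concrete, here is a minimal sketch in Python with NumPy. It is not the MuyGPs implementation or the API of any MuyGPs library. The function names `rbf_kernel` and `nn_gp_predict` are hypothetical, and the squared-exponential kernel, the constant prior mean, and the fixed `length_scale` and `noise` values are all illustrative assumptions. Each missing time point gets its own small k-by-k Gaussian process solve built from only its k nearest observations, rather than one solve over the entire light curve.

```python
import numpy as np


def rbf_kernel(a, b, length_scale):
    """Squared-exponential (RBF) covariance with unit variance between 1-D time arrays."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def nn_gp_predict(t_obs, y_obs, t_new, k=10, length_scale=1.0, noise=1e-2):
    """Hypothetical nearest-neighbor GP: predict magnitudes at times t_new.

    Each prediction uses only the k observations closest in time, so it
    solves a k-by-k system (O(k^3)) instead of one n-by-n system (O(n^3)).
    The per-point solves are independent, so the loop could run in parallel.
    length_scale and noise are fixed, assumed values, not trained ones.
    """
    t_obs = np.asarray(t_obs, dtype=float)
    y_obs = np.asarray(y_obs, dtype=float)
    t_new = np.asarray(t_new, dtype=float)
    mean = y_obs.mean()  # assumed constant prior mean
    preds = np.empty(len(t_new))
    for i, t in enumerate(t_new):
        # Indices of the k observations nearest in time to t.
        idx = np.argsort(np.abs(t_obs - t))[:k]
        tn, yn = t_obs[idx], y_obs[idx] - mean
        # Small neighborhood covariance plus a noise term on the diagonal.
        K = rbf_kernel(tn, tn, length_scale) + noise * np.eye(len(idx))
        k_star = rbf_kernel(np.array([t]), tn, length_scale)[0]
        # GP posterior mean: prior mean + k_*^T K^{-1} (y - mean).
        preds[i] = mean + k_star @ np.linalg.solve(K, yn)
    return preds
```

As a usage example, one can simulate a toy periodic light curve, drop a stretch of observations (standing in for, say, a cloudy night), and fill the gap:

```python
rng = np.random.default_rng(0)
t = np.linspace(0.0, 20.0, 400)
true_mag = 10.0 + 0.5 * np.sin(t)        # toy light curve, not real data
observed = (t < 9.0) | (t > 10.0)        # simulated one-unit gap
y = true_mag[observed] + rng.normal(0.0, 0.02, observed.sum())
filled = nn_gp_predict(t[observed], y, t[~observed], k=20, length_scale=1.0)
```

Keeping k small bounds the cost of each prediction regardless of how long the light curve is. In a real pipeline, the fixed `length_scale` and `noise` values would be replaced by trained hyperparameters.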