Conference paper illuminates neural image compression

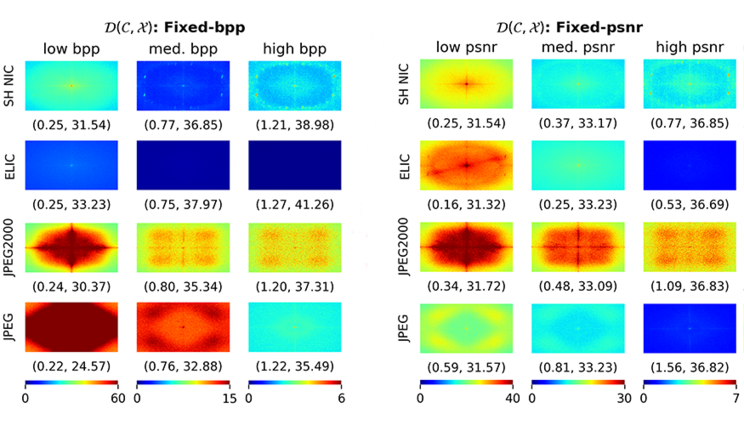

An enduring question in machine learning (ML) concerns performance: How do we know if a model produces reliable results? The best models have explainable logic and can withstand data perturbations, but the performance analysis tools and datasets that would help researchers meaningfully evaluate these models are scarce.

A team from LLNL’s Center for Applied Scientific Computing (CASC) is teasing apart performance measurements of ML-based neural image compression (NIC) models to inform real-world adoption. NIC models use ML algorithms to convert image data into compact numerical representations, enabling lightweight data transmission in low-bandwidth scenarios. For example, a drone with limited battery power and storage must send compressed data to its operator without destroying or losing any important information.

CASC researchers James Diffenderfer and Bhavya Kailkhura, with collaborators from Duke University and Thomson Reuters Labs, co-authored a paper investigating the robustness of NIC methods. The paper was accepted to the 2023 Conference on Neural Information Processing Systems (NeurIPS), held December 10–16 in New Orleans. Founded in 1987, the conference is one of the top annual events in ML and computer vision research. Read more at LLNL Computing.