March 31, 2024

Gradient-Free Deep Learning for Large-Scale Problems

Zeroth-order (ZO) optimization algorithms enable the training of non-differentiable machine learning (ML) pipelines by iteratively estimating the gradient (i.e., the slope of a function) and updating the solution accordingly. This type of optimization has proved useful for relatively small-scale ML problems, but effectively scaling ZO algorithms to train deep neural networks can come at the expense of solution accuracy.
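To make the idea concrete, here is a minimal sketch of a generic ZO loop: it estimates the gradient from function evaluations alone and then takes a descent step. It is an illustration only, not DeepZero's estimation scheme. The names `zo_gradient`, `zo_minimize`, and `loss`, the toy quadratic objective, and the step sizes are all assumptions made for this example.

```python
import numpy as np

def zo_gradient(f, x, mu=1e-4):
    """Estimate the gradient of f at a 1-D point x using only function values.

    Uses a coordinate-wise forward difference: one extra evaluation of f
    per coordinate, with no access to derivatives of f.
    """
    grad = np.zeros_like(x, dtype=float)
    fx = f(x)
    for i in range(x.size):
        step = np.zeros_like(x, dtype=float)
        step[i] = mu
        grad[i] = (f(x + step) - fx) / mu
    return grad

def zo_minimize(f, x0, lr=0.1, steps=200, mu=1e-4):
    """Plain gradient descent driven by the zeroth-order estimate."""
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(steps):
        x -= lr * zo_gradient(f, x, mu)
    return x

# Hypothetical black-box objective: we only ever call it, never differentiate it.
def loss(x):
    target = np.array([1.0, -2.0])
    return float(np.sum((x - target) ** 2))

x_star = zo_minimize(loss, x0=[0.0, 0.0])
print(x_star)
```

Each gradient estimate here costs one function evaluation per coordinate. That cost is negligible for two parameters but grows with the number of parameters, which is the scaling problem described above.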

Developed by LLNL researchers James Diffenderfer, Bhavya Kailkhura, and Konstantinos Parasyris along with colleagues from Michigan State University and the University of California (UC), Santa Barbara, a new deep learning framework called DeepZero overcomes the scalability challenge with a smarter model training pipeline. The International Conference on Learning Representations (ICLR)—a premier ML event taking place in May—will feature the team’s paper “DeepZero: Scaling Up Zeroth-Order Optimization for Deep Model Training.”

Compared to other ZO optimization methods, DeepZero achieves scalable computational efficiency and accuracy via its novel gradient estimation scheme, model training methodology, and elimination of redundant computations. When tested on datasets of various sizes, DeepZero demonstrates state-of-the-art performance in image classification tasks and black-box deep learning applications.

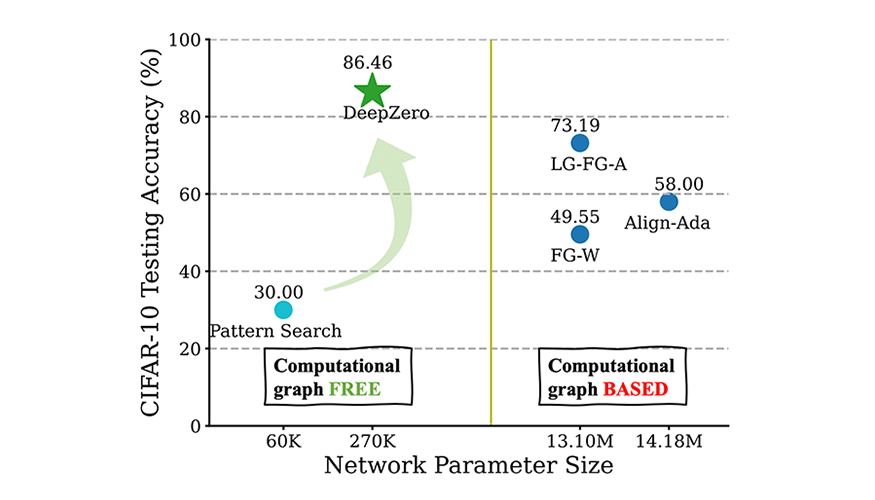

“Our ZO optimization tool can produce solutions close in quality to first-order gradient-based methods, the default ML training approach. But more importantly, it serves as a strategy for handling non-differentiable ML training pipelines,” states Diffenderfer. (Image at left: Accuracy of DeepZero [green star] compared to other methods using CIFAR-10 image classification datasets.)

High-Speed, Low-Error Latent Space Dynamics Framework

ML techniques can boost the effectiveness of reduced order models (ROMs), which simplify a simulation’s numerical computations. One approach, known as latent space dynamics identification (LaSDI), uses neural networks to transform high-fidelity data into simpler, low-dimensional data. However, LaSDI can interpolate the ordinary differential equation coefficients governing the latent space inaccurately, so its predictions are accurate in some regions of the parameter space and inaccurate in others.

Recent advances in LaSDI include iterative data sampling and simultaneous training of the neural networks, ultimately increasing model robustness. But LaSDI methods stumble when the residual, which measures how much the model’s predicted data values violate the underlying physics, is unknown or difficult to compute. In a recent issue of Computer Methods in Applied Mechanics and Engineering, LLNL researchers along with a Cornell University colleague proposed a new LaSDI approach enhanced with Gaussian processes (GPs).

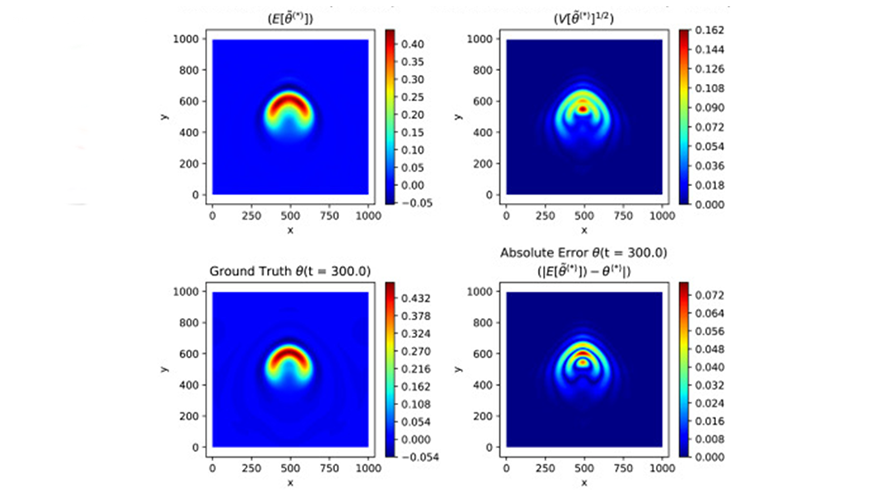

The team’s GPLaSDI framework enables uncertainty quantification of ROM predictions and allows for efficient adaptive training through prioritized selection of additional training data points. By using GPs for latent space interpolation, GPLaSDI achieves better performance (speedups of several hundred to tens of thousands of times) with less error than other LaSDI methods. (Image at left: Latent space dynamics for a rising thermal bubble simulation with a runtime of 300 seconds.)
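As a rough illustration of why GP interpolation helps, the sketch below fits a generic GP to a handful of coefficient values and uses the predictive standard deviation to suggest where to sample next. It is not the GPLaSDI implementation. The kernel, its hyperparameters, the made-up coefficient values, and the "pick the most uncertain candidate" rule are all assumptions chosen for this example.

```python
import numpy as np

def rbf(a, b, length=1.0, var=1.0):
    """Squared-exponential kernel between two 1-D arrays of parameter values."""
    d = a[:, None] - b[None, :]
    return var * np.exp(-0.5 * (d / length) ** 2)

def gp_predict(x_train, y_train, x_test, length=1.0, var=1.0, noise=1e-8):
    """Zero-mean GP regression: posterior mean and standard deviation at x_test."""
    K = rbf(x_train, x_train, length, var) + noise * np.eye(x_train.size)
    Ks = rbf(x_test, x_train, length, var)
    Kss = rbf(x_test, x_test, length, var)
    mean = Ks @ np.linalg.solve(K, y_train)
    cov = Kss - Ks @ np.linalg.solve(K, Ks.T)
    std = np.sqrt(np.clip(np.diag(cov), 0.0, None))
    return mean, std

# Hypothetical data: one latent ODE coefficient learned at four parameter values.
params = np.array([0.0, 1.0, 2.0, 4.0])
coeffs = np.sin(params)  # stand-in values, not from any real simulation

# Interpolate the coefficient across the parameter range, with uncertainty.
candidates = np.linspace(0.0, 4.0, 41)
mean, std = gp_predict(params, coeffs, candidates)

# Assumed selection rule: sample next where the GP is least certain.
next_param = candidates[np.argmax(std)]
print(next_param)
```

The standard deviation is near zero at the four sampled parameters and largest in the widest gap between them, so the suggested point falls in the least explored region.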

“GPLaSDI captures latent space dynamics and provides uncertainty quantification in the ROM context. Its value for scientific and engineering applications comes from its ability to autonomously select training data points and generate confidence intervals,” explains co-author Youngsoo Choi. To learn more about ROMs and related research at LLNL, visit the libROM website.

Join LLNL at AI for Critical Infrastructure Workshop

The 33rd annual International Joint Conference on Artificial Intelligence (IJCAI) will be held in Jeju Island, South Korea. DSI seminar and workshop coordinator Felipe Leno da Silva and Center for Advanced Signal and Image Sciences director Ruben Glatt are co-hosting an IJCAI workshop on critical infrastructures (CIs) and invite contributions by April 26. Visit the workshop website for paper submission guidelines. The workshop will take place on one day during the August 3–5 timeframe (exact date to be determined).

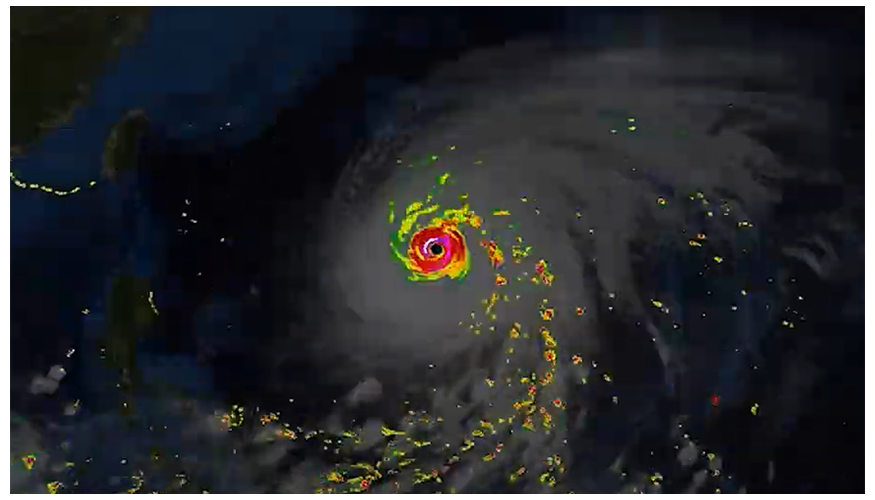

CIs are systems related to the delivery of vital public services, such as transportation systems, energy generation and transmission, telecommunication networks, hospitals, and information technology infrastructure. While the preservation, improvement, and reliability of these systems are a priority for the government and industry sectors, disruptions are common due to the complexity and multitude of factors that contribute to the operability of those services. The recent increase in connectivity and fast-paced AI advances bring new opportunities and risks to CI. AI systems can be introduced to increase efficiency and reliability. They can also be used to help repel sophisticated cyberattacks and mitigate natural disturbances, such as those caused by weather issues intensified by global warming.

However, applying and evaluating AI techniques in CI is not a trivial matter due to the complexity and impact of these systems. How do we ensure the safety of the public and critical infrastructure as AI systems become increasingly autonomous? This CI workshop aims to establish an intellectual forum where cutting-edge empirical and theoretical advancements in AI applied to CIs can be shared and scrutinized. The workshop also serves as an incubator for identifying emerging problems where AI can be instrumental.

Video: Predicting Climate Change Impacts on Infrastructure

At LLNL, electrical grid experts and climate scientists work together to bridge the gap between infrastructure and climate modeling. By taking into consideration weather variables that directly impact the electrical grid, such as wildfire, flooding, wind, and sunlight, researchers can improve electrical grid model projections for a more stable future. In a new video, LLNL computer scientist Jean-Paul Watson and atmospheric physicist Philip Cameron-Smith explain how the Lab uses computer modeling and supercomputers to address the impacts of climate change and increase the resilience of the nation's infrastructure. Climate models inform electrical grid models, and ML algorithms trained on the former help researchers improve the latter.

Seminar Explores AI Applied to Geographic Data

The DSI’s February seminar was presented by Shawn Newsam, Professor of Computer Science and Engineering and Founding Faculty at the University of California, Merced. In “GeoAI: Past, Present, and Future,” Newsam discussed two themes: (1) Spatial data is special in that space (and time) provides a rich context in which to analyze it. The challenge is how to incorporate spatial context into AI methods when adapting or developing them for geographic data, that is, how to make them spatially explicit. (2) Location is a powerful key (in the database sense) that allows us to associate large amounts of different kinds of data. This can be especially useful, for example, for generating large collections of weakly labelled data when training ML models. The seminar also explored near-term opportunities in GeoAI related to foundation models, particularly for multimodal data.

Speakers’ biographies and abstracts are available on the seminar series web page, and many recordings are posted to the YouTube playlist. To become or recommend a speaker for a future seminar, or to request a WebEx link for an upcoming seminar if you’re outside LLNL, contact DSI-Seminars [at] llnl.gov.

Meet One LLNL Data Scientist…

Qingkai Kong is a staff scientist supporting LLNL’s Geophysics Monitoring Program, Nuclear Threat Reduction, and the Atmospheric, Earth, and Energy Division, where he contributes to a variety of ML-focused projects. As a part of the Source Physics Experiment project, Kong and colleagues use physics to improve the generalization capabilities of their ML model. He is heavily involved in seismology and ML through the Laboratory Directed Research and Development Program and the Low-Yield Nuclear Monitoring project, as well as carbon storage research through the SMART (Science-informed Machine Learning for Accelerating Real Time Decisions in Subsurface Applications) project. Kong enjoys the constant learning opportunities that his work offers, stating, “Combining decades of physics knowledge with recently developed deep learning methods is both an exciting and challenging area.” He is also an active LLNL community member (mentoring summer interns, speaking at an annual meeting of the Seismological Society of America, and leading the Machine Learning in Seismology discussion group) and coaches a local children’s soccer team. Prior to joining the Lab in 2021, Kong was a data science researcher at UC Berkeley and a researcher in Google’s visiting faculty program. He earned his PhD in geophysics from UC Berkeley.

…and Another!

Aisha Dantuluri is a staff data scientist in LLNL’s Applications, Simulations, and Quality division. Her projects mainly contribute to the Lab’s Weapon Physics and Design (WPD) program. She uses ML for image processing to make better quantitative use of simulated and experimental radiographic data for stockpile certification. She also works on automating time series analysis in the Diagnostics Development Group, which involves jump-off detection in photonic Doppler velocimetry data. Data science inspires Dantuluri due to its collaborative nature and range of applications. “Everybody is open to sharing knowledge, and I think that makes data science a fun thing to work on. You can pick any problem in any field, and they probably need a data scientist,” she said. Since she began working at LLNL in 2020, Dantuluri has sought to improve the sense of community amongst data scientists in different organizations. She is the current ML working group coordinator for WPD and hopes to foster more involvement with the Strategic Deterrence organization’s data science community in the future. Dantuluri earned a master’s degree in computational science at UC San Diego and a bachelor’s degree in engineering physics at the Indian Institute of Technology, Hyderabad.