July 18, 2023

Open Data Initiative Adds CFD Simulation Dataset

The DSI’s Open Data Initiative (ODI) recently added a new project to the catalog: Computational Fluid Dynamics Simulation Data of Spatial Deposition. In fluid mechanics problems, computational fluid dynamics (CFD) uses data structures and numerical analysis to investigate the flow of liquids and gases. For instance, CFD models can simulate atmospheric transport and dispersion, as in this dataset’s simulations of wind-driven pollutant dispersion and deposition. The data is then used to train machine learning models that, in turn, can predict spatial patterns with high accuracy.

This new addition to the ODI catalog includes 16,000 CFD simulations—15,000 training cases and 1,000 test cases—post-processed for machine learning training. The physics problem is a 2D spatial pattern formed from a pollutant that has been released into the atmosphere and dispersed for up to an hour while undergoing deposition to the surface.

Like many ODI offerings, this dataset of more than 6 gigabytes is also available in the UC San Diego library’s digital collection. The data was prepared by LLNL researchers M. Giselle Fernández-Godino, Donald Lucas, and Nipun Gunawardena, who collected it during research funded by the National Nuclear Security Administration, Defense Nuclear Nonproliferation Research and Development (NNSA DNN R&D). “We decided to release the dataset so others can benefit from our work in atmospheric transport,” says Fernández-Godino, a simulation data scientist in LLNL’s Atmospheric, Earth, and Energy Division.

MDS-Rely Website Spotlights LLNL

The Materials Data Science Reliability Center (MDS-Rely) is a National Science Foundation Industry University Cooperative Research Center between Case Western Reserve University and the University of Pittsburgh. The Center partners with government, academia, and industry to accelerate materials R&D through rigorous data science methods and analytics, ultimately improving product reliability and lifetime. LLNL is one of eight member organizations that help guide MDS-Rely’s research directions.

A recent Member Spotlight article summarizes the Lab’s materials and manufacturing expertise and vast application space—such as understanding how materials age, investigating material performance under extreme conditions, extending material lifetimes, optimizing feedstocks, developing additive manufacturing, and much more. DSI director Brian Giera, who has worked closely with MDS-Rely members since LLNL joined in 2021, states, “This relationship enables us to tap into a wider circle of data science researchers and capabilities, and we can provide students with exposure to LLNL via internship opportunities and our unique datasets.”

New Report Unveils Roadmap to Harness the Power of AI in Science

A new report, the product of a series of workshops held in 2022 under the guidance of the DOE’s Office of Science and NNSA, lays out a comprehensive vision to expand work in the scientific use of artificial intelligence (AI) by building on existing strengths in world-leading high-performance computing systems and data infrastructure. The sessions brought together more than 1,000 scientists, engineers, and staff from DOE labs, academia, and technology companies to talk about the rapidly emerging opportunities and challenges of scientific AI.

The report identifies six AI capabilities and describes their potential to transform DOE/NNSA program areas. These range from control of complex systems like power grids to foundation models like the large language models behind generative AI programs such as ChatGPT. The report also lays out the cross-cutting technology needed to enable these AI-powered transformations and describes scientific “grand challenges” where AI plays a major role in making progress toward solutions. These challenges include improved climate models, the search for new quantum materials, new nuclear reactor designs for clean energy, and more.

HPC, AI, and CogSim Helped LLNL Conquer Fusion Ignition

For hundreds of LLNL scientists on the design, experimental, and modeling and simulation teams behind inertial confinement fusion (ICF) experiments at the National Ignition Facility, the results of the now-famous December 5, 2022, ignition shot didn’t come as a complete surprise. The “crystal ball” that gave them increased pre-shot confidence in a breakthrough involved a combination of detailed high-performance computing (HPC) design and a suite of methods combining physics-based simulation with machine learning. LLNL calls this cognitive simulation, or CogSim.

The detailed HPC design uses the world’s largest supercomputers and its most complicated simulation tools to help subject-matter experts choose new directions to improve experiments. CogSim then employs AI to couple hundreds of thousands of HPC simulations to the set of past ICF experiments. These CogSim tools are providing scientists with new views into the physics of ICF implosions and a more accurate predictive capability when considering parameters such as laser energy and target-design specifications.

“It’s almost like looking into the future, based on what we've seen in the past about what might happen,” says Brian Spears, deputy modeling lead for ICF. “Our traditional design tools and experts say, ‘These are the knobs that you should adjust,’ and then the new CogSim tools say, ‘Given those adjustments and patterns from prior experiments, that looks like it's going to be really successful.’”

DOE Data Days Return in October

The 2023 DOE Data Days (D3) Workshop will return to LLNL on October 24–26, held in person over three days from Tuesday to Thursday. Poster and presentation abstracts are now being accepted through August 4. Registration will open on August 21, which is also when presenters will be notified. Visit the D3 website for additional details.

D3 will provide a forum to collaborate on data management ideas and strategies and build an actionable plan forward to drive innovation and progress across DOE and NNSA. The workshop brings together data management practitioners, researchers, and project managers from DOE and the national laboratories to promote data management as a means to higher quality and more efficient research and analysis. Presentations and posters from the DOE data management community will cover five themes:

- Data Intensive Computing

- Cloud and Hybrid Data Management

- Data Access, Sharing, and Sensitivity

- Data Curation and Metadata Standards

- Data Governance and Policy

Breakout sessions will provide the opportunity to delve further into high-interest topics in DOE data management and facilitate collaboration across institutions. Keynote speakers from DOE and other government agencies will join us to highlight policy and implementation strategy success in their work.

(Image at left: The D3 2022 report shows that participants came from 27 organizations across the DOE complex and its collaborators.)

Last Chance to Register for the August CASIS Workshop

LLNL’s Center for Advanced Signal and Image Sciences is hosting the CASIS 2023 Workshop on August 2–3 to explore the latest advancements in signal and image sciences. The free event is open to engineers, scientists, and students interested in signal and image sciences, and it will be held at the Livermore Valley Open Campus (LVOC). Attendees can enjoy outstanding presentations, connect with experts across a broad range of topics, and engage in stimulating discussions. The agenda includes talks, interactive poster sessions, and complimentary coffee and lunch breaks. Invited speakers will discuss fusion ignition, large language models, and quantum computing. The registration deadline is July 25. Visit the CASIS website for the agenda, workshop tracks, organizing committee, directions to LVOC, and more.

Conference Roundup

LLNL’s data science researchers have had papers accepted at several recent and upcoming conferences that recognize ground-breaking research and techniques. PDFs or abstracts are linked where available.

International Conference on Machine Learning (ICML):

- Compute-efficient deep learning: algorithmic trends and opportunities – Brian Bartoldson and Bhavya Kailkhura with a MosaicML colleague

- Target-aware generative augmentations for single-shot adaptation – Kowshik Thopalli and Jayaraman Thiagarajan with Arizona State University colleagues

Conference on Computer Vision and Pattern Recognition (CVPR):

- Cross-GAN auditing: Unsupervised identification of attribute level similarities and differences between pretrained generative models – Shusen Liu, Rushil Anirudh, Thiagarajan, and Peer-Timo Bremer with colleagues from Oregon State University

International Conference on Acoustics, Speech, and Signal Processing (ICASSP):

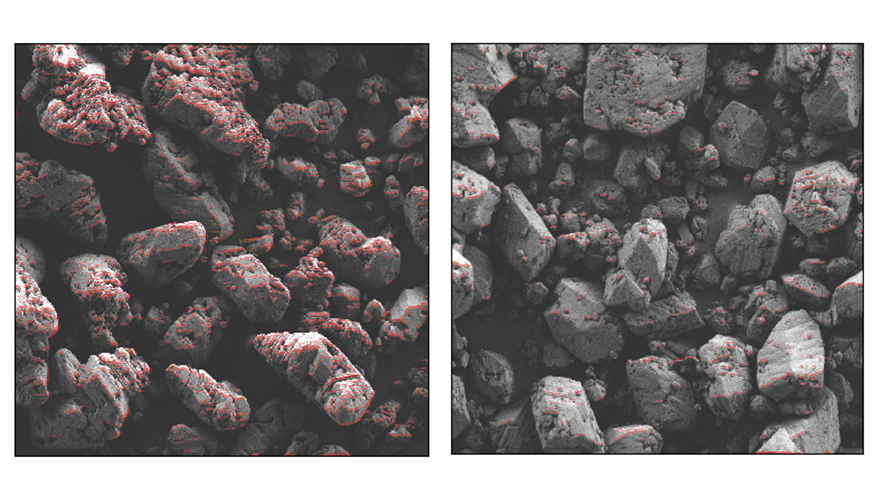

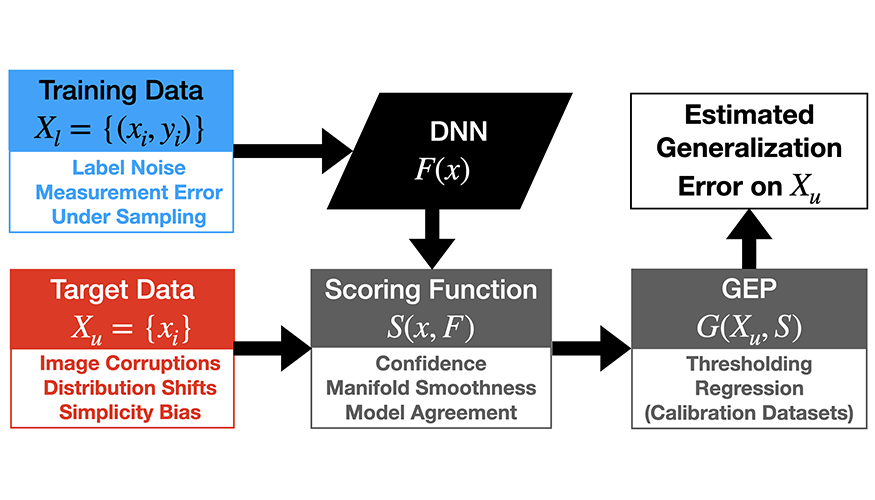

- On the efficacy of generalization error prediction scoring functions – Thiagarajan with University of Michigan colleagues (Image at left: generalization error prediction workflow.)

- Robust time series recovery and classification using test-time noise simulator networks – Anirudh with Arizona State University and Mitsubishi Electric Research Laboratories colleagues

International Conference on Autonomous Agents and Multiagent Systems (AAMAS):

- Multi-agent reinforcement learning for adaptive mesh refinement – Jiachen Yang, Ketan Mittal, Socratis Petrides, Brenden Petersen, Daniel Faissol, and Robert Anderson, with colleagues from Texas A&M University and Brown University

Medical Imaging with Deep Learning (MIDL):

- Know your space: inlier and outlier construction for calibrating medical OOD detectors – Yamen Mubarka, Anirudh, and Thiagarajan with colleagues from Arizona State University and Microsoft

Seminar Explores an Inverse Problem in Computer Vision and Graphics

At the DSI’s July 11 technical seminar, Dr. Sara Fridovich-Keil from Stanford University presented “Photorealistic Reconstruction from First Principles.” She discussed two dominant algorithmic approaches for solving inverse problems (compressed sensing and deep learning), then focused on an inverse problem central to computer vision and graphics: given calibrated photographs of a scene, recover the optical density and view-dependent color of every point in the scene.

Fridovich-Keil is a postdoctoral scholar at Stanford University, where she works on foundations and applications of machine learning and signal processing in computational imaging. She is currently supported by a National Science Foundation Mathematical Sciences Postdoctoral Research Fellowship. Fridovich-Keil received her PhD in electrical engineering and computer sciences from UC Berkeley and her BSE in electrical engineering from Princeton University.

The next seminar is scheduled for Friday, July 28, and will feature Joyce Ho of Emory University discussing “Tensor Factorization for Biomedical Representation Learning.” Speakers’ biographies and abstracts are available on the seminar series web page, and many recordings are posted to the YouTube playlist. To become or recommend a speaker for a future seminar, or to request a WebEx link for an upcoming seminar if you’re outside LLNL, contact datascience [at] llnl.gov.

Meet an LLNL Data Scientist

Since joining the Lab’s Computational Engineering Division in 2022, Jiachen Yang has been developing machine learning (ML) methods that speed up computational antibody design for rapid response against new pathogens. He is excited about this intersection of ML and science, stating, “New ML techniques accelerate science by unlocking the latent information of large scientific datasets and simulations, while science provides new challenges such as interpretability and symmetry constraints that drive ML advances.” Yang also develops new deep reinforcement learning algorithms for adaptive mesh refinement, as described in papers accepted to the 2023 AISTATS and AAMAS conferences. He enjoys working on unsolved problems and creating new approaches for old problems—enthusiasm he will impart while mentoring a Data Science Summer Institute intern this year. “Having worked at multiple industry research labs during my graduate studies, I find that internships are valuable for sparking new ideas and research directions, and I find value in creating such opportunities for others,” says Yang. He holds a PhD in Machine Learning from Georgia Tech and is part of LLNL’s award-winning deep symbolic optimization team.