Aug. 25, 2022

Open Data Initiative Adds Simulated Cardiac Signals Dataset

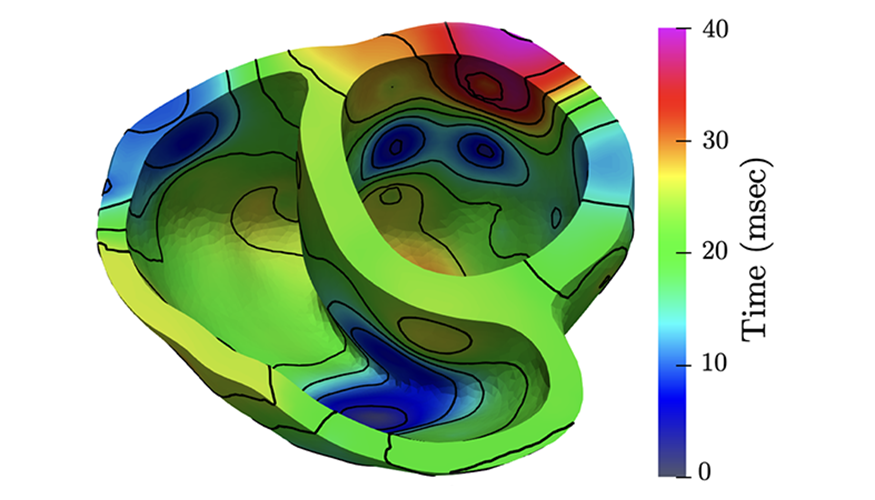

Building on LLNL’s Cardioid code, which simulates the electrophysiology of the human heart, a research team has conducted a computational study to generate a dataset of cardiac simulations at high spatiotemporal resolution. The dataset, which is publicly available for further cardiac machine learning (ML) research via the DSI’s Open Data Initiative, was built using real cardiac bi-ventricular geometries and clinically inspired endocardial activation patterns under different physiological and pathophysiological conditions.

The dataset consists of pairs of computationally simulated intracardiac transmembrane voltage recordings and electrocardiogram (ECG) signals. In total, 16,140 organ-level simulations were performed on LLNL’s Lassen supercomputer, each concurrently using 4 GPUs and 40 CPU cores. Each simulation produced a pair of signals: a 12-lead ECG and 75 transmembrane voltage traces, both spanning 500 ms. The data were preprocessed and saved as NumPy arrays for convenience.
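As a rough illustration of how such paired arrays might be organized for ML training, the sketch below validates and stacks (ECG, voltage) pairs. It assumes time runs along the first axis at one sample per millisecond, so each ECG is a 500 × 12 array and each voltage record is 500 × 75; it also treats the ECG as the model input and the voltages as the target, following the paper's title. The constants and the helpers `check_pair` and `stack_pairs` are hypothetical, and placeholder zeros stand in for the released files, whose names and exact orientation should be taken from the dataset itself.

```python
import numpy as np

# Assumed layout (hypothetical): time on the first axis, one sample per ms.
N_TIME = 500   # 500 ms window
N_LEADS = 12   # 12-lead ECG
N_VM = 75      # transmembrane voltage traces per simulation


def check_pair(ecg: np.ndarray, vm: np.ndarray) -> None:
    """Raise ValueError if one (ECG, voltage) pair lacks the assumed shape."""
    if ecg.shape != (N_TIME, N_LEADS):
        raise ValueError(f"ECG shape {ecg.shape}, expected {(N_TIME, N_LEADS)}")
    if vm.shape != (N_TIME, N_VM):
        raise ValueError(f"voltage shape {vm.shape}, expected {(N_TIME, N_VM)}")


def stack_pairs(pairs):
    """Stack validated pairs into (n, 500, 12) inputs and (n, 500, 75) targets."""
    for ecg, vm in pairs:
        check_pair(ecg, vm)
    X = np.stack([ecg for ecg, _ in pairs])  # 12-lead ECGs as model inputs
    Y = np.stack([vm for _, vm in pairs])    # transmembrane voltages as targets
    return X, Y


# Placeholder arrays stand in for files that would be read with np.load(...).
pairs = [(np.zeros((N_TIME, N_LEADS)), np.zeros((N_TIME, N_VM))) for _ in range(3)]
X, Y = stack_pairs(pairs)
print(X.shape, Y.shape)  # (3, 500, 12) (3, 500, 75)
```

Validating shapes up front is a cheap guard against silently transposed arrays, which would otherwise still stack and train without error.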

This project was funded by the Laboratory Directed Research and Development program (18-LW-078, principal investigator: Robert Blake). The paper “Intracardiac Electrical Imaging Using the 12-Lead ECG: A Machine Learning Approach Using Synthetic Data” was accepted to the 2022 Computing in Cardiology international scientific conference. Co-authors are LLNL’s Mikel Landajuela, Rushil Anirudh, and Robert Blake, along with Joe Loscalzo from Harvard Medical School. “This dataset will help the cardiac ML community to build better tools for monitoring the heart’s function,” says Landajuela.

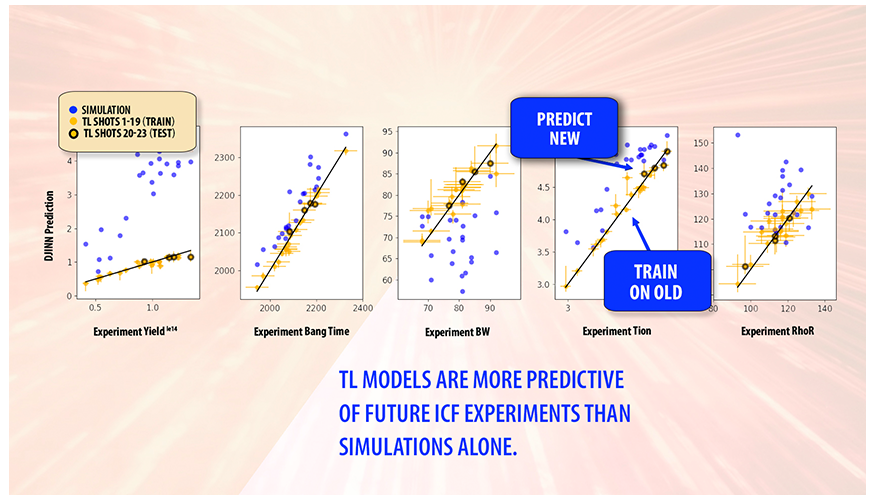

Best Paper Award for ML-Based Approach to ICF Experiments

The IEEE Nuclear and Plasma Sciences Society (NPSS) announced an LLNL team as the winner of its 2022 Transactions on Plasma Science Best Paper Award for their work applying machine learning to inertial confinement fusion (ICF) experiments. In the paper, lead author Kelli Humbird and co-authors propose a novel technique for calibrating ICF experiments by combining ML with experimental data via transfer learning (TL), a method in which models are trained on a task and then partially retrained to solve a separate but related task with limited data.

The team, which includes Luc Peterson and Brian Spears of LLNL and Ryan McClarren from the University of Notre Dame, introduced the concept of hierarchical TL, where neural networks trained on low-fidelity models are calibrated to high-fidelity models and then applied to experimental data. The researchers applied the technique to a database of ICF simulations and experiments carried out at the University of Rochester’s Omega Laser Facility, finding that combining deep neural networks with experimental data produced better, more predictive models of ICF experiments than simulations alone.

(Image at left: The figure plots actual values [x-axis] versus predicted values [y-axis] for five measured quantities of interest in Omega ICF experiments—the neutron yield, bang time, burn width, ion temperature, and areal density.)
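The hierarchical transfer learning idea described above can be illustrated with a toy NumPy sketch. It is not the team's implementation: the three "fidelity levels" are made-up 1-D functions, the network has a single tanh hidden layer trained by plain gradient descent, and only the output layer is retrained at each calibration step, with a ridge penalty that keeps the new weights close to the previous stage so that a few samples suffice. All function names and hyperparameters are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


# Hypothetical stand-ins for the levels of the hierarchy.
def low_fi(x):
    return np.sin(3 * x)              # cheap, biased "simulation"


def high_fi(x):
    return 1.2 * np.sin(3 * x) + 0.3  # costlier, more accurate "simulation"


def features(x, W1, b1):
    """Tanh hidden layer plus a constant column for the output bias."""
    H = np.tanh(x[:, None] * W1 + b1)
    return np.hstack([H, np.ones((len(x), 1))])


def pretrain(x, y, n_hidden=16, lr=0.05, steps=3000):
    """Train all weights on plentiful low-fidelity data (full-batch gradient descent)."""
    W1 = rng.normal(0.0, 2.0, n_hidden)
    b1 = rng.normal(0.0, 1.0, n_hidden)
    w = rng.normal(0.0, 0.1, n_hidden + 1)  # output weights, bias last
    n = len(x)
    for _ in range(steps):
        A = features(x, W1, b1)
        err = A @ w - y                      # residuals of 0.5 * mean squared error
        grad_w = A.T @ err / n
        # Backpropagate through tanh (A[:, :-1] holds the hidden activations).
        dZ = np.outer(err, w[:-1]) * (1.0 - A[:, :-1] ** 2) / n
        grad_W1 = dZ.T @ x
        grad_b1 = dZ.sum(axis=0)
        w -= lr * grad_w
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
    return W1, b1, w


def recalibrate(x, y, W1, b1, w_prev, lam=1e-2):
    """Retrain only the output layer; the hidden layer (W1, b1) stays frozen.

    Minimizes ||A w - y||^2 + lam * ||w - w_prev||^2.
    """
    A = features(x, W1, b1)
    lhs = A.T @ A + lam * np.eye(A.shape[1])
    return w_prev + np.linalg.solve(lhs, A.T @ (y - A @ w_prev))


def rmse(pred, target):
    return float(np.sqrt(np.mean((pred - target) ** 2)))


# Stage 1: abundant low-fidelity data trains the whole network.
x_lo = np.linspace(-1.0, 1.0, 200)
W1, b1, w_lo = pretrain(x_lo, low_fi(x_lo))

# Stage 2: a few high-fidelity runs recalibrate the output layer.
x_hi = np.linspace(-1.0, 1.0, 12)
w_hi = recalibrate(x_hi, high_fi(x_hi), W1, b1, w_lo)

# Stage 3: a handful of noisy "experiments" recalibrate it again.
x_exp = rng.uniform(-1.0, 1.0, 5)
y_exp = high_fi(x_exp) + rng.normal(0.0, 0.02, 5)
w_exp = recalibrate(x_exp, y_exp, W1, b1, w_hi)

A_hi = features(x_hi, W1, b1)
print("before calibration:", rmse(A_hi @ w_lo, high_fi(x_hi)))
print("after calibration: ", rmse(A_hi @ w_hi, high_fi(x_hi)))
```

Because each calibration stage refits only the small output layer while reusing features learned from the low-fidelity data, it is meant to need far fewer samples than training from scratch. Choosing how many layers to freeze is the main design knob in this kind of transfer learning.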

Panel Discussion Spotlights COVID-19 R&D

The DSI’s career panel series continued on June 28 to highlight some of LLNL’s COVID-19 research projects. Three data scientists—Emilia Grzesiak, Derek Jones, and Priyadip Ray—joined moderator and data scientist Stewart He to talk about their work in drug screening, protein–drug compounds, antibody–antigen sequence analysis, and risk factor identification.

The Lab’s mission and varied scientific portfolio appealed to the panelists during their job searches, and they were able to pivot their research efforts when the pandemic began. They agreed that teaming up with biology and biomedicine colleagues helps them focus their data science efforts in the right direction for a project. Marisa Torres, GS-CAD Bioinformatics group leader and co-organizer of the event, stated, “The panel highlighted their diverse approaches to building new predictive disease capabilities at the Lab and how we can forge our careers to support a greater good, such as with COVID research.”

The career panel series will continue this summer with a session featuring former LLNL interns who now hold full-time positions at the Lab, and a session with additive manufacturing researchers and materials scientists.

Another Successful Year for the Data Science Challenge

This spring and summer, the DSI again teamed up with two University of California (UC) campuses, Merced and Riverside, for the Data Science Challenge (DSC). The intensive two-week program has run for four years with UC Merced and two with UC Riverside, tasking undergraduate and graduate students with addressing a real-world scientific problem using data science techniques. This year, the students worked with a database of virtual molecular screening results, chemical and protein structures, and designed synthetic antibodies, with the goal of identifying drug compounds that could be used to create medicines that prevent and treat COVID-19 infections.

“After two years of having the students work remotely, it was very exciting to see everyone engaged. The students accomplished so much in such a short time,” said UC Merced’s Dr. Suzanne Sindi, who collaborated with LLNL’s DSC director Brian Gallagher. Joining Gallagher as LLNL mentors were Hyojin Kim, Cindy Gonzales, Amar Saini, Mary Silva, and Omar DeGuchy.

UC Riverside professor Vagelis Papalexakis was also pleased with DSC participation. He noted, “This year we were fortunate to host part of the program in person. Students actively collaborated and brainstormed with each other within their own teams and across teams, exchanging cool ideas to try, sharing their experiences in impromptu mini lectures, and providing troubleshooting tips. The challenge problem engaged and excited newcomers to data science as well as experienced PhD students who came up with new research ideas that went beyond standard data science methods. I am looking forward to next year’s iteration!” Learn more about the DSC on the DSI website. (Image at left: Papalexakis [foreground], Gallagher [far right], and UC Riverside students pose for a selfie.)

New Research Presented at Leading ML Conference

LLNL research appeared in multiple workshops as well as a spotlight talk at the International Conference on Machine Learning (ICML), which took place on July 17–23. Jayaraman Thiagarajan, who co-authored most of these papers, states, “Over the last few years, ML researchers at LLNL have made significant progress in characterizing and improving robustness/reliability of deep models in practical applications. It is great to see our efforts recognized by the international ML community.” Available PDF, preprints, and/or open-access articles are linked below.

- Accurate Calibration of Agent-based Epidemiological Models with Neural Network Surrogates – Anirudh, Thiagarajan, Bremer, and Fred Streitz with Los Alamos National Laboratory colleagues

- Data-Efficient Scientific Design Optimization with Neural Network Surrogates – Thiagarajan, Rushil Anirudh, Yamen Mubarka, Irene Kim, Peer-Timo Bremer, Luc Peterson, Brian Spears, and former LLNL intern Vivek Narayanaswamy

- Domain Alignment Meets Fully Test-Time Adaptation – Thiagarajan, former LLNL intern Kowshik Thopalli, and an Arizona State University colleague

- Exploring the Design of Adaptation Protocols for Improved Generalization and Machine Learning Safety – Thiagarajan, former LLNL intern Puja Trivedi, and a University of Michigan colleague

- Improved StyleGAN-v2 Based Inversion for Out-of-Distribution Images – Thiagarajan, summer intern Rakshith Subramanyam, and Arizona State University colleagues (see image at left and caption below)

- Machine Learning-Powered Mitigation Policy Optimization in Epidemiological Models – Thiagarajan, Anirudh, Bremer, and Fred Streitz with Los Alamos National Laboratory colleagues

- Models Out of Line: A Fourier Lens on Distribution Shift Robustness – Brian Bartoldson, James Diffenderfer, Bhavya Kailkhura, Bremer, and a UC Berkeley colleague

- Revisiting Inlier and Outlier Specification for Improved Out-of-Distribution Detection – Yamen Mubarka, Rushil Anirudh, and Thiagarajan with colleagues from Arizona State University and Microsoft

- Training Calibration-Based Counterfactual Explainers for Deep Learning Models in Medical Image Analysis, published in Nature – Thiagarajan, Thopalli, and Arizona State University colleagues

- Using Direct Error Predictors to Improve Model Safety and Interpretability – Thiagarajan and colleagues from Arizona State University and Microsoft

Image at left: SPHInX is a new inversion technique for using StyleGAN v2 with any out-of-distribution (OOD) data. In addition to handling a wide range of distribution shifts, SPHInX can support accurate reconstruction, semantic editing, and ill-posed tasks such as denoising or super-resolution with OOD images. The top row shows semantic editing of cartoon images (OOD) using a StyleGAN trained only on normal face images. In scientific problems where collecting large datasets is challenging, SPHInX can help repurpose general-purpose generative models (for example, for diabetic retinopathy images).

Open-Source Data Science Toolkit for Energy

As the number of smart meters and the demand for energy are expected to increase by 50% by 2050, so will the amount of data those smart meters produce. While energy standards have enabled large-scale data collection and storage, making the most of this data to mitigate costs and consumer demand has been an ongoing focus of energy research. An LLNL team has developed GridDS, an open-source data science toolkit for power and data engineers that will provide an integrated energy data storage and augmentation infrastructure, as well as a flexible and comprehensive set of state-of-the-art machine learning models. By providing an integrated software platform to train and validate machine learning models, GridDS will help improve the efficiency of distributed energy resources, such as smart meters, batteries, and solar photovoltaic units.

“Until now, no open-source platforms have provided data integration or machine learning models. The few existing platforms have been proprietary and not available to the broader research community,” said principal investigator and data scientist Indra Chakraborty at the Laboratory’s Center for Applied Scientific Computing. “As an open-source toolkit, GridDS opens the door to data and power scientists everywhere who are working on these challenges and want to make the most of this data.”

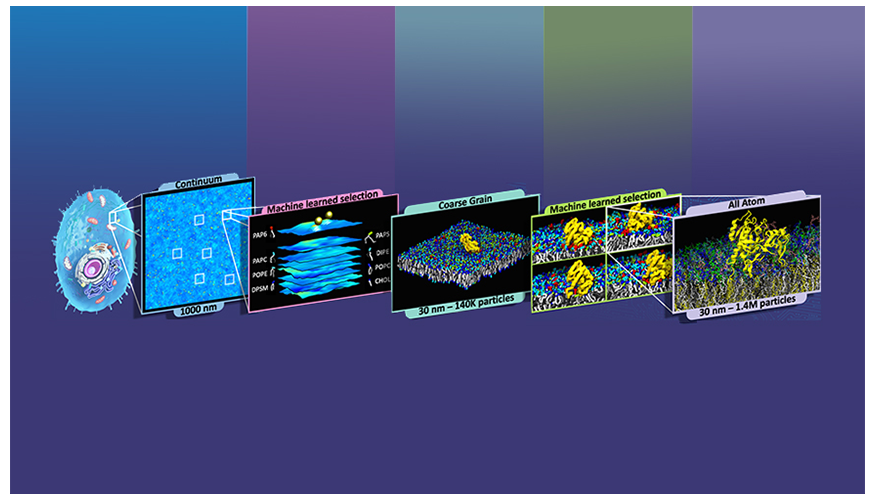

LLNL Cancer Research Goes Exascale

An LLNL team will be among the first researchers to perform work on the world’s first exascale supercomputer, Oak Ridge National Laboratory’s Frontier, when they use the system to model cancer-causing protein mutations. Led by LLNL computer scientist Harsh Bhatia, the team was awarded limited access to Frontier under the DOE’s Advanced Scientific Computing Research (ASCR) Leadership Computing Challenge (ALCC) program. Over the next year, Bhatia and his team will use the allocated computing time to advance their previous work, applying their Multiscale Machine-Learned Modeling Infrastructure (MuMMI) computing framework and artificial intelligence to model and predict how RAS and RAF proteins interact with each other and with lipids on a realistic cell membrane.

“Cancers are among the top threats to human life, and for cancer research to be among the first few scientific applications on the first exascale machine is both a necessary and a fitting match,” Bhatia said. Computational biologist Helgi Ingólfsson added that the team is excited to expand and demonstrate their MuMMI framework on Frontier. He stated, “Addressing the needs of exascale—scalability and throughput, effective use of heterogeneous resources and AI-driven simulations—are all challenges that will be useful even beyond our current work on cancer research and could translate to other important applications.”

Defending U.S. Critical Infrastructure from Nation-State Cyberattacks

For many years, LLNL has been conducting research on cybersecurity while also defending its own systems and networks from cyberattacks. As featured in a recent issue of Science & Technology Review, the Lab has developed an array of capabilities to detect and defend against cyberintruders targeting IT networks, and it has worked with government agencies and private-sector partners to share its cybersecurity knowledge with the wider cyberdefense community.

LLNL has developed the immune infrastructure framework to protect critical infrastructure from these national security threats. This framework comprises four layers: understanding the systems, keeping the adversary out, detecting and responding to intrusions, and operating through compromise. Development of this approach draws on the Lab’s ability to pull together multidisciplinary expertise in cybersecurity, data science and ML, power-grid engineering, infrastructure, and systems analysis to address this challenge. “Our vision is to bring together equipment vendors and asset owners so they can see how to incorporate immune infrastructure technology and this layered framework in their own devices,” says Nate Gleason, leader of the Lab’s Cyber and Infrastructure Resilience Program. “They will receive firsthand experience incorporating these technologies while we identify the best solutions.”

Virtual Seminar Explores DL Models for Atomic Interactions

The DSI’s July 20 seminar featured Joshua Vita, a PhD student at the University of Illinois Urbana-Champaign. His presentation, “Interpretability in Deep Learning Models for Atomic-Scale Simulations,” discussed research exploring the necessary complexity of deep learning (DL) models for describing atomic interactions, as well as techniques for leveraging generative models to design more interpretable and transferable potentials. His team is building an open-source database of interatomic potential training data to use with these techniques, with the aim of gaining fundamental physical insights through a data-driven approach.

Vita holds degrees in Materials Science and Mathematics from the University of Arizona, interned at Sandia National Laboratories developing image analysis software, worked with the OpenKIM/ColabFit team designing a framework for archiving materials data, and developed multiple software packages for fitting interatomic potentials during his graduate research.

Meet an LLNL Data Scientist

Hyojin Kim is a data scientist and ML researcher at LLNL’s Center for Applied Scientific Computing. His recent research in ML and computer vision focuses on applications in computed tomography, AI-driven drug discovery, scalable and distributed deep learning, and multimodal image analysis. He also has hands-on experience applying GPU computing to challenging problems in these areas. Kim says that balancing research and development, as well as learning domain knowledge, is crucial: “I often see data scientists trying to apply a new technique to a particular domain application where it may not be suitable.” This summer, Kim mentored students from two University of California campuses in the DSI’s Data Science Challenge to accelerate drug discovery for COVID-19. Of the intensive two-week program, he states, “Many of the students I met were enthusiastic, and some of them came up with brilliant ideas that I never thought about before. Students majoring in fields other than computer science are quite knowledgeable in data science, and I actually feel the growing popularity of data science in recent years.” Kim joined LLNL in 2013 after earning his Ph.D. in Computer Science from UC Davis in 2012.