July 12, 2022

Open Data Initiative Adds X-Ray CT Dataset for Additive Manufacturing

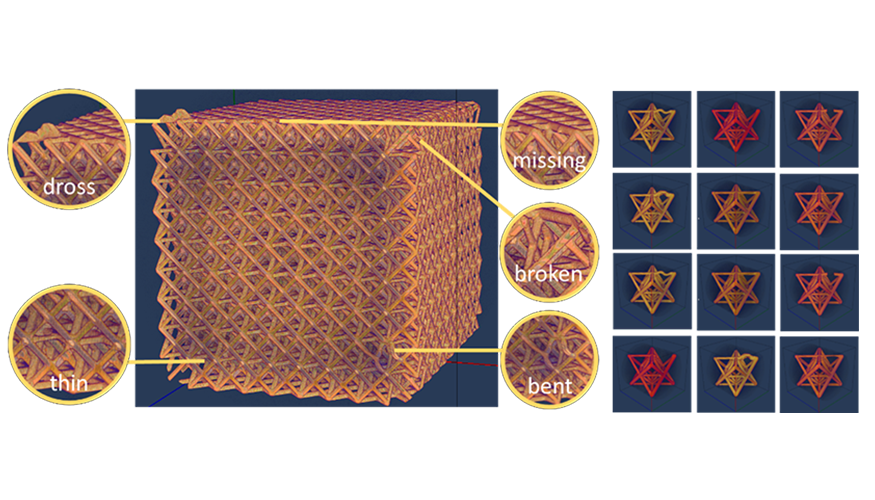

The DSI’s Open Data Initiative (ODI) recently added a new project to its catalog: X-Ray CT Data of Additively Manufactured Octet Lattice Structures. Computed tomography (CT) is a common imaging modality used at LLNL for non-destructive evaluation in a wide range of applications. For example, CT imaging can highlight defects in additively manufactured (AM) structures, which aids in fine-tuning subsequent iterations of development. This new addition to the ODI catalog consists of seven datasets of simulated x-ray CT scans showing AM lattice structures with common defects. Each dataset contains an input file in *.obj format and an output file in *.npy format. Five types of defects were manually inserted: bent strut, broken strut, missing strut, thin strut, and dross defect. The output files are simulations created using Livermore Tomography Tools (LTT), a software package that includes all aspects of CT modeling, simulation, reconstruction, and analysis algorithms based on the latest research in the field.

Like many ODI datasets, this 4.89-gigabyte CT data is also available in the UC San Diego library’s digital collection. The data was prepared by LLNL researchers Haichao Miao, Andrew Townsend, Kyle Champley, Joseph Tringe, and Peer-Timo Bremer along with University of Utah collaborators Pavol Klacansky, Attila Gyulassy, and Valerio Pascucci. The data was collected during Tringe’s Laboratory Directed Research and Development Strategic Initiative project (20-SI-001). “The defect characterization of AM lattice structures is very challenging due to their high geometric complexity. These simulated CT scans accompanied by computer-aided design models and ground truth information of the defects help with validating novel approaches,” states Miao.
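For readers who want to explore the *.npy output files, the sketch below shows one way to open such a file with NumPy without reading it all into memory. It is only an illustration: the file name, the array shape, and the assumption that the data is stored as a 3D volume are hypothetical, and a small random array stands in for the real ODI data.

```python
import os
import tempfile

import numpy as np


def summarize_volume(path):
    """Open a .npy volume lazily and return basic facts about it."""
    # mmap_mode="r" maps the file read-only instead of loading it all into RAM,
    # which helps when a file is several gigabytes in size.
    volume = np.load(path, mmap_mode="r")
    middle = volume[volume.shape[0] // 2]  # one 2D slice through the center
    return {
        "shape": volume.shape,
        "dtype": str(volume.dtype),
        "middle_slice_mean": float(middle.mean()),
    }


# Stand-in data: a small random 3D array, NOT the real ODI files.
# The 3D layout and the file name below are assumptions for illustration.
rng = np.random.default_rng(0)
fake_volume = rng.random((8, 16, 16), dtype=np.float32)

with tempfile.TemporaryDirectory() as tmp:
    fake_path = os.path.join(tmp, "example_volume.npy")
    np.save(fake_path, fake_volume)
    summary = summarize_volume(fake_path)

print(summary)
```

To try this on a downloaded file, pass its path to `summarize_volume` and check the reported shape before assuming any particular axis order.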

New Research in Tropospheric Climate Variability

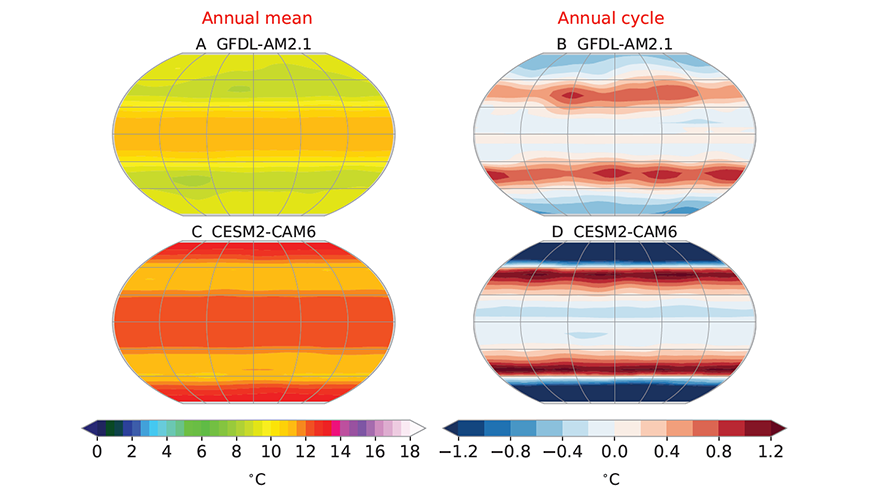

Climate change detection and attribution studies seek to identify human and natural influences on Earth’s climate. Research has found anthropogenic fingerprints (i.e., evidence of climate change caused by human activity) in the troposphere, the first 10 kilometers above the Earth’s surface. In a recent Journal of Climate paper, LLNL researchers investigated whether such fingerprints can be observed in climate model simulations and satellite data of the troposphere’s seasonal cycle changes.

Using five climate models, the team examined large ensembles of mid- to upper tropospheric temperature simulations and compared the data with observed variability spectra. The results are significant: Fingerprints are “robustly identifiable” in 239 of 240 large ensembles, and any influence from real-world multidecadal internal variability can be ruled out because of pattern dissimilarity. “This research confirms the strategic role of large initial condition ensembles (LEs) as a virtual laboratory for testing important hypotheses in climate science. Using diverse LEs, we sampled a wide range of equilibrium climate sensitivity, multi-decadal internal variability sequences, and anthropogenic aerosol forcing, and we still obtained a remarkably robust detection of model seasonal cycle fingerprints throughout our sampling space,” says co-author and LLNL climate scientist Giuliana Pallotta.

Other LLNL co-authors are Stephen Po-Chedley, Céline Bonfils, Mark Zelinka, and retired climate scientist Benjamin Santer. Collaborators hail from multiple universities and international organizations. (Image at left: Tropospheric temperature changes in simulations of an aquaplanet, an idealized, completely water-covered Earth. This type of modeling acts as a control for the large ensemble data.)

WiDS Workshop Features LLNL Data Scientist

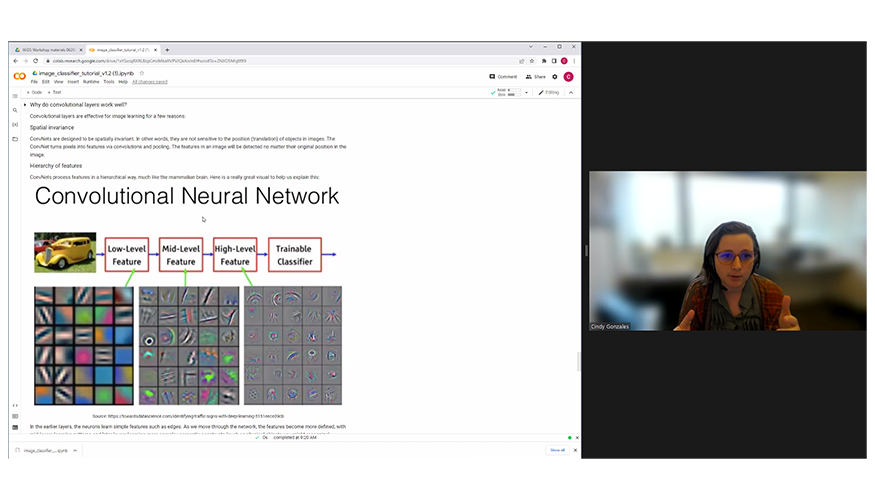

In addition to its annual conference held every March, the global Women in Data Science (WiDS) organization hosts workshops and other activities year-round to inspire and educate data scientists worldwide, regardless of gender, and to support women in the field. On June 29, LLNL’s Cindy Gonzales led a WiDS Workshop titled “Introduction to Deep Learning for Image Classification.” She specializes in object detection in unconventional types of imagery (e.g., radar, overhead) for LLNL’s Global Security Computing Applications Division.

Deep learning (DL) is an effective method for modeling image classification. For example, Gonzales described the steps of processing raw images as an array or tensor, which is then run through a DL model. The model can be trained to make a decision based on probabilities (i.e., a label for what the image shows). A more complex process can include backpropagation and gradient descent to update the model with each iteration.
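The idea of turning pixels into a probability and then nudging the model with gradient descent can be sketched in a few lines of plain Python. This is not code from the workshop: it uses a single-layer logistic regression model in place of a deep network, and the tiny 2x2 "images", learning rate, and epoch count are all made up for illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, pixels):
    """Probability that a flattened image belongs to class 1 (bright)."""
    z = sum(w * p for w, p in zip(weights, pixels)) + bias
    return sigmoid(z)


def train(images, labels, lr=0.5, epochs=200):
    """Fit weights and bias with full-batch gradient descent."""
    weights = [0.0] * len(images[0])
    bias = 0.0
    n = len(images)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for pixels, y in zip(images, labels):
            # For cross-entropy loss, the gradient w.r.t. z is (prediction - label).
            error = predict_proba(weights, bias, pixels) - y
            for i, p in enumerate(pixels):
                grad_w[i] += error * p
            grad_b += error
        # Step against the average gradient: one update per iteration.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical toy data: 2x2 images flattened to 4 pixel values in [0, 1].
random.seed(0)
bright = [[random.uniform(0.7, 1.0) for _ in range(4)] for _ in range(20)]
dark = [[random.uniform(0.0, 0.3) for _ in range(4)] for _ in range(20)]
images = bright + dark
labels = [1] * 20 + [0] * 20

weights, bias = train(images, labels)
p_bright = predict_proba(weights, bias, [0.9, 0.9, 0.9, 0.9])
p_dark = predict_proba(weights, bias, [0.1, 0.1, 0.1, 0.1])
print(f"P(bright | bright image) = {p_bright:.3f}")
print(f"P(bright | dark image)   = {p_dark:.3f}")
```

A deep network applies the same loop to many stacked layers, with backpropagation supplying the gradient for each layer's weights.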

Gonzales laid the foundation to understand the workflow of training a model using a Jupyter Notebook, then demonstrated this workflow: load an image dataset, build the convolutional neural network, create an objective function and optimizer, train the image classifier in PyTorch, and evaluate the results. Throughout the presentation, she explained different types of neural networks, layers within those networks, image color bands, logistic regression, and other concepts for attendees who were new to DL and image classification.
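The five workflow steps can be sketched in PyTorch as follows. This is a minimal illustration rather than the notebook from the workshop: the synthetic 8x8 grayscale images, the network layout, and the hyperparameters are all assumptions chosen to keep the example small and self-contained.

```python
import torch
from torch import nn

torch.manual_seed(0)


# 1. Load an image dataset. Synthetic stand-in: 8x8 grayscale images in
#    which class 1 is brighter on average than class 0.
def make_dataset(n_per_class):
    dark = torch.randn(n_per_class, 1, 8, 8) - 1.0
    bright = torch.randn(n_per_class, 1, 8, 8) + 1.0
    images = torch.cat([dark, bright])
    labels = torch.cat([torch.zeros(n_per_class), torch.ones(n_per_class)]).long()
    return images, labels


train_x, train_y = make_dataset(64)
test_x, test_y = make_dataset(32)

# 2. Build the convolutional neural network.
model = nn.Sequential(
    nn.Conv2d(1, 4, kernel_size=3, padding=1),  # 1 color band in, 4 feature maps out
    nn.ReLU(),
    nn.MaxPool2d(2),                            # 8x8 -> 4x4
    nn.Flatten(),
    nn.Linear(4 * 4 * 4, 2),                    # one score per class
)

# 3. Create an objective function and optimizer.
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

# 4. Train the image classifier (full-batch updates for simplicity).
losses = []
for epoch in range(30):
    optimizer.zero_grad()
    loss = loss_fn(model(train_x), train_y)
    loss.backward()   # backpropagation computes the gradients
    optimizer.step()  # the optimizer updates the weights
    losses.append(loss.item())

# 5. Evaluate the results on held-out images.
model.eval()
with torch.no_grad():
    predictions = model(test_x).argmax(dim=1)
    accuracy = (predictions == test_y).float().mean().item()

print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}, accuracy {accuracy:.2f}")
```

With a real dataset, step 1 would typically use a `Dataset` and `DataLoader` to feed mini-batches, and color images would use three input channels instead of one.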

“I am very humbled to have been selected as a presenter for the WiDS Workshops. Hosting workshops for those in different domains of data science to come together and learn about a new topic is a great way to pass along knowledge and meet others in the data science field,” Gonzales said. Watch the YouTube recording of her presentation, and learn more about LLNL’s involvement in WiDS.

Assured and Robust…or Bust

The consequences of a machine learning (ML) error that presents irrelevant advertisements to a group of social media users may seem relatively minor. However, the opacity of ML systems, combined with the fact that they are nascent and imperfect, makes trusting their accuracy difficult in mission-critical situations, such as recognizing life-or-death risks to military personnel or advancing materials science for the Lab’s stockpile stewardship mission, inertial confinement fusion experiments, radiation detectors, and advanced sensors. While opacity remains a challenge, LLNL’s ML experts aim to provide assurances on performance and enable trust in ML technology through innovative validation and verification techniques.

The latest issue of the Lab’s Science & Technology Review magazine highlights this research. “The lack of trust and transparency in current ML algorithms prevents more widespread use in important applications such as robotic autonomous systems, in which artificial intelligence algorithms enable networks to gather information and make decisions in dynamic environments without human intervention,” says LLNL computational engineer Ryan Goldhahn, who is featured in the article alongside Bhavya Kailkhura, James Diffenderfer, and Brian Bartoldson. “To be truly useful, ML algorithms must be robust, reliable, and able to run on small, low-power hardware that can perform dependably in the field.”

Webinar Highlights ATOM Consortium Progress

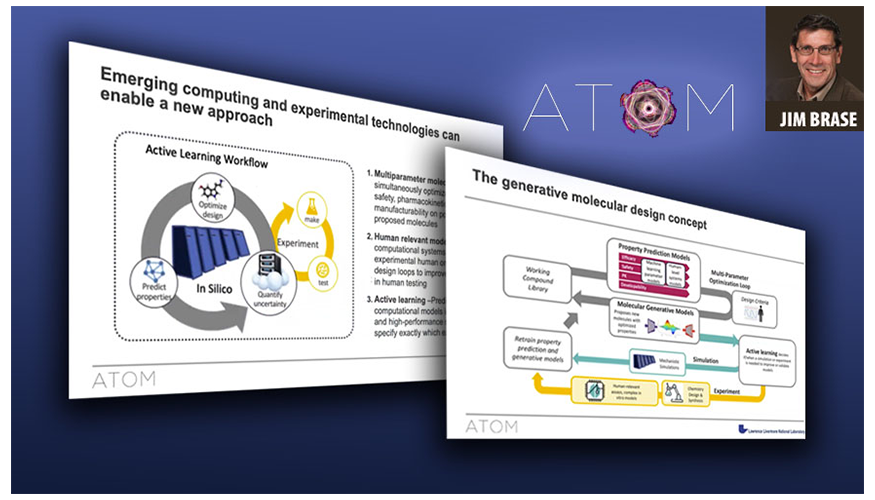

The private-public Accelerating Therapeutic Opportunities in Medicine (ATOM) consortium is showing “significant” progress in demonstrating that high-performance computing (HPC) and ML tools can speed up the drug discovery process, said Jim Brase, ATOM co-lead and LLNL’s deputy associate director for data science. The consortium currently boasts more than a dozen member organizations, including national laboratories, private industry, and universities.

ATOM sponsored Brase’s May 18 webinar, during which he discussed ATOM’s approach to multiparameter molecular design—an ML-backed generative loop that predicts properties of proposed drug molecules; screens them virtually for safety, pharmacokinetics, manufacturability, and efficacy; optimizes the designs; and uses computational models and experimental feedback from the synthesized compounds to improve the models. In addition to providing an overview of ATOM and its accomplishments to date, Brase also presented the technology used in the drug design loop, including the ATOM Modeling PipeLine (AMPL) framework, which he said has proven useful for integrating multiple data sets and training large populations of models. A full recap of the webinar is available at LLNL News.

Ribbon-Cutting for New Computing Facility Upgrades

On June 15, DOE Under Secretary for Nuclear Security and Administrator for the National Nuclear Security Administration (NNSA) Jill Hruby dedicated two critical infrastructure projects at LLNL. She attended ribbon-cutting ceremonies for the Exascale Computing Facility Modernization (ECFM) project and LLNL’s new Emergency Operations Center, both of which provide essential upgrades to Lab capabilities.

The utility-grade ECFM infrastructure project massively upgraded the power and water-cooling capacity of the adjacent Livermore Computing Center, preparing it to house next generation exascale-class supercomputers for NNSA, including the forthcoming El Capitan machine. Such computing systems will allow NNSA and LLNL’s Advanced Simulation and Computing program to meet the certification requirements of the Stockpile Stewardship Program and aid in future design efforts.

Hruby said, “Given the dependence of the NNSA mission on high performance computing (HPC), ECFM is a visible sign to the rest of the world of NNSA’s capability and intent to retain global leadership in HPC, which in turn contributes to our nation’s deterrent.” Hruby and other speakers acknowledged ECFM Project Manager Anna Maria Bailey and the teams involved in the endeavor from NNSA, the Livermore Field Office, and engineering and construction crews, crediting them for their ability to deliver on the project nine months ahead of schedule and $9 million under budget, pandemic-related delays notwithstanding.

Virtual Seminar Explores Differential Equations in Physics Modeling

The DSI’s June 27 seminar featured University of Florida assistant professor—and former LLNL intern—Dr. James Fairbanks, who presented “Diagrammatic Differential Equations in Physics Modeling and Simulation.” He described results from a recent paper on applying categories of diagrams for specifying multiphysics models for partial differential equation–based simulations. He also discussed the graphical formalism based on category theoretic diagrams and some applications to heat transfer, electromagnetism, and fluid dynamics.

Dr. Fairbanks studies mathematical modeling and scientific computing through the lens of abstract algebra and combinatorics and leads the AlgebraicJulia.org project. He has won both the Defense Advanced Research Projects Agency (DARPA) Young Faculty and Director’s awards supporting his work on applied category theory and scientific computing. Prior to joining the University of Florida, Dr. Fairbanks was a Senior Research Engineer at the Georgia Tech Research Institute, where he ran a portfolio of DARPA- and Office of Naval Research–sponsored research programs. A recording of the talk will be posted to the seminar series’ YouTube playlist.