April 29, 2022

Open Data Initiative Adds Neuroimaging Dataset

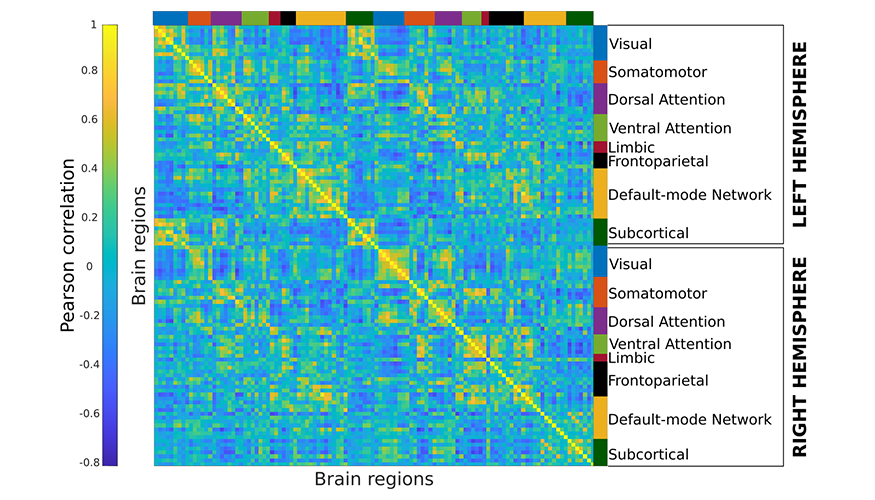

The DSI’s Open Data Initiative recently added a new project to its catalog: Derived Products from HCP-YA fMRI. The Human Connectome Project–Young Adult (HCP-YA) dataset includes multiple neuroimaging modalities from 1,200 healthy young adults. These modalities include functional magnetic resonance imaging (fMRI), which measures the blood oxygenation fluctuations that occur with brain activity.

The fMRI data were recorded in multiple sessions per subject, both at rest and during a set of tasks designed to evoke specific brain activity. Each fMRI run is a sequence of 3D volumes, and processing these large collections of data is computationally expensive. LLNL researchers used high-performance computing resources to process these time series and generate hierarchically parcellated connectomes. Using these data, LLNL and Purdue University researchers assessed the “brain fingerprint” gradients in young adults by developing an extension of the differential identifiability framework.

The work by Uttara Tipnis, Elizabeth Tran, and Alan Kaplan, along with collaborators from Purdue University, the University of Pennsylvania, the University of Geneva, and École Polytechnique Fédérale de Lausanne, is available online.
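For readers unfamiliar with differential identifiability, the base (non-extended) framework can be sketched as follows: correlate each subject's connectome from one session against every subject's connectome from a repeat session, then compare how strongly subjects match themselves versus others. This is a minimal illustration, not the LLNL/Purdue extension. The function names and the two-subject toy vectors are hypothetical, and real inputs would be the vectorized upper triangles of full connectome matrices.

```python
import math
import statistics


def upper_triangle(matrix):
    """Flatten the strictly upper triangle of a symmetric connectome matrix."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def identifiability_matrix(test, retest):
    """A[i][j] = correlation of subject i's test connectome with subject j's retest."""
    return [[pearson(t, r) for r in retest] for t in test]


def differential_identifiability(a):
    """I_diff = 100 * (I_self - I_others): mean diagonal minus mean off-diagonal."""
    n = len(a)
    i_self = statistics.fmean(a[i][i] for i in range(n))
    i_others = statistics.fmean(a[i][j] for i in range(n) for j in range(n) if i != j)
    return 100.0 * (i_self - i_others)


# Hypothetical toy data: each row is an already-vectorized connectome (edge weights).
test = [
    [0.9, 0.1, 0.5, 0.3, 0.7, 0.2],        # subject 1, session 1
    [0.2, 0.8, 0.1, 0.6, 0.3, 0.9],        # subject 2, session 1
]
retest = [
    [0.85, 0.15, 0.5, 0.35, 0.65, 0.2],    # subject 1, session 2
    [0.25, 0.75, 0.15, 0.6, 0.3, 0.85],    # subject 2, session 2
]

A = identifiability_matrix(test, retest)
print(round(differential_identifiability(A), 1))
```

A positive I_diff means subjects resemble their own repeat scans more than they resemble other subjects, which is the sense in which a connectome acts as a "fingerprint."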

LLNL Data Scientist Mentors Brigham Young University Students

In October 2021, LLNL data scientist Cindy Gonzales began mentoring Brigham Young University (BYU) undergraduates enrolled in a Data Science Capstone course. The two-semester course is designed for juniors and seniors seeking a data science emphasis in their computer science major. With Gonzales’s guidance, the students built a framework to perform automatic seizure detection from electroencephalogram (EEG) data. Their autoencoder model with a classification head detected seizures with 94% accuracy on the test data.

Although she hadn’t mentored students before, Gonzales submitted a project proposal to BYU in September. She chose the CHB-MIT (Children’s Hospital Boston and Massachusetts Institute of Technology) dataset of pediatric seizure patients’ EEG signals because of her son’s seizure disorder, which began when he was just eight months old. “Getting him diagnosed was difficult,” Gonzales recalls. “At first, doctors didn’t find any unusual activity in his EEG and therefore didn’t prescribe medications.” When her son was a year old, the family ended up in the emergency room after he suffered a sustained seizure. Finally, after further EEG testing, he received appropriate medical treatment.

Gonzales continues, “The neurologist and epileptologist mentioned that an estimated 30% of patients with abnormal brain activity don’t actually have an abnormal EEG during their first monitoring session. I kept thinking there must be a better, more automated approach to detecting seizures and abnormal brain activity.”

The BYU students connected with Gonzales’s passion for the project. She states, “All three were highly motivated and eager to produce a solution. Some had personal experiences with epilepsy and other seizure disorders.” Gonzales guided them through eight weeks of deep learning techniques, after which the students decided to implement, and improve, a published solution. The team also met a neuroscience researcher with experience as an EEG technician.

“The students blossomed and exceeded my expectations. The excitement they felt as they tried new things energized me and inspired me to bring my best to work every day,” Gonzales notes. This month, the students presented their work to peers, mentors, and a panel of judges. Gonzales’s group placed third out of 20 student groups, winning a cash prize. They also released their code on GitHub (LLNL-Seizure-Detection-Capstone) and documented their work in a paper they plan to submit to a workshop. (Image, left to right: BYU student Sara Berrios, Gonzales, student Jess Hamblin, student Benjamin Bischoff, and professor/advisor Christophe Giraud-Carrier.)

DOE Data Days Are Back

The Department of Energy’s Data Days workshop, also known as D3, returns June 1–3 (Wednesday through Friday). The hybrid event, hosted by LLNL, will run from 8:00 a.m. to 3:00 p.m. Pacific Time on the first two days, followed by a half day on Friday. All DOE-affiliated staff are welcome to attend either onsite at LLNL or via WebEx. Registration will open on May 2.

The event’s presentations and activities will focus on four topics in the context of DOE missions and the national labs:

- Data-intensive computing

- Cloud and hybrid data management

- Data access, sharing, and sensitivity

- Data policy and ethics

D3 launched in 2019 (see photo at left). This year’s LLNL organizers are Rebecca Rodd, Daniel Gardner, and Amanda Price. Other co-organizers hail from Argonne, Brookhaven, Idaho, Los Alamos, Oak Ridge, Pacific Northwest, and Sandia national laboratories, as well as the National Energy Technology Laboratory and the National Renewable Energy Laboratory.

New Research in Multispectral Imaging Performance

Spectral imaging, which captures wavelength bands in the electromagnetic spectrum, is useful for identifying a material’s “fingerprint.” Remote sensing systems, such as those aboard satellites, are often designed to use multiple spectral bands (multispectral imaging). Selecting the multispectral band passes has traditionally been as much an art as a science. A recent strategy for finding optimal multispectral band passes is to use a hyperspectral image (>20 contiguous spectral bands) as a reference, then apply an optimization algorithm that selects a smaller number of bands from the hyperspectral image to simulate a multispectral imager. The optimization objective drives band selection so that material class separability is maximized.

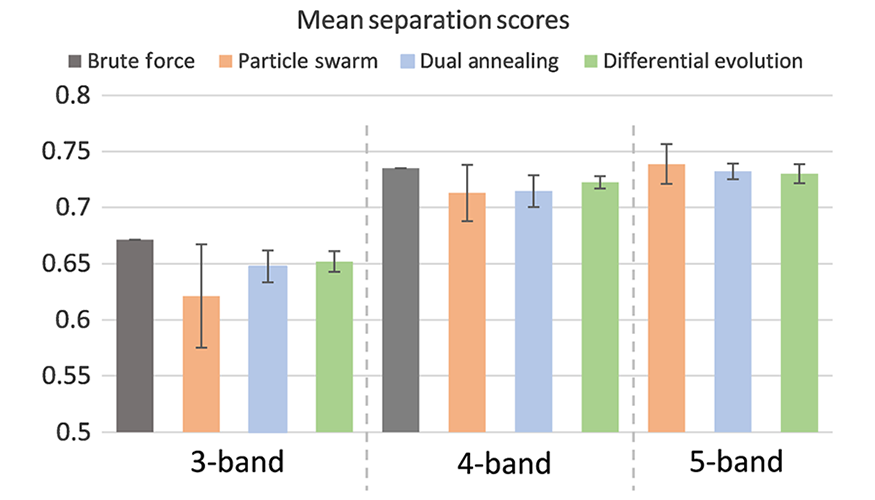

LLNL researchers Michael Zelinski, Andrew Mastin, Vic Castillo, and Brian Yoxall have authored a new paper in the Journal of Applied Remote Sensing. The team evaluated the performance of three numerical optimization algorithms (particle swarm, dual annealing, and differential evolution) against a brute-force computational search of all possible spectral band combinations. Target materials were calcite, gypsum, and limestone. The three algorithms performed similarly, though dual annealing performed best (i.e., it produced the highest and most consistent separation scores). (Image at left: Comparison of mean separation scores for the three optimization algorithms and brute force approach.)
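To make the band-selection setup concrete, here is a hypothetical sketch that picks k of n hyperspectral bands to maximize class separability, once by brute force and once by a stochastic search. Several assumptions are loud here: the spectra are made-up numbers, the Fisher-like `separation_score` is an illustrative stand-in rather than the score used in the paper, and `anneal` is plain simulated annealing with single-band swaps, not SciPy's dual annealing, particle swarm, or differential evolution.

```python
import itertools
import math
import random
import statistics

# Hypothetical toy data: class -> sample spectra (one reflectance value per band).
SPECTRA = {
    "class_a": [[0.30, 0.42, 0.55, 0.61, 0.40, 0.35, 0.20, 0.18],
                [0.31, 0.40, 0.57, 0.60, 0.41, 0.36, 0.22, 0.17],
                [0.29, 0.43, 0.54, 0.62, 0.39, 0.34, 0.21, 0.19]],
    "class_b": [[0.32, 0.41, 0.50, 0.48, 0.45, 0.50, 0.30, 0.18],
                [0.30, 0.42, 0.52, 0.47, 0.46, 0.49, 0.31, 0.20],
                [0.31, 0.40, 0.51, 0.49, 0.44, 0.51, 0.29, 0.19]],
    "class_c": [[0.31, 0.39, 0.56, 0.58, 0.52, 0.37, 0.35, 0.25],
                [0.33, 0.41, 0.55, 0.57, 0.53, 0.38, 0.36, 0.24],
                [0.30, 0.40, 0.57, 0.59, 0.51, 0.36, 0.34, 0.26]],
}


def separation_score(bands, spectra=SPECTRA):
    """Illustrative Fisher-like ratio on the selected bands: mean squared distance
    between class means divided by mean within-class variance."""
    means, within = {}, []
    for name, samples in spectra.items():
        cols = [[s[b] for s in samples] for b in bands]
        means[name] = [statistics.fmean(c) for c in cols]
        within.extend(statistics.pvariance(c) for c in cols)
    between = [sum((x - y) ** 2 for x, y in zip(means[p], means[q]))
               for p, q in itertools.combinations(means, 2)]
    return statistics.fmean(between) / (statistics.fmean(within) + 1e-12)


def brute_force(n_bands, k, score=separation_score):
    """Exhaustively score every k-band combination and return the best one."""
    return max(itertools.combinations(range(n_bands), k), key=score)


def anneal(n_bands, k, score=separation_score, steps=500, t0=1.0, cooling=0.99, seed=0):
    """Simulated annealing over k-band subsets (requires k < n_bands)."""
    rng = random.Random(seed)
    current = sorted(rng.sample(range(n_bands), k))
    cur_score = score(current)
    best, best_score = list(current), cur_score
    t = t0
    for _ in range(steps):
        # Propose a neighbor: swap one selected band for one unselected band.
        candidate = list(current)
        unused = [b for b in range(n_bands) if b not in current]
        candidate[rng.randrange(k)] = rng.choice(unused)
        candidate.sort()
        cand_score = score(candidate)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if cand_score >= cur_score or rng.random() < math.exp((cand_score - cur_score) / t):
            current, cur_score = candidate, cand_score
            if cur_score > best_score:
                best, best_score = list(current), cur_score
        t *= cooling  # geometric cooling schedule
    return tuple(best), best_score


bf = brute_force(8, 3)
sa, sa_score = anneal(8, 3)
print("brute force:", bf, round(separation_score(bf), 2))
print("annealing:  ", sa, round(sa_score, 2))
```

Brute force is guaranteed optimal but scores all C(n, k) subsets, which grows quickly with a full hyperspectral cube; stochastic optimizers trade that guarantee for far fewer evaluations, which is why comparing their scores against the exhaustive baseline is informative.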

Special Recognition

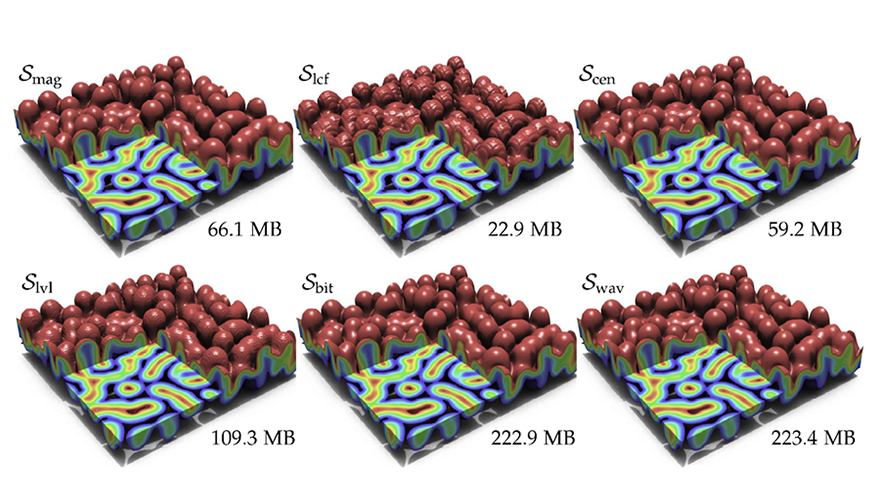

Three LLNL computer scientists and University of Utah colleagues have won the 2022 PacificVis Best Paper award. Harsh Bhatia, Peer-Timo Bremer, and Peter Lindstrom co-authored “AMM: Adaptive Multilinear Meshes,” which introduces a resolution-precision-adaptive representation for volumetric data. A future edition of the DSI newsletter will feature more on this award. (Image at left: Visual comparison of reconstructed data with approximate memory footprint of the respective meshes.)

LLNL researchers Abdul Awwal and Michael Zelinski served as guest editors of a special edition of Optica on “Artificial Intelligence and Machine Learning in Optical Information Processing.” Volume 61, issue 7 of the journal was published in March and includes topics such as image recognition, signal and image processing, machine inspection/vision, optical sensing, and interferometry.

Virtual Seminar Demonstrates Universal Law of Robustness

The DSI’s March 29 seminar featured Stanford University PhD student Mark Sellke, who presented “Universal Law of Robustness via Isoperimetry.” A puzzling phenomenon in deep learning is that models are trained with many more parameters than classical theory would suggest. In his talk, Sellke proposed a theoretical explanation for this phenomenon and showed that, for a broad class of data distributions and model classes, over-parametrization is necessary if one wants to interpolate the data smoothly. The law applies to any smoothly parametrized function class with polynomial-size weights and any covariate distribution that satisfies isoperimetry. Sellke also interpreted his team’s result as an improved generalization bound for model classes consisting of smooth functions.
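Stated informally, with constants and logarithmic factors omitted (this is a paraphrase of the published result, not a quote from the talk), the law says that if a model f with p parameters fits n noisy training points in d dimensions below the noise level, its Lipschitz constant must satisfy:

$$
\operatorname{Lip}(f) \;\gtrsim\; \sqrt{\frac{n\,d}{p}}
$$

Smooth interpolation, meaning Lip(f) = O(1), therefore requires p ≳ nd: roughly d times more parameters than the p ≈ n needed merely to interpolate the data.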

Sellke graduated from MIT in 2017 and received a Master of Advanced Study with distinction from the University of Cambridge in 2018, both in mathematics. Sellke received the best paper and best student paper awards at SODA 2020 and the outstanding paper award at NeurIPS 2021. He has broad research interests in probability, statistics, optimization, and machine learning. A recording of Sellke’s talk will be posted to the seminar series’ YouTube playlist.

Meet an LLNL Data Scientist

With a PhD in Mathematics from the University of Illinois at Urbana-Champaign, Sarah Mackay enjoys using mathematical techniques to make inferences about real-world systems. She draws on her experience in combinatorial optimization, network science, and statistics to perform risk analyses for LLNL’s Cyber and Infrastructure Resilience program. Mackay designs and implements algorithms to secure infrastructure such as power grids, gas pipelines, and communication systems. “This work involves making assumptions about the structure of the system we’re studying. It can be challenging to know if the assumptions are valid and, thus, if we can trust our conclusions,” she explains. Mackay, who also coordinates the DSI’s virtual seminar series, thrives in the Lab’s culture of interdisciplinary teamwork. She states, “The set of problems one can tackle becomes so much larger when the pool of expertise grows.”

Data Science Challenge Kicks Off Soon

For the fourth consecutive year, the DSI is teaming up with the University of California’s Merced campus to offer an intensive Data Science Challenge (DSC) internship in May. For three weeks, students will work on an important data science problem while learning from experts, networking with peers, and developing skills for future internships. This year’s DSC focuses on drug discovery for COVID-19. Students will work with a massive database of virtual molecule screening results, chemical and protein structures, and designed synthetic antibodies to identify drug compounds that could be used to create medicines that prevent and treat COVID-19 infections. Additionally, building on last year’s success, the second year of the DSC with UC Riverside students will begin in June. The 2022 DSC is led by Brian Gallagher, with mentors Hyojin Kim, Ryan Dana, and Cindy Gonzales.