Nov. 10, 2021

Counterfactual Generators for Deep Models

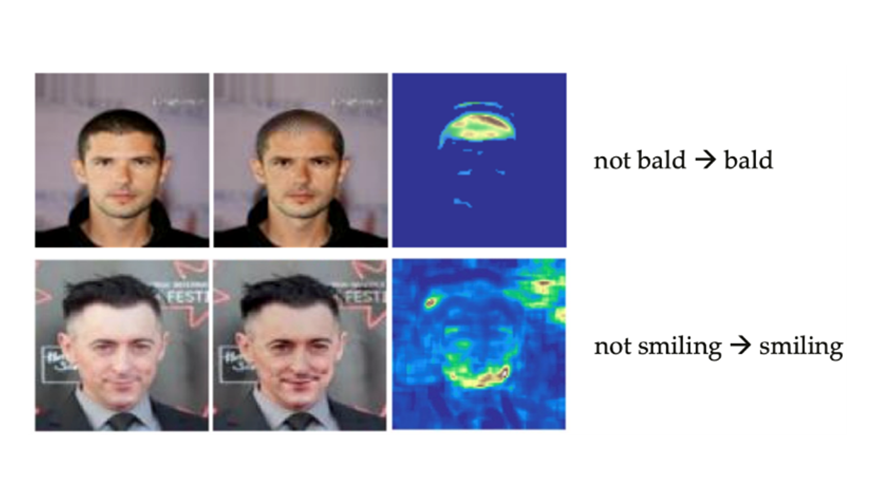

LLNL’s research into machine learning (ML) interpretability continues with an investigation of counterfactual explanations—those that synthesize a hypothetical result based on small, interpretable changes to a given query image. Existing approaches rely extensively on pre-trained generative models or access to training data to create plausible counterfactuals that support users’ hypotheses.

LLNL’s Jayaraman Thiagarajan and colleagues from Arizona State University, Stanford University, and IBM Research have developed a technique called DISC—Deep Inversion for Synthesizing Counterfactuals—that enables creation of counterfactuals for ML models without having access to source data or pre-trained models. The team’s paper (preprint), titled “Designing Counterfactual Generators using Deep Model Inversion,” is one of multiple LLNL papers accepted to the upcoming Conference on Neural Information Processing Systems (NeurIPS).

DISC combines strong image priors (i.e., the structure of the neural network itself characterizes the image space), uncertainty estimates, and a novel optimization strategy. The team’s technique explains decision boundaries and is robust to distribution shifts between training and test data. DISC can be deployed even in scenarios with data privacy requirements, such as healthcare, and is a valuable tool for introspecting complex interactions between different data characteristics and detecting shortcuts in AI models. (Image at left: Without requiring any access to data or pre-trained generative models, DISC performs inversion of a deep neural network to synthesize counterfactual explanations.)
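For readers new to counterfactual explanations, the basic idea can be illustrated with a toy example. The sketch below is not DISC: it leaves out the image priors and uncertainty estimates described above and swaps the deep network for a hypothetical two-feature logistic classifier with made-up weights. It shows only the general pattern of nudging a query toward a different prediction while a proximity penalty keeps the change small.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, w, b):
    """Probability of class 1 under a toy logistic classifier (an assumption)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def counterfactual(x0, w, b, target=1.0, lam=0.1, lr=0.5, steps=500):
    """Gradient descent on the input, not on the model weights.

    Minimizes cross-entropy toward `target` plus lam * ||x - x0||^2,
    so the result stays close to the original query x0.
    """
    x = list(x0)
    for _ in range(steps):
        p = predict(x, w, b)
        x = [
            xi - lr * ((p - target) * wi + 2.0 * lam * (xi - x0i))
            for xi, wi, x0i in zip(x, w, x0)
        ]
    return x

# Hypothetical model parameters and query, chosen only for illustration.
w, b = [2.0, -1.0], -0.5
query = [0.0, 1.0]

cf = counterfactual(query, w, b)
p_query = predict(query, w, b)   # below 0.5: the query is assigned class 0
p_cf = predict(cf, w, b)         # above 0.5: the counterfactual is assigned class 1
change = [c - q for c, q in zip(cf, query)]
```

Inspecting `change` shows which features had to move, and in which direction, to flip the prediction. That is the kind of insight a counterfactual explanation is meant to provide.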

Data Science Challenge Welcomes UC Riverside

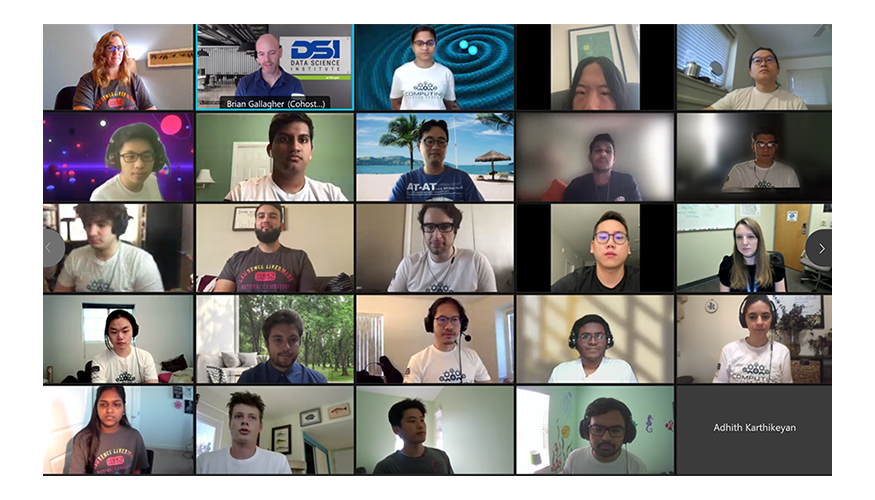

Together with LLNL’s Center for Applied Scientific Computing, the DSI welcomed a new academic partner to the 2021 Data Science Challenge (DSC) internship program: the University of California (UC) Riverside campus. The intensive program has run for three years with UC Merced, and it tasks undergraduate and graduate students with addressing a real-world scientific problem using data science techniques such as ML and statistical analysis.

This year’s Challenge focused on detecting near-Earth objects, such as asteroids, that could threaten global security. UC Riverside’s DSC followed the same three-week virtual format as UC Merced’s DSC earlier in the summer, including technical seminars, virtual tours of Lab facilities, one-on-one mentoring, and team-based activities.

DSC director Brian Gallagher joined forces with UC Riverside associate professor Vagelis Papalexakis to guide the 23 students from August 30 to September 17. The two had met at data science conferences and via overlapping collaborations. “When Brian reached out to me about organizing the Challenge with UC Riverside, I was happy to get on board,” Papalexakis said. “It was the perfect mix of working on an interesting project with him while building a program in the service of our students.”

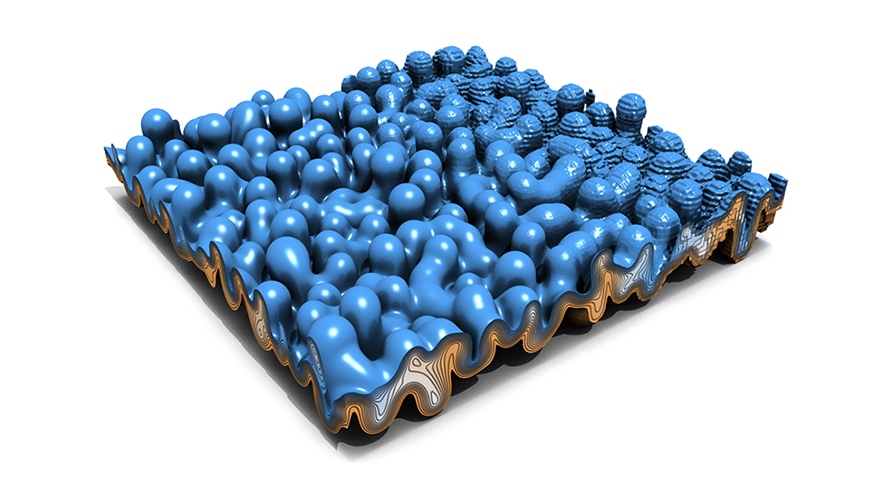

Stockpile Stewardship Milestone Reached with Data Science & HPC

The Primary Machine Learning project (PriMaL) in LLNL’s Weapon Physics and Design (WPD) Certification Methodologies group successfully completed an L2 Milestone entitled “Application of Machine Learning to Heterogeneous Hydrodynamic Experimental Data.” This milestone demonstrated an early use of an autoencoder-based capability that draws on a greater subset of both scalar and image hydrodynamic test data per experiment than previously used to constrain nuclear performance parameters. This approach built on methods developed through the Inertial Confinement Fusion Machine Learning Strategic Initiative and earlier milestones by the Boost Science group in WPD. The team ran tens of thousands of calculations on both LLNL and Los Alamos National Lab high-performance computing hardware (such as the Magma supercomputer, shown at left) and further matured large-scale workflow methods, deep neural networks, Markov chain Monte Carlo statistical tools, and experimental analysis pipelines. This work will continue in the coming year as the team pursues further verification and validation, refines analysis methods, and extends the approach to time-series data and additional experiments.

DOE Funds Project for Data Reduction in Scientific Applications

LLNL is among five national labs to receive Department of Energy (DOE) awards for research aimed at shrinking the amount of data needed to advance scientific discovery. A total of $13.7 million is allocated for data reduction in scientific applications, where massive datasets produced by high-fidelity simulations and upgraded supercomputers are beginning to exceed scientists’ abilities to effectively collect, store, and analyze them.

Under the project ComPRESS (Compression and Progressive Retrieval for Exascale Simulations and Sensors), LLNL scientists will seek better understanding of data-compression errors, develop models to increase trust in data compression for science, and design a new hardware and software framework to drastically improve performance of compressed data. The work will build on zfp, a Lab-developed, open-source, versatile high-speed data compressor capable of dramatically reducing the amount of data for storage or transfer.
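To make the notion of a data-compression error concrete, here is a minimal sketch of error-bounded lossy compression. It is not zfp's algorithm or API. It assumes a simple uniform quantizer with a hypothetical absolute tolerance `tol`, followed by Python's standard `zlib` for lossless packing, and the sample data is synthetic.

```python
import math
import struct
import zlib

def quantize(values, tol):
    """Map each value to the nearest multiple of 2*tol, stored as an integer code."""
    step = 2.0 * tol
    return [round(v / step) for v in values]

def reconstruct(codes, tol):
    """Recover approximate values from the integer codes."""
    step = 2.0 * tol
    return [c * step for c in codes]

tol = 1e-3                                        # assumed absolute error tolerance
data = [math.sin(0.1 * i) for i in range(100)]    # synthetic stand-in for simulation output

codes = quantize(data, tol)
approx = reconstruct(codes, tol)

# The largest reconstruction error stays within the tolerance (up to rounding).
max_err = max(abs(a - b) for a, b in zip(data, approx))

# Size comparison: raw 64-bit floats versus zlib-compressed 16-bit codes.
raw_bytes = struct.pack(f"<{len(data)}d", *data)
packed = zlib.compress(struct.pack(f"<{len(codes)}h", *codes))
```

Comparing `len(packed)` with `len(raw_bytes)` shows the storage saved, and `max_err` shows the accuracy given up in exchange. Understanding and bounding that trade-off is the kind of question the paragraph above describes.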

Virtual Seminar Focuses on Uncertainty Visualization

For the DSI’s September 15 virtual seminar, Professor Matthew Kay of Northwestern University presented “A Biased Tour of the Uncertainty Visualization Zoo.” He discussed ways to combine knowledge of visualization perception, uncertainty cognition, and task requirements to design visualizations that more effectively communicate uncertainty. His team works to systematically characterize the space of uncertainty visualization designs and develop ways to communicate uncertainty in the data analysis process. Kay’s current research is funded by multiple National Science Foundation awards, and he has received multiple best paper awards across human-computer interaction and information visualization venues. A recording of his talk will be posted to the seminar series’ YouTube playlist.

Recent Research

Accepted at the 2021 International Conference on Computer Vision (ICCV):

- Can shape structure features improve model robustness under diverse adversarial settings? – Bhavya Kailkhura with colleagues from Arizona State University; Carnegie Mellon University; University of California, San Diego; University of Illinois, Urbana-Champaign; University of Michigan, Ann Arbor; Nanyang Technological University; and NVIDIA

Accepted at the 2021 Conference on Neural Information Processing Systems (NeurIPS):

- Designing counterfactual generators using deep model inversion – Jayaraman Thiagarajan with colleagues from Arizona State University, Stanford University, and IBM Research

From LLNL’s physics-informed data-driven physical simulation team:

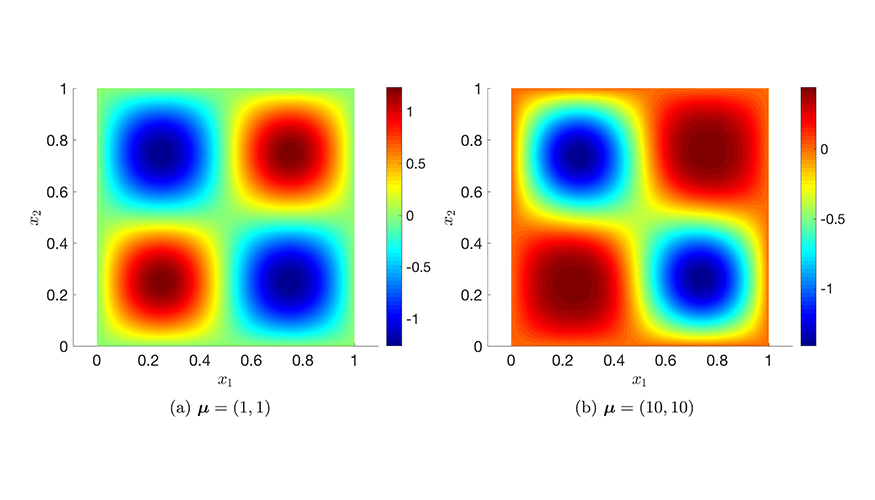

- Domain-decomposition least-squares Petrov-Galerkin (DD-LSPG) nonlinear model reduction, Computer Methods in Applied Mechanics and Engineering – Youngsoo Choi with colleagues from Sandia National Labs and University of Washington (Image at left: Full-order model solutions for finite element discretizations used in research on model-reduction methods.)

- A framework for data-driven solution and parameter estimation of PDEs using conditional generative adversarial networks (preprint) – Youngsoo Choi with colleagues from Cornell University, Los Alamos National Lab, and University of Hawaii

- Non-intrusive reduced order modeling of natural convection in porous media using convolutional autoencoders: comparison with linear subspace techniques (preprint) – Youngsoo Choi with colleagues from Cornell University, Los Alamos and Sandia National Labs, and Catholic University of the Sacred Heart

Meet an LLNL Data Scientist

As an applied statistician who enjoys tackling interesting problems, Kathleen Schmidt is never bored. “Nearly every field with a quantitative question can benefit from a statistician, so we get to explore a wide variety of science applications,” she says. Schmidt works primarily on two projects: one with messy physics reaction history data collected from older technology, and another where statistical modeling helps optimize materials strength experiments. Her recent publications include modeling for radiation source localization and material behavior in extreme conditions. During 2019–2021, Schmidt served as technical coordinator for the DSI’s seminars and transitioned the series to a virtual format in 2020. Schmidt earned a PhD in Applied Mathematics from North Carolina State University before joining the Lab as a postdoctoral researcher in 2016 and converting to full-time staff in 2018.