Oct. 26, 2022

Leadership Changes with New Fiscal Year

Coinciding with LLNL’s new fiscal year (FY23) beginning on October 1, a few personnel changes took effect for the DSI and Data Science Summer Institute (DSSI). Dan Merl, who leads the Machine Intelligence Group in LLNL’s Center for Applied Scientific Computing, joined the DSI Council to advise on computing and data initiatives. Goran Konjevod, from LLNL’s Computational Engineering Division, moved from his DSSI directorship to the Council to further promote education and workforce initiatives. Statistician Amanda Muyskens joined Nisha Mulakken in co-directing the DSSI. (Read more about Muyskens below.)

DSI director Michael Goldman states, “I would like to thank Goran and Dan Faissol for their respective commitments to the DSSI and Council. Their input during the DSI’s formative years has been extremely valuable. All of our activities require a team effort, and the future is sure to be exciting and productive with our remarkable team.”

(Image at left, top row: Council members Peer-Timo Bremer, Barry Chen, Goran Konjevod, and Michael Schneider. Bottom row: Council members Ana Kupresanin and Dan Merl; DSSI co-directors Nisha Mulakken and Amanda Muyskens.)

Explainable AI for Materials Science

Machine learning (ML) models are increasingly used in materials studies because of their flexibility and prediction accuracy. For instance, ML models have successfully predicted material and atomic properties at different scales. However, the most accurate ML models are usually difficult to explain. This lack of explainability has limited the usefulness of ML models in scientific tasks, and many materials scientists find black-box ML models difficult to trust. Remedies to this problem lie in explainable artificial intelligence (XAI), an emerging research field that addresses the explainability of complicated ML models like deep neural networks (DNNs). A new paper in npj Computational Materials provides an entry point to XAI for materials scientists.

Explainability involves defining how a model processes and represents data and ensuring that all of its components are understandable. The authors note that there is usually a tradeoff between an ML model’s complexity and explainability. While high complexity is usually necessary for ML models to achieve high accuracy on difficult problems, the same complexity also poses challenges in explainability. Using examples of DNNs and other types of models in real-world materials science applications, the research team walks readers through XAI concepts and techniques as well as model explanation methods (e.g., heat maps that can diagnose a model’s mistakes).

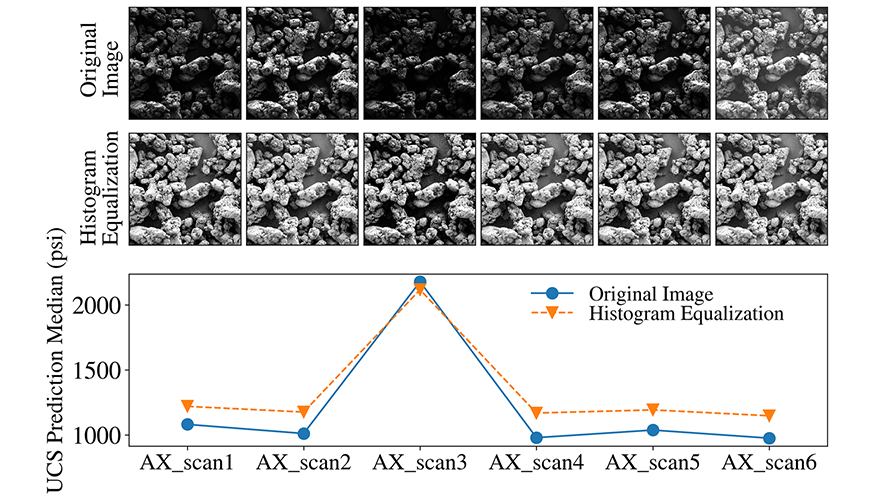

Co-authors are Xiaoting Zhong (now of Lam Research) and LLNL researchers Brian Gallagher, Shusen Liu, Bhavya Kailkhura, Anna Hiszpanski, and Yong Han. (Image at left shows an example of ML model mistakes caused by a data distribution shift. The task was to predict the mechanical strength of feedstock materials from scanning electron microscopy images taken at different microscope settings. ML model predictions should depend only on the microstructure content, not on the microscope settings. However, results showed that darker images were predicted to have higher strength values, even after image normalization.)
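Heat maps like the ones the paper discusses can be produced in several ways. One simple, model-agnostic technique is occlusion sensitivity: blank out one patch of the input at a time and record how much the model's output drops. The Python sketch below assumes a generic `model` callable that maps a 2D image array to a single score. The function name and parameters are hypothetical, and this is our illustration, not necessarily a method used in the paper.

```python
import numpy as np


def occlusion_heatmap(model, image: np.ndarray, patch: int = 4, baseline: float = 0.0) -> np.ndarray:
    """Occlusion-sensitivity heat map (illustrative sketch).

    `model` is any callable mapping a 2D array to a scalar score (an assumption).
    Each patch x patch region is replaced by `baseline`, and the drop in the
    model's score is written into that region of the returned heat map.
    """
    h, w = image.shape
    base_score = model(image)
    heat = np.zeros((h, w), dtype=float)
    for i in range(0, h, patch):
        for j in range(0, w, patch):
            occluded = image.astype(float)  # astype returns a fresh copy
            occluded[i:i + patch, j:j + patch] = baseline
            # A large positive value means this region mattered to the prediction.
            heat[i:i + patch, j:j + patch] = base_score - model(occluded)
    return heat


# Usage: a toy "model" that only looks at the top-left 4x4 patch.
toy_model = lambda img: float(img[:4, :4].sum())
heat = occlusion_heatmap(toy_model, np.ones((8, 8)), patch=4)
```

The toy model's heat map is nonzero only in the top-left patch, which is exactly the region it depends on. A model keyed to overall image brightness, like the one in the caption, would tend to light up nearly every patch, a hint that it is not attending to microstructure.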

Career Panel Showcases Advanced Manufacturing and Materials

The DSI wrapped up the 2022 career panel series on October 18 with a focus on advanced manufacturing and materials science. Advanced manufacturing researcher Brian Giera moderated the panel, which featured materials research engineer Dominique Porcincula, materials scientist Becca Walton, and staff scientist Nick Calta (clockwise from top right in this image). The panelists discussed their research projects—process-scale digital twins, process monitoring for metal additive manufacturing (AM), ultraviolet-assisted direct ink writing, and AM of scintillators—and shared stories about how they became interested in the field, what excites them about the technologies they work with and the problems they’re solving, and how students and early career staff can gain relevant experience.

Although AM and related research often generate large datasets, materials scientists and engineers don’t necessarily have a data science background. “You can overcome deficits in your education by building a network, working with interdisciplinary teams, and having a willingness to learn—to find out what you don’t know and get those answers,” Porcincula pointed out. Giera added, “When you combine a semi-mature field like AM with a hot new field like data science, you can come up with some really interesting paths.”

Data Compression Collaboration with the University of Utah

The University of Utah has announced the creation of a new oneAPI Center of Excellence focused on developing portable, scalable, and performant data compression techniques. The oneAPI Center will be based at the University of Utah’s Center for Extreme Data Management Analysis and Visualization (CEDMAV) and will involve the cooperation of LLNL’s Center for Applied Scientific Computing (CASC). The Center aims to accelerate ZFP compression software using oneAPI’s open, standards-based programming on multiple architectures to advance exascale computing. Working with the ZFP development team at LLNL, the oneAPI Center of Excellence will develop a SYCL-based portable, scalable, and performant ZFP backend that runs on accelerator architectures across different vendors.

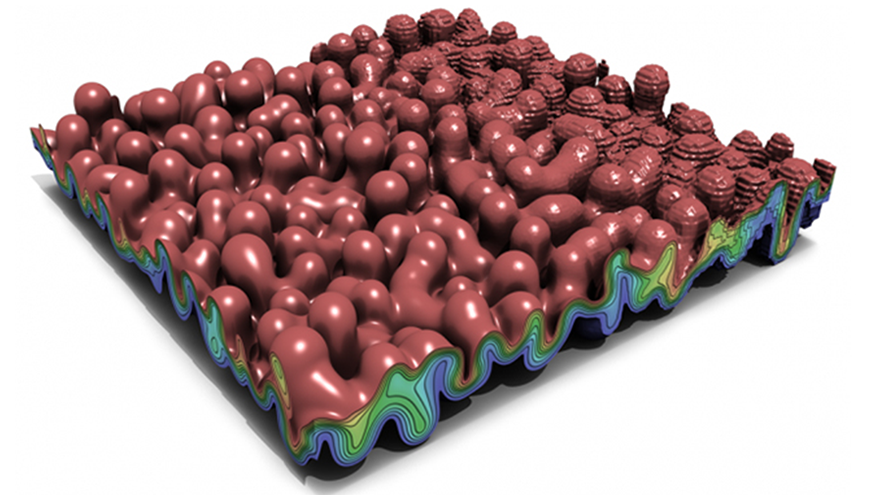

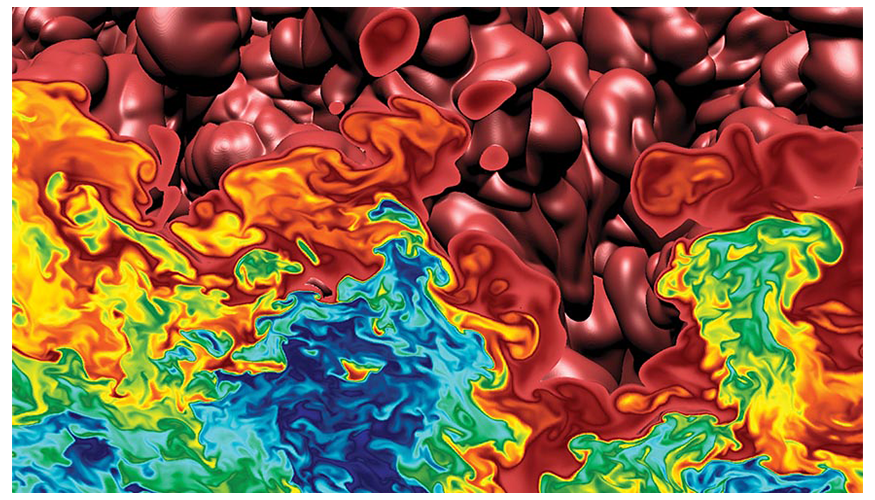

Developed by LLNL, ZFP is state-of-the-art software for lossless and error-controlled lossy compression of floating-point data, and it is becoming a de facto standard in the high-performance computing (HPC) community, with numerous science and engineering applications and users. ZFP (de)compression is particularly amenable to data-parallel execution because it decomposes data into small, independent blocks, and parallel backends have been developed for the OpenMP, CUDA, and HIP programming models. ZFP project lead Peter Lindstrom says, “I am excited about this opportunity with our long-standing collaborators at the University of Utah to extend the capabilities of our ZFP compressor to run efficiently on next-generation supercomputers.” (Image at left: This illustration shows ZFP’s ability to vary the compression ratio to any desired setting, from 10:1 on the left to 250:1 on the right, where the partitioning of the data set into small blocks is evident.)
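To make the block idea concrete, here is a toy Python sketch. It is not ZFP’s codec or its API. It pads a 2D array to a multiple of four, splits it into 4×4 tiles (ZFP works on blocks of 4^d values for d-dimensional data), and processes every tile independently in a thread pool. The per-tile step computes the binary exponent of the tile’s largest magnitude, loosely mirroring the common-exponent alignment ZFP applies to each block. The function names and the edge padding are our own simplifications.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np

BLOCK = 4  # ZFP uses blocks of 4^d values; 4x4 tiles for 2D data


def split_into_blocks(field: np.ndarray, block: int = BLOCK) -> list:
    """Pad a 2D array to a multiple of `block` and return its block x block tiles."""
    rows, cols = field.shape
    pad_r = (-rows) % block
    pad_c = (-cols) % block
    # Edge padding is a simplification for this sketch, not ZFP's exact rule.
    padded = np.pad(field, ((0, pad_r), (0, pad_c)), mode="edge")
    return [
        padded[i:i + block, j:j + block]
        for i in range(0, padded.shape[0], block)
        for j in range(0, padded.shape[1], block)
    ]


def block_exponent(tile: np.ndarray) -> int:
    """Hypothetical per-block step: binary exponent of the tile's largest magnitude."""
    max_abs = float(np.max(np.abs(tile)))
    if max_abs == 0.0:
        return 0
    _, exponent = np.frexp(max_abs)  # max_abs = mantissa * 2**exponent
    return int(exponent)


def process_blocks(field: np.ndarray) -> list:
    """Apply the per-block step to every tile in parallel; tiles never read each other."""
    tiles = split_into_blocks(field)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(block_exponent, tiles))


field = np.arange(36, dtype=float).reshape(6, 6)
exponents = process_blocks(field)
```

Because no tile reads another tile’s values, the map over tiles can be handed to any parallel backend. That independence is why the same decomposition carries over to OpenMP, CUDA, HIP, and, with this collaboration, SYCL.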

Conference Roundup

LLNL data science research has been accepted at several fall and winter conferences. Preprints are linked below where available.

September: Advanced Maui Optical and Space Surveillance Technologies (AMOS). AMOS is the premier technical conference in the nation devoted to space situational awareness.

- Light Curve Completion and Forecasting using Fast and Scalable Gaussian Processes (MuyGPs) – Imene Goumiri, Alec Dunton, Amanda Muyskens, Benjamin Priest, Robert Armstrong. Lead author Goumiri (pictured at left) won the best presentation award in ML for space situational awareness.

October/November: Asilomar Conference on Signals, Systems, and Computers. This annual event focuses on novel work in theoretical and applied signal processing.

- Bayesian Multiagent Active Sensing and Localization via Decentralized Posterior Sampling – Braden Soper, Priyadip Ray, Hao Chen, Jose Cadena, Ryan Goldhahn

November/December: 36th Conference on Neural Information Processing Systems (NeurIPS). NeurIPS brings together researchers in ML, neuroscience, statistics, computer vision, natural language processing, and other fields.

- Analyzing Data-Centric Properties for Contrastive Learning on Graphs – Mark Heimann, Jayaraman Thiagarajan, and University of Michigan colleagues

- Single Model Uncertainty Estimation via Stochastic Data Centering – Thiagarajan, Rushil Anirudh, Peer-Timo Bremer, and an Arizona State University colleague

December: 14th Asian Conference on Machine Learning (ACML). ACML encourages significant and novel research in ML and adjacent domains.

- Domain Alignment Meets Fully Test-Time Adaptation – Thiagarajan and Arizona State University colleagues

- Out of Distribution Detection via Neural Network Anchoring (see also the code released on GitHub) – Anirudh, Thiagarajan

January: Winter Conference on Applications of Computer Vision (WACV). WACV is an international conference hosted by the IEEE Computer Society.

- Improving Diversity with Adversarially Learned Transformations for Domain Generalization – Anirudh, Thiagarajan, Bhavya Kailkhura, and Arizona State University colleagues

The UC Livermore Collaboration Center Is Open!

The three-building Hertz Hall complex on the east side of LLNL has a new look and a new name. Following an extensive nine-month renovation, the buildings now form the University of California Livermore Collaboration Center (UCLCC) and will serve as a UC multi-campus hub to expand collaborations and partnerships with three national labs. More than 60 representatives from LLNL, UC, the NNSA, the city of Livermore, and the Livermore Lab Foundation, plus Los Alamos and Lawrence Berkeley national labs, attended a celebratory dedication on September 26.

The buildings in the UCLCC complex will retain their names as the Edward Teller Education Center, the Union building, and Hertz Hall. The buildings are owned by UC with a 20-year ground lease from NNSA. The UCLCC buildings include eight general offices, a general lab, a small conference room, two large classrooms, three medium classrooms, and two small classrooms. The arrangements for the facilities are flexible and can easily be reconfigured for various uses. The DSI and DSSI are working toward hosting events and programs at the new space, such as upcoming Data Science Challenges with UC students.

Resume Workshops for Data Science Challenge Students

The DSI hosted virtual resume workshops on September 14–15 for Data Science Challenge (DSC) students, advising them on how to craft a successful resume that showcases relevant experience and skills. Using real examples of LLNL job postings and submitted resumes, LLNL Computing workforce manager Marisol Gamboa explained how each section of a resume, and the order in which the sections appear, can best communicate a student’s background and knowledge. She dispelled the notion that an undergraduate or high school student does not have enough experience for an impactful resume. For instance, group projects can demonstrate teamwork, and coursework or presentation topics may pertain directly to a job’s qualifications.

The workshops also included breakout sessions where LLNL mentors reviewed students’ resumes and offered one-on-one recommendations for improvement. “Hiring managers will decide within the first 30 seconds if they want to read the rest of a resume,” says Gamboa, who co-directed the DSSI in 2018–2020 and organized the first DSC session in 2019. “Many students aren’t aware of basic resume structure, and many don’t know how to articulate their technical skills.”

Led by LLNL computer scientist Brian Gallagher, the DSC is a summer program for students from UC Merced and UC Riverside. Students in the 2022 session worked on drug discovery problems, using ML and other tools to find small molecule inhibitors of SARS-CoV-2, the virus that causes COVID-19.

S&TR Cover Story: The ACES in Our Hand

Uranium enrichment is central to providing fuel to nuclear reactors, even those intended only for power generation. With minor modifications, however, this process can be altered to yield highly enriched uranium for use in nuclear weapons. The world’s need for nuclear fuel coexists with an ever-present danger: a non-nuclear-weapon state possessing enrichment technology could produce weapons-grade fissile material to develop a nuclear arsenal or supply radiological materials to others.

The latest issue of Science & Technology Review describes the Adaptive Computing Environment and Simulations (ACES) project in a cover story. ACES will augment and modernize the Lab’s ability to serve its nuclear nonproliferation mission through three thrusts. First, its researchers will develop new computer models and simulations of fissile materials enrichment using the gas centrifuge-based method. “In nonproliferation work, analysts often integrate computational modeling into their assessments,” says Stefan Hau-Riege, associate division leader in Applied Physics and leader of the ACES project. “The idea is to provide them with a modern capability to model fuel enrichment integrating data science and ML.” In the second thrust, researchers will create a new computational infrastructure to support and sustain this modeling capability. Finally, ACES will recruit and develop a trained workforce to carry out nonproliferation work that requires a detailed understanding of centrifuge enrichment technology.

ESGF to Upgrade Climate Projection Data System

The Earth System Grid Federation (ESGF), a multi-agency initiative that gathers and distributes data for top-tier projections of the Earth’s climate, is preparing a series of upgrades that will make using the data easier and faster while improving how the information is curated. The federation, led by the DOE’s Oak Ridge National Laboratory in collaboration with Argonne and Lawrence Livermore national laboratories, is integral to some of the most important, impactful, and widely respected projections of the Earth’s future climate: those made by scientists working with the Coupled Model Intercomparison Projects for the World Climate Research Programme. In a new iteration of the ESGF project, computational scientists are working to improve data discovery, access, and storage. The work will rely on the latest software tools, cloud computing resources, the world’s most powerful supercomputers, and DOE’s Energy Sciences Network, or ESnet. ESnet currently enables transfer rates of 100 gigabits per second (Gbps) among national laboratories and connections to national and international universities and research centers. An upgrade expected by the end of the year will boost ESnet transfer rates to as much as 400 Gbps.
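For a rough sense of scale, the arithmetic below estimates how long a hypothetical 1-petabyte (8 × 10^15 bits) climate dataset would take to move at each ESnet rate. It assumes the link sustains its full line rate, which real transfers rarely achieve, so actual times would be longer.

```latex
t_{100} = \frac{8 \times 10^{15}\ \text{bits}}{100 \times 10^{9}\ \text{bits/s}} = 8 \times 10^{4}\ \text{s} \approx 22.2\ \text{h}
\qquad
t_{400} = \frac{8 \times 10^{15}\ \text{bits}}{400 \times 10^{9}\ \text{bits/s}} = 2 \times 10^{4}\ \text{s} \approx 5.6\ \text{h}
```

The fourfold rate increase cuts the ideal transfer time by the same factor of four.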

Cognitive Simulation and Stockpile Stewardship

What is the Lab’s stockpile stewardship mission? What types of scientific advancements have been made in service to this mission? And what role does cognitive simulation play in this context? In September, the Lab published a three-part article series that answers these questions (among many others!):

- Developing technology to keep the nuclear stockpile safe, secure, and reliable

- Scientific discovery for stockpile stewardship

- The people of stockpile stewardship are the key to LLNL’s success

LLNL’s cognitive simulation algorithms leverage AI and ML to create models that better reflect both theory and experimental results. “Cognitive simulation–based design exploration tools will help [researchers] accelerate development of technologies used in the nuclear stockpile. Manufacturing tools will help increase the speed and efficiency of manufacturing parts for the stockpile and reduce materials waste,” said Brian Spears, a National Ignition Facility physicist and leader of the Director’s Initiative in cognitive simulation.

Virtual Seminars Focus on Mixed-Integer Programming and ML Reproducibility

The DSI’s September 22 virtual seminar featured Dr. Ana Kenney, a postdoctoral researcher in UC Berkeley’s Department of Statistics. Her talk, “Simultaneous Feature Selection and Outlier Detection Using Mixed-Integer Programming with Optimality Guarantees,” described a general mixed-integer programming framework that simultaneously performs feature selection and outlier detection with provably optimal guarantees, and discussed the approach’s theoretical properties. Kenney works primarily at the interface of computational statistics/ML and optimization applied to biomedical sciences. She earned her PhD in Statistics and Operations Research from Penn State.
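The combinatorial problem behind that talk can be illustrated at toy scale. The Python sketch below exhaustively tries every choice of k features and every set of m points to discard, keeping the combination with the smallest least-squares residual. Brute force stands in here for a mixed-integer programming solver: it reaches the same optimum on tiny problems but grows exponentially, whereas MIP solvers use branch-and-bound to prune the search and certify optimality. This is not Kenney’s formulation, and the function name is hypothetical.

```python
import itertools

import numpy as np


def best_subset_trimmed_ls(X: np.ndarray, y: np.ndarray, k: int, n_outliers: int):
    """Exhaustive joint feature selection + outlier removal (toy stand-in for a MIP).

    Returns (rss, feature_indices, outlier_indices) minimizing the residual sum of
    squares of a least-squares fit on k features after dropping n_outliers rows.
    """
    n, p = X.shape
    best = (np.inf, None, None)
    for feats in itertools.combinations(range(p), k):
        for outl in itertools.combinations(range(n), n_outliers):
            keep = np.setdiff1d(np.arange(n), outl)  # rows treated as inliers
            Xs = X[np.ix_(keep, feats)]
            coef, *_ = np.linalg.lstsq(Xs, y[keep], rcond=None)
            rss = float(np.sum((y[keep] - Xs @ coef) ** 2))
            if rss < best[0]:
                best = (rss, feats, outl)
    return best


# Usage: y depends on features 1 and 3; row 5 is corrupted by a large outlier.
rng = np.random.default_rng(0)
X = rng.normal(size=(12, 4))
y = 2.0 * X[:, 1] - 3.0 * X[:, 3]
y[5] += 50.0
rss, feats, outl = best_subset_trimmed_ls(X, y, k=2, n_outliers=1)
```

Even this 12-row, 4-feature example checks 6 × 12 = 72 candidate fits. That count explodes combinatorially as the problem grows, which is why solver-based approaches with optimality guarantees matter.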

Sayash Kapoor, a PhD candidate at Princeton University’s Center for Information Technology Policy, gave the October 5 seminar. His talk, “Leakage and the Reproducibility Crisis in ML-Based Science,” examined results from an investigation of reproducibility issues in ML-based science, showing that data leakage is a widespread problem and has led to severe reproducibility failures. He also discussed fundamental methodological changes to ML-based science to catch cases of leakage before publication. Kapoor’s research critically investigates ML methods and their use in science and has been featured in WIRED and Nature among other media outlets.
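Kapoor’s talk covered leakage broadly. As one narrow, concrete example, the Python sketch below flags test samples that also appear verbatim in the training set, a simple form of leakage that can inflate reported accuracy. The function name is hypothetical, and the check only catches exact duplicates (compared byte for byte after casting to float64). It does not catch near-duplicates or leakage introduced by preprocessing or feature selection done before the train/test split.

```python
import numpy as np


def find_train_test_overlap(train: np.ndarray, test: np.ndarray) -> list:
    """Return indices of test rows that exactly duplicate some training row."""
    train = np.asarray(train, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    # Hash each training row by its raw bytes for O(1) membership checks.
    seen = {row.tobytes() for row in train}
    return [i for i, row in enumerate(test) if row.tobytes() in seen]


train = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
test = np.array([[3.0, 4.0], [7.0, 8.0], [1.0, 2.0]])
leaked = find_train_test_overlap(train, test)
```

Here two of the three test rows are copies of training rows, so any score on them measures memorization rather than generalization.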

Recordings of both seminars will be posted to the YouTube playlist, and the next seminar is scheduled for November 14.

LLNL Celebrates 70th Anniversary

On October 11, more than 5,000 LLNL employees gathered to celebrate the Lab’s 70th anniversary. Throughout the first-ever Employee Engagement Day, dozens of the Lab’s facilities and programs opened their doors for employees to get a close-up look at LLNL’s cutting-edge science and technology. It was a celebration of making the impossible possible, bringing a huge number of Lab colleagues onsite together at the same time for the first time in years. “My favorite part of today was walking around and meeting people,” said LLNL director Kim Budil. “I met thousands, probably, everywhere I went, which was great. It was a chance to really reconnect with what makes this place so great—the heartbeat of the Lab.” View the event Flickr album.

Meet an LLNL Data Scientist

According to Amanda Muyskens, the best thing about being a statistician is the opportunity to work on—and learn from—unique challenges. She joined the Lab in 2019 after earning a PhD in Statistics from North Carolina State University, and today her research includes Gaussian processes (GP), computationally efficient statistical methods, uncertainty quantification, and statistical consulting. Muyskens is the principal investigator for the MuyGPs project, which introduces a computationally efficient GP hyperparameter estimation method for large data (watch her DSI virtual seminar). Her team used MuyGPs methods to efficiently classify images of stars and galaxies, and they developed an open-source Python code called MuyGPyS for fast implementation of the GP algorithm. Muyskens credits the team dynamic for its success, noting, “We constantly teach each other from our disciplines and achieve things together that wouldn’t have been possible alone.” In 2022, DSSI students contributed to MuyGPs with parameter estimation optimization and an interactive visualization tool. “Students bring a new perspective to the work, and I’m inspired to see them tackle problems in ways that those of us entrenched in the applications may never have considered,” Muyskens says. Most recently, she assumed DSSI co-directorship and began a collaboration with Auburn University data science students.
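For readers new to the idea, the sketch below illustrates nearest-neighbor GP prediction combined with leave-one-out cross-validation to choose a kernel length scale. As we understand the published MuyGPs work, local cross-validation of this kind is how the method avoids the cost of a full n×n GP likelihood. This is a toy NumPy illustration with hypothetical function names, an RBF kernel, a grid of candidate length scales, and a brute-force neighbor search. It is not the MuyGPyS API, which has its own kernels, neighbor search, and optimizers.

```python
import numpy as np


def rbf(a: np.ndarray, b: np.ndarray, length_scale: float) -> np.ndarray:
    """Squared-exponential (RBF) kernel matrix between rows of a and rows of b."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-d2 / (2.0 * length_scale ** 2))


def loo_nn_predictions(x, y, length_scale, k=10, nugget=1e-6):
    """Predict each y[i] from a GP conditioned only on its k nearest neighbors (excluding i)."""
    n = x.shape[0]
    preds = np.empty(n)
    # Brute-force O(n^2) distances: fine for a toy, not for large data.
    d2 = np.sum((x[:, None, :] - x[None, :, :]) ** 2, axis=-1)
    for i in range(n):
        order = np.argsort(d2[i])
        nbrs = order[order != i][:k]  # leave point i out
        K = rbf(x[nbrs], x[nbrs], length_scale) + nugget * np.eye(len(nbrs))
        k_star = rbf(x[i:i + 1], x[nbrs], length_scale)[0]
        preds[i] = k_star @ np.linalg.solve(K, y[nbrs])  # GP posterior mean
    return preds


def fit_length_scale(x, y, candidates, k=10):
    """Pick the candidate length scale with the lowest leave-one-out squared error."""
    errors = [float(np.mean((loo_nn_predictions(x, y, ls, k) - y) ** 2)) for ls in candidates]
    return candidates[int(np.argmin(errors))], errors


x = np.linspace(0.0, 10.0, 50)[:, None]
y = np.sin(x[:, 0])
best_ls, errors = fit_length_scale(x, y, [0.01, 1.0])
```

Each prediction solves only a k×k system, so the cost of evaluating the objective grows with the number of points times k cubed rather than with n cubed. The tiny length scale makes neighbors look uncorrelated and predictions collapse toward zero, so cross-validation prefers the smoother setting.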