March 29, 2022

Livermore WiDS Provides Forum for Women in Data Science

LLNL celebrated the 2022 global Women in Data Science (WiDS) conference on March 7 with its fifth annual regional event, featuring workshops, mentoring sessions, and a discussion with LLNL Director Kim Budil, the first woman to hold that role. The all-day event drew women data scientists and students from inside and outside the Lab, who gathered to share coding tips and swap stories about growing their careers. Attendees watched presentations by LLNL women data scientists, took part in breakout sessions, and viewed a livestream of the global WiDS conference hosted by Stanford University.

Budil, who recently marked her one-year anniversary as director, kicked off the event with a fireside chat moderated by Jessie Gaylord, LLNL’s division leader for Global Security Computing Applications. Budil shared her thoughts on women’s empowerment, her approach to leadership, and her path to becoming director, highlighting transparency, accessibility, and the power of networking. She called her directorship the “surprise and honor of a lifetime” and discussed the challenges she faced along the way.

Budil called diversity, equity, and inclusion a “requirement for excellence” in the workplace and addressed the importance of data science at LLNL, specifically how machine learning (ML) and artificial intelligence are revolutionizing work across the Lab, from core mission areas such as high-energy-density physics and advanced manufacturing to human resources.

Videos of the talks are posted to the Livermore Lab Events YouTube channel. More information about the Lab’s history with WiDS can be found online.

New Research to Debut at ICLR

LLNL researchers have two papers accepted to the International Conference on Learning Representations (ICLR 2022), held April 25–29. ICLR is dedicated to the advancement of representation learning, in which models learn, directly from raw data, the features they need for tasks such as detection and classification.

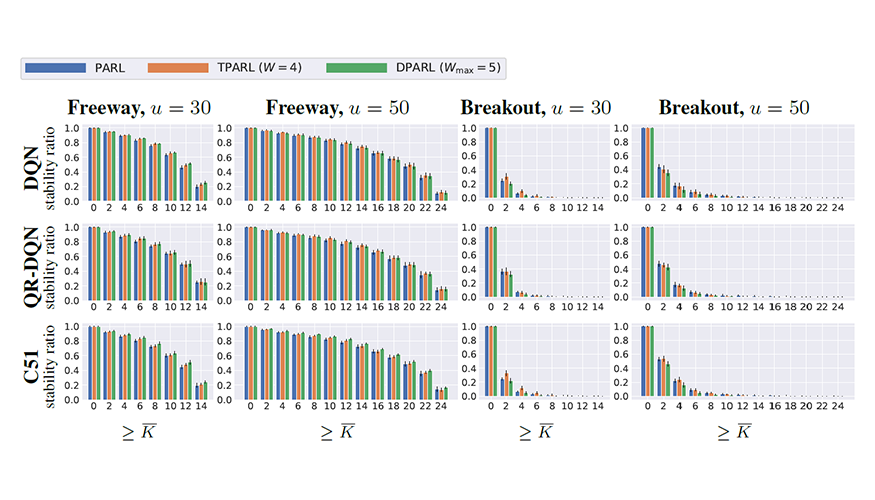

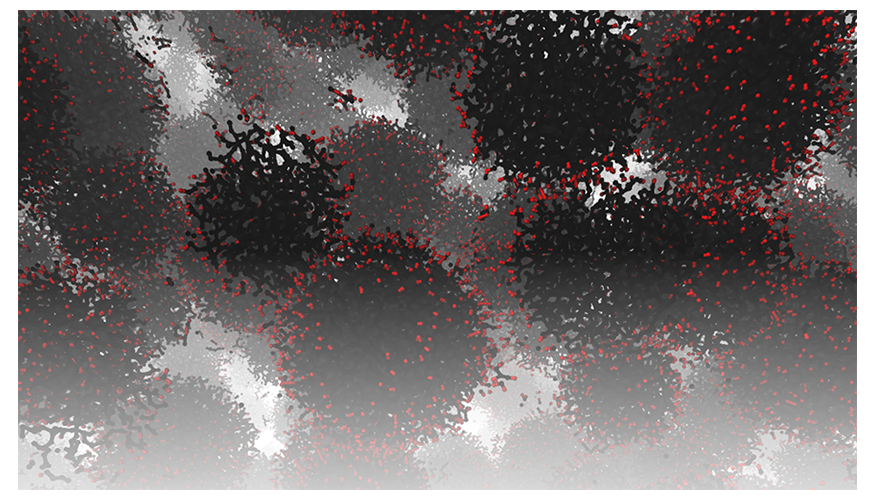

- COPA: Certifying Robust Policies for Offline Reinforcement Learning against Poisoning Attacks, which proposes a method for certifying the number of poisoning trajectories that can be tolerated under different certification criteria (preprint). Authors are Fan Wu, Linyi Li, Huan Zhang, Bhavya Kailkhura, Krishnaram Kenthapadi, Ding Zhao, and Bo Li. (Image at left shows robustness certification results for different reinforcement learning and certification methods.)

- On the Certified Robustness for Ensemble Models and Beyond, which proposes a lightweight Diversity Regularized Training (DRT) method for improving the certified robustness of ensemble models (preprint). Authors are Zhuolin Yang, Linyi Li, Xiaojun Xu, Bhavya Kailkhura, Tao Xie, and Bo Li.
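Certification against poisoning is often built on aggregation: train many sub-policies on disjoint partitions of the training data, then take a majority vote. The Python sketch below shows the vote-margin idea behind such certificates, assuming each poisoned trajectory lands in exactly one partition and can therefore change at most one sub-policy's vote. It is a generic partition-aggregation illustration, not the COPA paper's exact protocol, and `aggregate_action` and `certified_tolerance` are hypothetical names.

```python
from collections import Counter
from typing import Sequence


def aggregate_action(votes: Sequence[int]) -> int:
    """Majority vote over sub-policy actions; ties go to the smallest action id."""
    counts = Counter(votes)
    # Highest count first, then smallest action id, for deterministic tie-breaking.
    return min(counts, key=lambda a: (-counts[a], a))


def certified_tolerance(votes: Sequence[int], num_actions: int) -> int:
    """Largest K such that changing any K sub-policy votes cannot flip the vote.

    Assumption: one poisoned trajectory affects at most one sub-policy's vote.
    """
    counts = Counter(votes)
    top = aggregate_action(votes)
    n_top = counts[top]
    tolerance = len(votes)  # upper bound if there is no competing action
    for b in range(num_actions):
        if b == top:
            continue
        gap = n_top - counts[b]
        # The worst case moves one vote from `top` to `b`, shrinking the gap by 2.
        # If b < top, b wins ties, so the gap must remain strictly positive.
        k = gap // 2 if b > top else (gap - 1) // 2
        tolerance = min(tolerance, k)
    return tolerance
```

For example, if five of eight sub-policies choose action 0 and two choose action 1, moving two votes from 0 to 1 would give 3 versus 4, so `certified_tolerance([0, 0, 0, 0, 0, 1, 1, 2], 3)` certifies only one poisoned trajectory.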

“Consistent acceptance of our research work in top machine learning conferences such as ICLR shows that LLNL is at the forefront of ML robustness research. It is very rewarding to see that the impact of our work is being recognized both internally and externally,” said LLNL computer scientist Bhavya Kailkhura. Last year at ICLR 2021, Lab teams showcased work in deep neural networks and symbolic regression.

Director of DOE’s Artificial Intelligence and Technology Office Visits LLNL

Pamela Isom, director of the Artificial Intelligence and Technology Office (AITO) for the U.S. Department of Energy, visited LLNL on March 2 to learn about the Lab’s role in artificial intelligence and other initiatives.

During her visit, Isom toured the National Ignition Facility, where she was briefed on highlights of the inertial confinement fusion program. She was also briefed on and toured the Lab’s High Performance Computing programs, and she visited the Advanced Manufacturing Laboratory. Isom took part in a discussion with Lab scientists on climate and energy, focusing on climate resilience, adaptation, and engineering the carbon economy, and completed her visit with an overview of LLNL’s internships, university relations, and community engagement.

Isom previously served as DOE’s deputy chief information officer for Architecture, Engineering, Technology and Innovation. She is a recipient of the 2021 Federal 100 Awards for exceptional contributions to how information technology is used to advance vital government missions. Isom is also a two-time recipient of the federal Gears of Government Award for her advancements in artificial intelligence and geospatial data science at DOE, and a recipient of the InnovateIT: Modernization Innovation Leader Award for excellence in federal government IT modernization leadership.

(Pictured, from left: Pat Falcone, deputy director for Science and Technology; Jim Brase, deputy associate director for Programs in the Computing Directorate; Pamela Isom, director of the Artificial Intelligence and Technology Office, DOE; Brian Spears, physicist; and Lab Director Kim Budil.)

Merlin Integrates Machine Learning into Scientific HPC Workflows

Machine learning (ML) is a crucial tool for analyzing large-scale ensemble data, but managing ML workflows in a high-performance computing (HPC) environment with heterogeneous architectures, parallel file systems, and batch scheduling is a daunting challenge. No one-size-fits-all solution serves every type of complex scientific simulation.

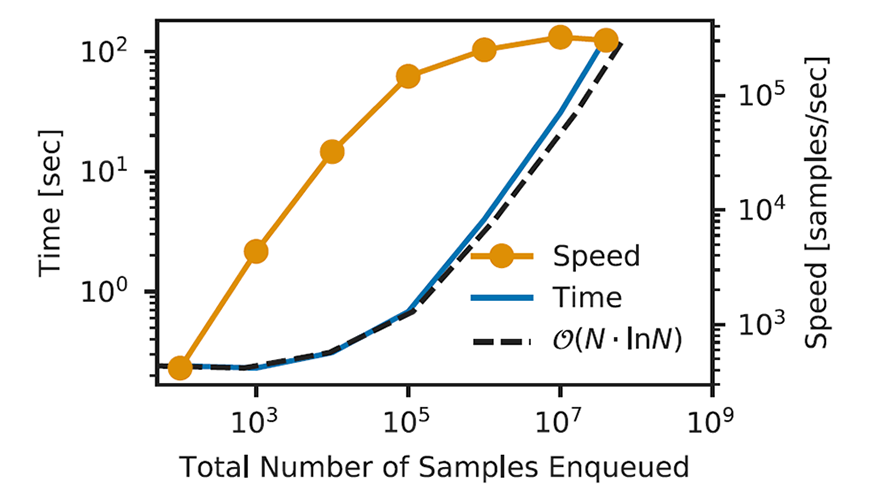

LLNL researchers have developed Merlin, an open-source workflow framework that enables large, ML-friendly ensembles of scientific simulations. Merlin features a flexible HPC-centric interface, low per-task overhead, multi-tiered fault recovery, and a hierarchical sampling algorithm that facilitates queuing of millions of tasks.
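The Python sketch below illustrates how a hierarchical scheme can make queuing millions of tasks tractable. It assumes that each "expansion" step splits a range of sample indices into at most `branching` child ranges until each range is small enough to become a leaf batch of simulations. In a real workflow, each recursive call would itself be a queued task, so no single task enqueues more than `branching` children. This is a generic illustration, not Merlin’s implementation, and `expand`, `Batch`, `branching`, and `leaf_size` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Batch:
    start: int  # first sample index (inclusive)
    stop: int   # last sample index (exclusive)


def expand(start: int, stop: int, branching: int, leaf_size: int) -> List[Batch]:
    """Recursively split [start, stop) into at most `branching` child ranges.

    Returns the leaf batches, in order, that would each run a set of simulations.
    """
    if branching < 2 or leaf_size < 1:
        raise ValueError("need branching >= 2 and leaf_size >= 1")
    n = stop - start
    if n <= leaf_size:
        return [Batch(start, stop)]
    step = -(-n // branching)  # ceiling division so the children cover the range
    leaves: List[Batch] = []
    for child_start in range(start, stop, step):
        child_stop = min(child_start + step, stop)
        leaves.extend(expand(child_start, child_stop, branching, leaf_size))
    return leaves
```

With 1,000,000 samples, a branching factor of 10, and leaf batches of 1,000 samples, `expand(0, 1_000_000, 10, 1_000)` yields 1,000 contiguous leaf batches after three levels of splitting. The queue only ever receives small fan-outs rather than a million tasks at once.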

A paper in the June issue of Future Generation Computer Systems describes Merlin’s ML-integrated workflow system and the considerations driving its design, and reports Merlin’s performance on LLNL’s Pascal and Sierra supercomputers. For example, to demonstrate Merlin’s scalability, the researchers used Sierra to create an unprecedentedly large fusion simulation dataset containing the multivariate results of approximately 100 million individual simulations. Other case studies show Merlin’s flexibility in cascading and iterative scientific workflows.

“Merlin is a great example of what can happen when a diverse team comes together to solve an important problem—and has the supportive, inclusive, and trusting environment needed to take a risk. The project could not have proceeded without the partnerships we formed across LLNL, especially with Livermore Computing and the LDRD program,” states lead author Luc Peterson. “Ultimately, I think we’ve created a truly enabling technology.”

Peterson notes that when the COVID-19 pandemic hit, the team realized that the same workflow tool developed for National Ignition Facility fusion simulations could help researchers and analysts use large-scale ML-enhanced simulation studies to rapidly respond to urgent questions from national leaders about public health.

Merlin now has a permanent home in LLNL’s Weapon Simulation and Computing program under the direction of Workflow Tools lead Charles Doutriaux. Peterson adds, “We are excited to work with Charles and his team to see how Merlin can continue to make a broad impact as part of a larger vision for next-generation simulation workflow systems.”

Along with Peterson, LLNL co-authors are Ben Bay, Joe Koning, Peter Robinson, Jessica Semler, Jeremy White, Rushil Anirudh, Kevin Athey, Peer-Timo Bremer, Francesco Di Natale, David Fox, Jim Gaffney, Sam Ade Jacobs, Bhavya Kailkhura, Bogdan Kustowski, Steven Langer, Brian Spears, Jay Thiagarajan, Brian Van Essen, and Jae-Seung Yeom. (Image at left shows the scalability of Merlin’s task enqueuing time [seconds] and speed [samples/second] with total number of samples.)

ML Model Finds COVID-19 Risks for Cancer Patients

Analyzing one of the largest databases of patients with cancer and COVID-19 with machine learning models, researchers from LLNL and the University of California, San Francisco, found previously unreported links between a rare type of cancer—as well as two cancer treatment-related drugs—and an increased risk of hospitalization from COVID-19. The findings appear in the journal Cancer Medicine.

Using a logistic regression approach, the team examined de-identified electronic health record data from the UC Health COVID Research Data Set on nearly half a million patients who underwent COVID-19 testing at all 17 UC-affiliated hospitals. The dataset included nearly 50,000 patients with cancer, more than 17,000 of whom had also tested positive for COVID-19, and contained information on patient demographics, comorbidities, lab work, cancer types, and various cancer therapies.

The researchers examined a range of factors and disease outcomes, including hospitalization, ventilation, and death, and found higher COVID-19 risk associated with a specific group of rarer blood cancers and with two medications used to treat cancer: venetoclax (used to treat leukemia) and methotrexate (an immune suppressant used in chemotherapy). The LLNL team includes principal investigator Priyadip Ray, Jose Cadena, Sam Nguyen, Kwan Ho Ryan Chan, Braden Soper, and Amy Gryshuk.
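Logistic regression models the log-odds of an outcome, such as hospitalization, as a linear function of patient features. Exponentiating a feature’s coefficient gives the odds ratio associated with that feature, which is how a risk factor shows up as "increased risk." The Python sketch below fits such a model by plain full-batch gradient descent. It is a generic illustration, not the study’s model or code; the function names are hypothetical and the toy data are invented for demonstration only.

```python
import math
from typing import List, Sequence


def fit_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 1.0, iters: int = 5000) -> List[float]:
    """Fit logistic regression by gradient descent on the mean log-loss.

    Returns [intercept, beta_1, ..., beta_p].
    """
    n, p = len(X), len(X[0])
    w = [0.0] * (p + 1)
    for _ in range(iters):
        grad = [0.0] * (p + 1)
        for xi, yi in zip(X, y):
            z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], xi))
            err = 1.0 / (1.0 + math.exp(-z)) - yi  # predicted probability minus label
            grad[0] += err
            for j, xj in enumerate(xi):
                grad[j + 1] += err * xj
        w = [wj - lr * g / n for wj, g in zip(w, grad)]
    return w


def odds_ratios(w: Sequence[float]) -> List[float]:
    """exp(beta_j): multiplicative change in outcome odds per unit of feature j."""
    return [math.exp(b) for b in w[1:]]


# Toy data (invented, not from the study): feature = 1 if exposed to a hypothetical risk factor.
X = [[1.0]] * 10 + [[0.0]] * 10
y = [1] * 4 + [0] * 6 + [1] * 2 + [0] * 8
w = fit_logistic(X, y)
print(f"odds ratio ~ {odds_ratios(w)[0]:.3f}")  # close to (4/6) / (2/8) = 8/3
```

With a single binary feature, the fitted odds ratio should match the hand-computed one, (4/6)/(2/8) ≈ 2.667. A real analysis would include many covariates (demographics, comorbidities, therapies) so that each odds ratio is adjusted for the others.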

ML-Driven Atomistic Simulations Shed New Light on Nanocarbon Materials

Carbon exhibits a remarkable tendency to form nanomaterials with unusual physical and chemical properties, arising from its ability to engage in different bonding states. To better understand how carbon nanomaterials could be tailor-made and how their formation affects shock phenomena such as detonation, LLNL scientists conducted ML-driven atomistic simulations of the fundamental processes that control nanocarbon formation. The resulting insights could serve as a design tool, help guide experimental efforts, and enable more accurate modeling of energetic materials.

The team found that liquid nanocarbon formation follows classical growth kinetics driven by Ostwald ripening (the growth of large clusters at the expense of shrinking small ones) and obeys dynamical scaling, in a process mediated by reactive carbon transport in the surrounding fluid. The modeling effort comprised an in-depth investigation of carbon condensation (precipitation) in oxygen-deficient carbon oxide mixtures at high pressures and temperatures, made possible by large-scale simulations using ML interatomic potentials. A paper on this research, authored by Rebecca Lindsay, Nir Goldman, Laurence Fried, and Sorin Bastea, appears in Nature Communications.
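For readers new to these terms, the textbook Lifshitz–Slyozov–Wagner (LSW) theory of diffusion-limited Ostwald ripening predicts that the mean cluster radius grows as the cube root of time. "Dynamical scaling" means the cluster-size distribution keeps the same shape once sizes are rescaled by the current mean. The relations below are the classical forms, shown for orientation only. The exponents and scaling functions reported in the Nature Communications paper are not reproduced here and may differ.

$$
\langle R(t)\rangle^{3} - \langle R(0)\rangle^{3} = k\,t
\qquad\Longrightarrow\qquad
\langle R(t)\rangle \propto t^{1/3}\ \text{at late times},
$$

$$
P(R,t) = \frac{1}{\langle R(t)\rangle}\, g\!\left(\frac{R}{\langle R(t)\rangle}\right),
\qquad \int_0^\infty g(x)\,dx = 1,
$$

Here $k$ is a rate constant, $P(R,t)$ is the normalized distribution of cluster radii at time $t$, and $g$ is a time-independent scaling function.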

Combining Simulation and Experiment with AI

Brian Spears, director of LLNL’s new Artificial Intelligence Innovation Incubator (AI3), gave a virtual talk on February 28 titled “Cognitive Simulation: A Tour of AI Technologies and Strategies at LLNL.” His presentation was part of the Computing Directorate’s ongoing Comp 101 speaker series.

Spears explained how the Lab is using modern AI technologies to combine predictive simulation models with rich experimental data—a set of methods known as cognitive simulation. “This is a dynamic, vibrant, growing area at the Lab, and we’re working very hard to give it intentional direction and deliberate focus,” he said. This cognitive simulation work is aligned along three development thrusts: a tool for amplifying predictive models and computational platforms; a broad mission-driven strategy for the National Nuclear Security Administration; and a vision for modernizing scientific investigation, which brings together AI, HPC, and experiments.

As an example, Spears provided an overview of deep learning and autoencoding methods used in LLNL’s inertial confinement fusion research. Describing the AI methods and computational capabilities needed to handle the stringent requirements of the Lab’s scientific endeavors, he noted, “We see AI not as a tool we have to add to our missions, but as a tool that will grow naturally into all of the missions. The goal is to develop the technologies and strategy that make the Lab most effective at harnessing predictive techniques for transformational change.”

Virtual Seminar Explores Neural Modeling

Dr. Joshua Levine of the University of Arizona spoke at the DSI’s February 28 virtual seminar. His presentation, “Neural Representations for Volume Visualization,” described two projects. The first studies how generative neural models can be used to model the volume rendering of scalar fields. The second is a recent neural modeling approach for building compressive representations of volume data; Levine showed how this approach yields highly compact representations that outperform state-of-the-art volume compression approaches.
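One common form of compressive neural representation fits a small multilayer perceptron (MLP) that maps voxel coordinates (x, y, z) to a scalar value. The stored "compressed" volume is then just the network’s weights. The Python sketch below computes the resulting compression ratio. The sizes are illustrative assumptions, not figures from Levine’s talk: a 256³ float32 volume and a 3-64-64-1 MLP with float32 weights. Real methods may also quantize or otherwise encode the weights.

```python
from typing import Sequence


def mlp_param_count(layer_sizes: Sequence[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


def compression_ratio(volume_shape: Sequence[int], layer_sizes: Sequence[int],
                      bytes_per_voxel: int = 4, bytes_per_param: int = 4) -> float:
    """Raw volume size in bytes divided by the size of the network weights in bytes."""
    voxels = 1
    for dim in volume_shape:
        voxels *= dim
    return (voxels * bytes_per_voxel) / (mlp_param_count(layer_sizes) * bytes_per_param)


# Hypothetical sizes for illustration only.
params = mlp_param_count([3, 64, 64, 1])
ratio = compression_ratio((256, 256, 256), [3, 64, 64, 1])
print(params, round(ratio))  # prints: 4481 3744
```

The ratio only measures storage. Whether a network this small reconstructs a given volume accurately enough is a separate question that depends on the data and the training method.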

Before joining the University of Arizona’s Department of Computer Science in 2016, Levine was an assistant professor at Clemson University and a postdoctoral research associate at the University of Utah’s SCI Institute. He is a recipient of a 2018 DOE Early Career Award and received his PhD in Computer Science from The Ohio State University in 2009. A recording of Levine’s talk will be posted to the seminar series’ YouTube playlist.

Meet an LLNL Data Scientist

The Lab’s biosecurity mission relies on multidisciplinary expertise in areas such as molecular biology, bioinformatics, HPC, and machine learning. LLNL bioinformatics researcher Jonathan Allen seeks to understand biological systems that impact human health and safety, in part by working with the Accelerating Therapeutics for Opportunities in Medicine (ATOM) consortium. “An exciting challenge is to synthesize small molecule compounds proposed by a computational model, physically test them, and revise the model based on the experimental feedback,” he explains. Recently, Allen helped expand LLNL’s partnership with ATOM to include Purdue University. The collaboration gave students an opportunity to apply data science techniques to the drug discovery process, searching for novel therapeutics for cancers and other diseases; future plans include evaluation of new COVID-19 compounds. As a mentor, Allen states, “I hope to contribute to a positive learning environment and encourage a healthy, socially thoughtful research community. Every person develops their skills and interests at their own pace and has the potential to do great things.” Allen joined LLNL in 2007 after earning a PhD in Computer Science and Bioinformatics from Johns Hopkins University.