July 27, 2021

Deep Learning for Materials Discovery

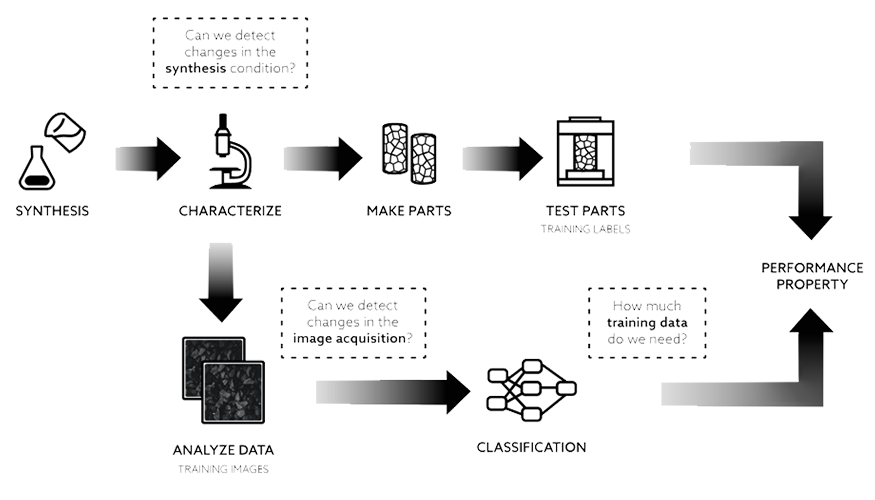

Deep Learning (DL) models are proving useful for several materials science applications, including materials discovery, microstructure analysis, and property prediction. In a recent paper in ACS Omega, LLNL researchers propose a unified framework that leverages the predictive uncertainty from deep neural networks to answer challenging questions materials scientists usually encounter in machine learning (ML)–based materials application workflows.

Specifically, the team demonstrates that predictive uncertainty from uncertainty-aware DL approaches (particularly deep ensembles) can be used to determine how much training data is required to achieve a desired prediction accuracy without relying on labeled data. Further, the team shows that predictive uncertainty-guided decision referral is highly effective at detecting confusing material samples and out-of-distribution samples that deviate from the training data, and at keeping deep neural networks from making wrong predictions on them. The newly proposed uncertainty-enabled decision-making method is generic and can be used in a wide range of scientific domains to ensure trust, dependability, and usefulness of DL models.
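The idea of uncertainty-guided decision referral can be illustrated with a minimal sketch. It assumes a classification setting, uses the entropy of the ensemble-averaged class probabilities as the uncertainty measure, and picks an arbitrary threshold; the function names, sample values, and threshold are all hypothetical and are not taken from the paper, which may use a different uncertainty measure.

```python
import math
from typing import List, Optional, Sequence, Tuple


def ensemble_mean(member_probs: Sequence[Sequence[float]]) -> List[float]:
    """Average the class probabilities predicted by each ensemble member."""
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    return [sum(m[c] for m in member_probs) / n_members for c in range(n_classes)]


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def predict_or_refer(
    member_probs: Sequence[Sequence[float]], max_entropy: float
) -> Tuple[Optional[int], float]:
    """Return (class index, entropy), or (None, entropy) when the sample is referred."""
    mean = ensemble_mean(member_probs)
    entropy = predictive_entropy(mean)
    if entropy > max_entropy:
        return None, entropy  # too uncertain: refer instead of predicting
    best_class = max(range(len(mean)), key=lambda c: mean[c])
    return best_class, entropy


# Hypothetical softmax outputs from a three-member ensemble (assumed values).
confident = [[0.90, 0.05, 0.05], [0.85, 0.10, 0.05], [0.92, 0.04, 0.04]]
confused = [[0.80, 0.10, 0.10], [0.10, 0.80, 0.10], [0.10, 0.10, 0.80]]

THRESHOLD = 0.7  # assumed cutoff in nats; would be tuned on held-out data in practice

confident_label, confident_entropy = predict_or_refer(confident, THRESHOLD)
confused_label, confused_entropy = predict_or_refer(confused, THRESHOLD)
```

In this sketch the members agree on the first sample, so it receives class 0, while they disagree on the second, so its averaged prediction is nearly uniform and the sample is referred (`None`) rather than classified.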

Optimized Viral Detection

LLNL biostatistician and DSSI co-director Nisha Mulakken has enhanced the Lawrence Livermore Microbial Detection Array (LLMDA) system with detection capability for all variants of SARS-CoV-2. The technology detects a broad range of organisms—viruses, bacteria, archaea, protozoa, and fungi—and has demonstrated novel species identification for human health, animal health, biodefense, and environmental sampling scenarios.

The LLMDA relies on high-performance computing and extensive data analysis to compare DNA sequences. Mulakken added 60-base pair probes to the LLMDA to target the SARS-CoV-2 genome, then ran multiple algorithms in parallel over the 41,450 SARS-CoV-2 reference sequences and compared those regions against genomes from all other known viruses. Given the pandemic’s urgency, Mulakken accomplished this optimization in about a month using LLNL’s Corona supercomputing cluster and funding from the CARES Act. She discussed the project on a recent video episode (runtime 14:17) of The Data Standard Podcast.

Virtual Seminar Explores Adaptive Variational Bayes

For the DSI’s June 16 virtual seminar, Dr. Lizhen Lin presented “Adaptive Contraction Rates and Model Selection Consistency of Variational Posteriors.” She proposed a novel variational Bayes framework called adaptive variational Bayes, which can operate on a collection of model spaces with varying structures. The proposed framework averages variational posteriors over individual models with certain weights to obtain the variational posterior over the entire model space. Lin is the University of Notre Dame’s Sara and Robert Lumpkings Associate Professor, and her background includes Bayesian nonparametrics, Bayesian asymptotics, statistics on manifolds, and geometric DL.
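The weighted averaging described above can be written schematically as follows. The notation is an assumption made for illustration: $\mathcal{M}$ indexes the candidate models, $\hat{q}_m$ is the variational posterior fitted within model $m$, and $\hat{\gamma}_m$ is the weight assigned to it. How the weights are chosen is not stated here.

$$
\hat{q}(\theta) \;=\; \sum_{m \in \mathcal{M}} \hat{\gamma}_m \, \hat{q}_m(\theta),
\qquad \hat{\gamma}_m \ge 0, \qquad \sum_{m \in \mathcal{M}} \hat{\gamma}_m = 1 .
$$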

Research Highlights

- Reinforcement learning at ICML. An LLNL team has a paper (“Discovering Symbolic Policies with Deep Reinforcement Learning”) accepted to the 2021 International Conference on Machine Learning (ICML), one of the world’s premier conferences of its kind.

- Sharpening NIF shot predictions. In a Physics of Plasmas paper, researchers describe a new ML-based approach for modeling inertial confinement fusion experiments that results in more accurate predictions of National Ignition Facility (NIF) shots. ML models that combine simulation and experimental data are more accurate than the simulations alone, reducing prediction errors from as high as 110% to less than 7%.

- Laser-driven ion acceleration. Research conducted at LLNL is the first to apply neural networks to the study of high-intensity short-pulse laser-plasma acceleration, specifically for ion acceleration from solid targets. Whereas neural networks are most often used to study datasets, in this work the team uses them as a surrogate for a full simulation or experiment to explore a sparsely sampled parameter space. The research marks another Livermore publication in Physics of Plasmas.

- DL for audio restoration. Unsupervised DL methods for solving audio restoration problems extensively rely on carefully tailored neural architectures that carry strong inductive biases for defining priors in the time or spectral domain. Researchers propose a new U-Net based prior that does not impact either the network complexity or convergence behavior of existing convolutional architectures, yet leads to significantly improved restoration.

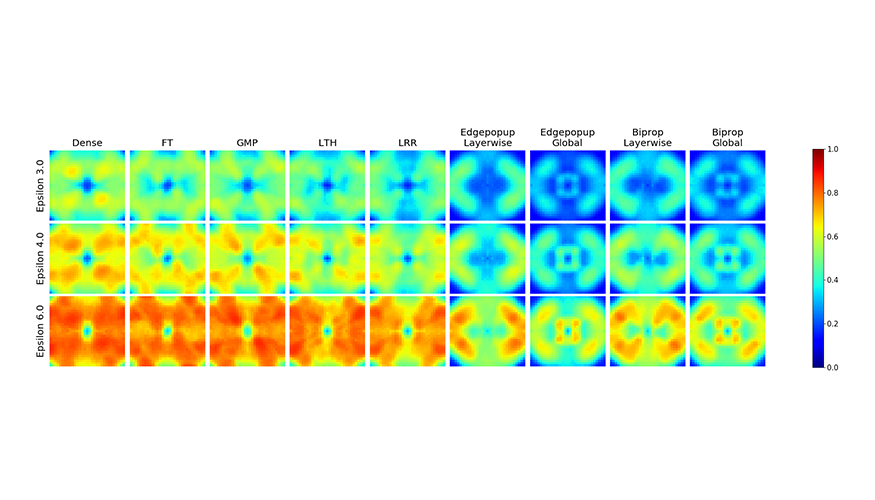

- A winning hand in ML. Successful adoption of DL depends on robustness to distributional shifts and on model compactness for efficiency. Efforts in ML research to achieve extreme compactness without sacrificing out-of-distribution robustness and accuracy have mostly been unsuccessful. With a large-scale analysis of “lottery ticket-style” pruning, researchers show that network compression, if done properly, can dramatically improve robustness and accuracy. The LLNL team also created Compact, Accurate, and Robust Deep neural networks (CARDs) that outperform networks trained without compression.
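For readers unfamiliar with pruning, the sketch below shows one magnitude-pruning step, which zeroes the smallest-magnitude weights. It is an illustration only: lottery ticket-style procedures typically repeat training, pruning, and weight resetting over several rounds, and this is not the CARDs method. The function name, weights, and sparsity level are hypothetical.

```python
from typing import List, Sequence, Tuple


def magnitude_prune(
    weights: Sequence[float], sparsity: float
) -> Tuple[List[float], List[int]]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude.

    Returns the pruned weights and a binary mask (1 = kept, 0 = pruned).
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned_indices = set(order[:n_prune])
    mask = [0 if i in pruned_indices else 1 for i in range(len(weights))]
    pruned = [w if m else 0.0 for w, m in zip(weights, mask)]
    return pruned, mask


# Hypothetical flattened layer weights (assumed values).
layer_weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned_weights, keep_mask = magnitude_prune(layer_weights, sparsity=0.5)
```

With half the weights pruned, only the three largest-magnitude entries (0.8, 0.3, and -0.6) survive; the mask can then be reapplied after each later training step so the pruned weights stay at zero.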

Collaboration Possibilities at JAFOE

DSI council member Ana Kupresanin brought her expertise in statistics, probability, computer science, visualization, and exploratory data analysis to the invitation-only 2021 Japan-America Frontiers of Engineering (JAFOE) symposium. Held virtually this year on June 23–25, JAFOE brings together Japanese and American engineers from industry, universities, and other research institutions to introduce their areas of engineering research and technical work. The event facilitates an interdisciplinary transfer of knowledge and methodology that could eventually lead to collaborative networks of engineers from the two countries. As one of approximately 60 attendees, Kupresanin met several researchers whom the DSI will consider inviting to speak at upcoming virtual seminars.

Meet an LLNL Data Scientist

Throughout his career, Brian Gallagher has seen both himself and the work evolve. “I’m working on different things with different people, and I’m playing a very different role on my projects now than I was then,” he observes. For example, when Gallagher came to the Lab more than 15 years ago, data science was a nascent field, and its techniques were still quite new. He notes, “Data science has gone from a sort of fringe, esoteric research area to a mainstream technology being applied widely to programs across the Lab.”

Mentoring has always been important to Gallagher, who joined the Lab after completing his M.S. in Computer Science at UMass Amherst. “I feel extremely grateful for the opportunities I’ve had in my life and the people who have helped me along the way,” he states. After serving as a DSI Data Science Challenge mentor in 2020, Gallagher directs this year’s program for UC Merced and UC Riverside students. “My main goal for the Challenge is to provide an environment where everyone can grow,” he says. “You can see and feel the changes in people from day to day. That’s my favorite part of the experience.”

When he’s not working with students, Gallagher leads the Data Science and Analytics Group at LLNL’s Center for Applied Scientific Computing. He contributes to projects that leverage ML for nuclear threat-reduction applications, optimization of feedstock materials, and design of high-entropy alloys. “Because data science is so broadly applicable, I am constantly exposed to new application areas and new people from a variety of backgrounds,” Gallagher explains.

Tackling Asteroids with Deep Learning

The annual Data Science Challenge (DSC) with UC Merced was once again a stellar success. For three weeks, students were tasked with applying DL models to optical astronomy data to detect and identify near-Earth objects such as asteroids. LLNL scientists served as mentors and gave presentations on using ML to identify galaxy blending, classifying stars and galaxies through Gaussian processes, astronomy background and fundamentals, finding microlensing black holes, and inner solar system asteroid stability.

“The experience has been incredibly fulfilling to mentor such incredible graduates and undergraduates. The graduate students really stepped up in making the experience fulfilling to the wide range of undergrads in both domain expertise and experience,” said DSC mentor (and former intern) Ryan Dana. Another DSC will be held with UC Riverside students beginning on August 30.

Industry Forum Coming Soon

LLNL’s first-ever Machine Learning for Industry Forum (ML4I) is almost here. The three-day virtual event begins on August 10 and is sponsored by the High Performance Computing Innovation Center and the DSI. Participants and attendees are expected from industry, research institutions, and academia. The schedule features daily keynote addresses from AI/ML leaders at Ford Motor Company, Covariant AI, and the Department of Energy's Artificial Intelligence Technology Office. Register by July 29.