Dec. 22, 2020

Spotlight: Research Team Recognized for COVID-19 Model

A machine learning model developed by a team of LLNL scientists to aid in COVID-19 drug discovery efforts was a finalist for the Gordon Bell Special Prize for High Performance Computing-Based COVID-19 Research. Using the Sierra supercomputer, the team created a more accurate and efficient generative model to enable COVID-19 researchers to produce novel compounds that could possibly treat the disease.

The team trained the model on an unprecedented 1.6 billion small-molecule compounds plus 1 million additional promising compounds for COVID-19, and reduced the model training time from 1 day to just 23 minutes. The team members are Sam Ade Jacobs, Tim Moon, Kevin McLoughlin, Derek Jones, David Hysom, Dong Ahn, John Gyllenhaal, Pythagoras Watson, Felice Lightstone, Jonathan Allen, Ian Karlin, and Brian Van Essen.

Spotlight: Hardware Investments for AI Acceleration

LLNL is investing in computing hardware upgrades to accelerate AI research and applications. Cerebras Systems’ new system has been integrated into the Lassen supercomputer; its wafer-sized computer chip is designed specifically for machine learning and AI applications. With this system, researchers are investigating novel approaches to predictive modeling for the NNSA. LLNL computer scientist Ian Karlin notes, “For many of our cognitive simulation workloads, we will run the machine learning parts on the Cerebras hardware, while the HPC simulation piece continues on the GPUs, improving time-to-solution.”

SambaNova’s state-of-the-art AI accelerator is installed in the Corona supercomputing cluster, allowing researchers to more effectively combine AI and machine learning with complex scientific workloads and thus improve overall speed, performance, and productivity. “This integration enables low-latency communication between the two devices allowing them to operate in tandem with greater overall efficiency,” explains Karlin. “Scientific simulations running on Corona will feed data as they run into the SambaNova DataScale system to train new machine learning models based on their results.”

Mentoring the Next Generation

After a successful virtual session in 2020, the Data Science Summer Institute (DSSI) is already accepting applications through the end of January for the class of 2021. Director Goran Konjevod states, “While we are hoping for an end to the pandemic, our current plan is to run the DSSI as a virtual program again in 2021. Having gone through the experience once, we can better understand the drawbacks of the virtual setting and make improvements in an attempt to match the full onsite experience from previous years.”

In other mentoring news, the first-ever virtual Girls Who Code program, a full-scale collaboration between LLNL, the Livermore Lab Foundation, and the Livermore Valley Joint Unified School District, brought girls from several Bay Area schools together with LLNL mentors for two hours after school each day from October 5 to 8 to learn about supercomputer hardware, parallel computing, and scientific applications and visualization.

Recent Research

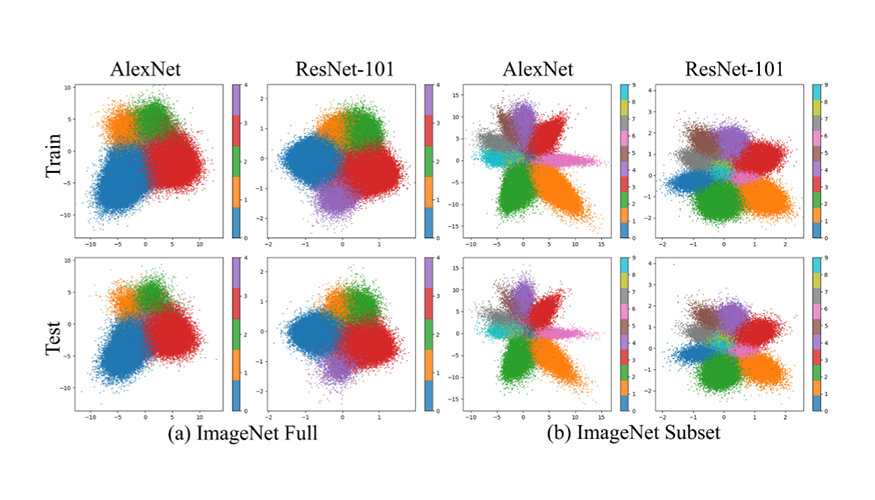

Uncovering interpretable relationships in high-dimensional scientific data through function preserving projections – Shusen Liu, Rushil Anirudh, Jayaraman Thiagarajan, Peer-Timo Bremer. This paper proposes function-preserving projections (FPP), which construct 2D linear embeddings optimized to reveal interpretable yet potentially nonlinear patterns between the domain and the range of a high-dimensional function. Applying FPP to real-world datasets yields fundamentally new insights into high-dimensional relationships in extremely large datasets that existing dimension reduction methods could not process. The figure above compares projections of image feature representations from different neural network architectures.

Designing accurate emulators for scientific processes using calibration-driven deep models – Jayaraman Thiagarajan, Bindya Venkatesh, Rushil Anirudh, Peer-Timo Bremer, Jim Gaffney, Gemma Anderson, Brian Spears

Multi-frequency analysis of simulated versus observed variability in tropospheric temperatures – Giuliana Pallotta, Benjamin Santer

Processed functional connectomes for the HCP young adult: Data release and assessment on brain fingerprints – Uttara Tipnis, Kausar Abbas, Elizabeth Tran, Enrico Amico, Li Shen, Alan Kaplan, Joaquin Goni

COVID-19 research funded by the CARES Act includes projects conducted by LLNL scientists Rushil Anirudh, Jayaraman Thiagarajan, Peer-Timo Bremer, Timothy Germann, Sara Del Valle, and Frederick Streitz.

Virtual Seminar

In the DSI’s November seminar, Dr. Daniel Whiteson from UC Irvine presented “Learning Particle Physics from Machines.” His talk explained why new machine learning techniques are particularly well suited to particle physics, presented selected results that demonstrated their new capabilities, and introduced an approach for translating these learned strategies into human understanding. Dr. Whiteson is co-host of the Daniel & Jorge Explain the Universe podcast and holds a PhD in Physics from UC Berkeley.

Supercomputing Conference

At SC20, LLNL researchers led dozens of tutorials and workshops (including four machine learning workshops); presented more than a dozen posters and papers; and contributed to panels, state-of-the-practice talks, and “birds of a feather” discussions. Topics ranged from memory-centric HPC, user support tools, and software correctness to machine learning, cloud workflows, and programming and performance visualization tools. The conference also recognized LLNL as having more supercomputers on the Top500 list than any other institution, including 4 in the top 100.