July 15, 2020

Spotlight: Mentoring the Next Generation

For the second year in a row, the DSI teamed up with the University of California at Merced to offer a two-week Data Science Challenge at the beginning of June. The intensive program provided mentors, assignments, virtual tours, and seminars. Under the direction of LLNL’s Marisol Gamboa and UC Merced’s Suzanne Sindi, 21 students worked from their homes through video conferencing and chat programs to develop machine learning (ML) models capable of differentiating potentially explosive materials from other types of molecules.

The UC Merced students were divided into five teams, each led by a PhD candidate, and presented their ML approaches and results on the final day of the Challenge. Each team developed a classifier for potential explosive materials given only their molecular structures. The algorithms were trained on a dataset of 400 known explosive compounds and about 5,000 pharmaceutical drug compounds.

The 12-week Data Science Summer Institute (DSSI) program is officially under way with 27 students from all over the U.S. and 2 PhD students from Japan’s Science and Technology Collaboration. In a related Carnegie Live video, Seiichi Shimasaki, Science Counselor for the Japanese embassy in the U.S., described a multiyear science research program (nicknamed the “Moonshot”) to develop new technologies that help solve some of society’s most pressing challenges. He explained that the Government of Japan was looking for a data science program to mentor young scientists, which led to the students’ participation in this year’s DSSI session. He referred to the DSSI as “a unique and prominent program,” noting the importance of collaborating with U.S. national laboratories and their expertise. Additionally, Patricia Falcone, LLNL’s Deputy Director for Science and Technology, spoke about strategic partnerships between international organizations with “shared values that are allied and purposeful.”

Spotlight: Two ICML Acceptances

The International Conference on Machine Learning, better known as ICML, is recognized as one of the world’s top ML conferences. Acceptance of research findings at ICML is a key differentiator for both scientists and their affiliated organizations, demonstrating high-impact leadership in advancing the fields of ML and artificial intelligence (AI). LLNL had two papers accepted at ICML 2020, which will be held virtually this year from July 12 to 18.

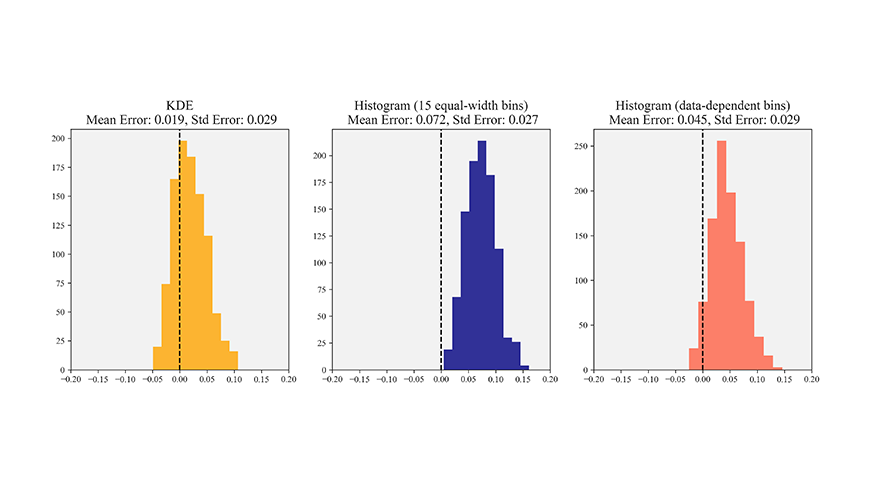

- Mix-n-match: ensemble and compositional methods for uncertainty calibration in deep learning – Jize Zhang, Bhavya Kailkhura, and Yong Han

- Adversarial mutual information for text generation – Bhavya Kailkhura with co-authors from four organizations

Recent Research

Surrogate models supported by neural networks can perform as well as, and in some ways better than, computationally expensive simulators and could lead to new insights in complicated physics problems such as inertial confinement fusion (ICF). In a paper published in the Proceedings of the National Academy of Sciences, LLNL researchers describe the development of a deep learning-driven surrogate model incorporating a multimodal neural network capable of quickly and accurately emulating complex scientific processes, including the high-energy-density physics involved in ICF.

LLNL scientists and engineers have made significant progress in the development of a 3D “brain-on-a-chip” device capable of recording the neural activity of human brain cell cultures grown outside the body. The researchers have developed a way to computationally model the activity and structures of neuronal communities as they grow and mature on the device over time, a development that could aid scientists in finding countermeasures to toxins or disorders affecting the brain, such as epilepsy or traumatic brain injury. The findings were published in PLOS Computational Biology.

Reduced-order models have been successfully developed for nonlinear dynamical systems. To achieve a considerable speed-up, a hyper-reduction step is needed to reduce the computational complexity due to nonlinear terms. An LLNL-led team has developed the solution-based nonlinear subspace (SNS) method, which provides a more efficient strategy than traditional model order reduction techniques. The work appears in the SIAM Journal on Scientific Computing.

Physicist Brian Spears discussed “AI Hardware for Future HPC Systems” on The Next Platform podcast. Spears, who leads LLNL’s cognitive simulation initiative, discussed how to evaluate AI processors and how they will mesh with HPC systems like the Lab’s Lassen supercomputer and future procurements.

Virtual Seminar

The DSI Seminar Series continued in June with a virtual seminar—“Dungeons and Discourse: Using Computational Storytelling to Look at Natural Language Use”—presented by Lara Martin, a Human-Centered Computing PhD Candidate at Georgia Tech. Her research looks at various techniques of automated story generation, focusing on the perceived creativity of the generated stories.

Automated story generation is an area of AI research that aims to create agents that tell “good” stories. As Martin explained, previous story-generation systems use planning to create new stories, but these systems require a vast amount of knowledge engineering. She defined a creative product as one that is both novel and useful and showed that, in an improvisational storytelling system, a model that is jointly probabilistic and causal can generate stories that readers perceive as more creative than those from solely probabilistic or solely causal models.

The July seminar will feature Abel Rodriguez from UC Santa Cruz, who will speak about ethical considerations in the era of big data.

COVID-19 Resources

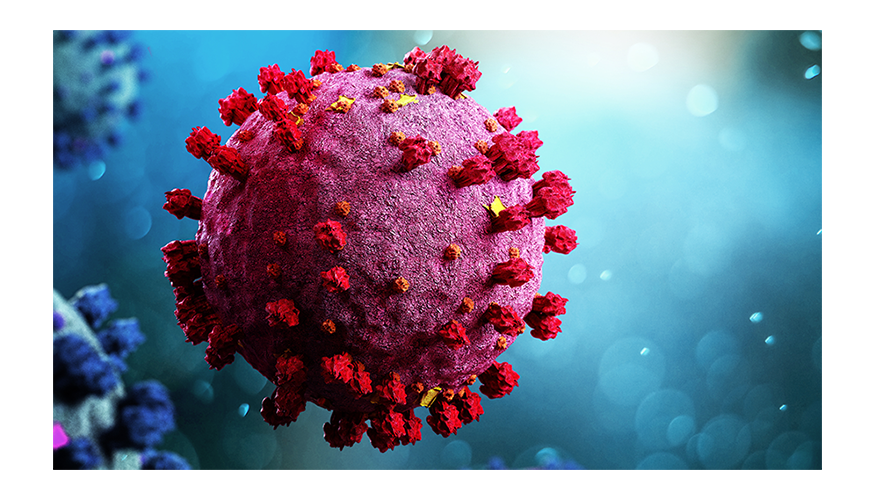

- A searchable data portal shares COVID-19 research with scientists worldwide and the general public.

- An ongoing AI-driven computational design platform optimizes binding of antibodies to the COVID-19 virus.

- LLNL has released a video explaining how supercomputing can fight the COVID-19 pandemic.

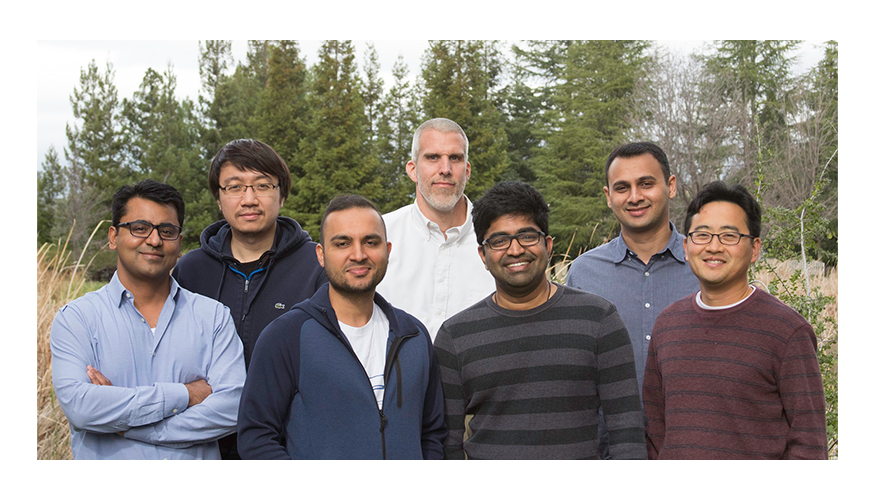

Meet a Research Team

One of the Lab’s strengths is enabling domain scientists to work alongside data-centric researchers and other experts. Those seeking help with ML models or high-dimensional data visualizations need look no further than a certain cluster of offices within LLNL’s Center for Applied Scientific Computing. Pursuing both real-world applications and theoretical foundations within data science disciplines, these experts are, from left: Harsh Bhatia, Shusen Liu, Bhavya Kailkhura, Peer-Timo Bremer, Jayaraman Thiagarajan, Rushil Anirudh, and Hyojin Kim.