Machine learning tool fills in the blanks for satellite light curves

Feb. 13, 2024 -

When viewed from Earth, objects in space are seen at a specific brightness, called apparent magnitude. Over time, ground-based telescopes can track a specific object’s change in brightness. This time-dependent magnitude variation is known as an object’s light curve, and can allow astronomers to infer the object’s size, shape, material, location, and more. Monitoring the light curve of...

LLNL’s Kailkhura elevated to IEEE senior member

Nov. 8, 2023 -

IEEE, the world’s largest technical professional organization, has elevated LLNL research staff member Bhavya Kailkhura to the grade of senior member within the organization. IEEE has more than 427,000 members in more than 190 countries, including engineers, scientists and allied professionals in the electrical and computer sciences, engineering and related disciplines. Just 10% of IEEE’s...

Explainable artificial intelligence can enhance scientific workflows

July 25, 2023 -

As ML and AI tools become more widespread, a team of researchers in LLNL’s Computing and Physical and Life Sciences directorates is trying to provide a reasonable starting place for scientists who want to apply ML/AI but don’t have the appropriate background. The team’s work grew out of a Laboratory Directed Research and Development project on feedstock materials optimization, which led to...

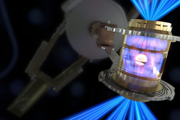

High-performance computing, AI and cognitive simulation helped LLNL conquer fusion ignition

June 21, 2023 -

For hundreds of LLNL scientists on the design, experimental, and modeling and simulation teams behind inertial confinement fusion (ICF) experiments at the National Ignition Facility, the results of the now-famous Dec. 5, 2022, ignition shot didn’t come as a complete surprise. The “crystal ball” that gave them increased pre-shot confidence in a breakthrough involved a combination of detailed...

Visionary report unveils ambitious roadmap to harness the power of AI in scientific discovery

June 12, 2023 -

A new report, the product of a series of workshops held in 2022 under the guidance of the U.S. Department of Energy’s Office of Science and the National Nuclear Security Administration, lays out a comprehensive vision for the Office of Science and NNSA to expand their work in scientific use of AI by building on existing strengths in world-leading high performance computing systems and data...

Consulting service infuses Lab projects with data science expertise

June 5, 2023 -

A key advantage of LLNL’s culture of multidisciplinary teamwork is that domain scientists don’t need to be experts in everything. Physicists, chemists, biologists, materials engineers, climate scientists, computer scientists, and other researchers regularly work alongside specialists in other fields to tackle challenging problems. The rise of Big Data across the Lab has led to a demand for...

Data science meets fusion (VIDEO)

May 30, 2023 -

LLNL’s historic fusion ignition achievement on December 5, 2022, was the first experiment to ever achieve net energy gain from nuclear fusion. However, the experiment’s result was not actually that surprising. A team leveraging data science techniques developed and used a landmark system for teaching artificial intelligence (AI) to incorporate and better account for different variables and...

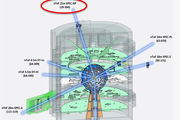

LLNL and SambaNova Systems announce additional AI hardware to support Lab’s cognitive simulation efforts

May 23, 2023 -

LLNL and SambaNova Systems have announced the addition of a spatial data flow accelerator into the Livermore Computing Center, part of an effort to upgrade the Lab’s CogSim program. LLNL will integrate the new hardware to further investigate CogSim approaches combining AI with high-performance computing—and how deep neural network hardware architectures can accelerate traditional physics...

Cognitive simulation supercharges scientific research

Jan. 10, 2023 -

Computer modeling has been essential to scientific research for more than half a century—since the advent of computers sufficiently powerful to handle modeling’s computational load. Models simulate natural phenomena to aid scientists in understanding their underlying principles. Yet, while the most complex models running on supercomputers may contain millions of lines of code and generate...

Supercomputing’s critical role in the fusion ignition breakthrough

Dec. 21, 2022 -

On December 5th, the research team at LLNL's National Ignition Facility (NIF) achieved a historic win in energy science: for the first time ever, more energy was produced by an artificial fusion reaction than was consumed—3.15 megajoules produced versus 2.05 megajoules in laser energy to cause the reaction. High-performance computing was key to this breakthrough (called ignition), and HPCwire...

LLNL staff returns to Texas-sized Supercomputing Conference

Nov. 23, 2022 -

The 2022 International Conference for High Performance Computing, Networking, Storage, and Analysis (SC22) returned to Dallas as a large contingent of LLNL staff participated in sessions, panels, paper presentations, and workshops centered around HPC. The world’s largest conference of its kind celebrated its highest in-person attendance since the start of the COVID-19 pandemic, with about 11...

LLNL researchers win HPCwire award for applying cognitive simulation to ICF

Nov. 17, 2022 -

The high performance computing publication HPCwire announced LLNL as the winner of its Editor’s Choice award for Best Use of HPC in Energy for applying cognitive simulation (CogSim) methods to inertial confinement fusion (ICF) research. The award was presented at the largest supercomputing conference in the world: the 2022 International Conference for High Performance Computing, Networking...

Understanding the universe with applied statistics (VIDEO)

Nov. 17, 2022 -

In a new video posted to the Lab’s YouTube channel, statistician Amanda Muyskens describes MuyGPs, her team’s innovative and computationally efficient Gaussian Process hyperparameter estimation method for large data. The method has been applied to space-based image classification and released for open-source use in the Python package MuyGPyS. MuyGPs will help astronomers and astrophysicists...

Scientific discovery for stockpile stewardship

Sept. 27, 2022 -

Among the significant scientific discoveries that have helped ensure the reliability of the nation’s nuclear stockpile is the advancement of cognitive simulation. In cognitive simulation, researchers are developing AI/ML algorithms and software to retrain part of this model on the experimental data itself. The result is a model that “knows the best of both worlds,” says Brian Spears, a...

Assured and robust…or bust

June 30, 2022 -

The consequences of a machine learning (ML) error that presents irrelevant advertisements to a group of social media users may seem relatively minor. However, this opacity, combined with the fact that ML systems are nascent and imperfect, makes trusting their accuracy difficult in mission-critical situations, such as recognizing life-or-death risks to military personnel or advancing materials...

CASC team wins best paper at visualization symposium

May 25, 2022 -

A research team from LLNL’s Center for Applied Scientific Computing won Best Paper at the 15th IEEE Pacific Visualization Symposium (PacificVis), which was held virtually on April 11–14. Computer scientists Harsh Bhatia, Peer-Timo Bremer, and Peter Lindstrom collaborated with University of Utah colleagues Duong Hoang, Nate Morrical, and Valerio Pascucci on “AMM: Adaptive Multilinear Meshes.”...

Unprecedented multiscale model of protein behavior linked to cancer-causing mutations

Jan. 10, 2022 -

LLNL researchers and a multi-institutional team have developed a highly detailed, machine learning–backed multiscale model revealing the importance of lipids to the signaling dynamics of RAS, a family of proteins whose mutations are linked to numerous cancers. Published in the Proceedings of the National Academy of Sciences, the paper details the methodology behind the Multiscale Machine...

LLNL establishes AI Innovation Incubator to advance artificial intelligence for applied science

Dec. 20, 2021 -

LLNL has established the AI Innovation Incubator (AI3), a collaborative hub aimed at uniting experts in artificial intelligence (AI) from LLNL, industry and academia to advance AI for large-scale scientific and commercial applications. LLNL has entered into new memoranda of understanding with Google, IBM and NVIDIA, with plans to use the incubator to facilitate discussions and form future...

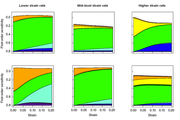

Building confidence in materials modeling using statistics

Oct. 31, 2021 -

LLNL statisticians, computational modelers, and materials scientists have been developing a statistical framework for researchers to better assess the relationship between model uncertainties and experimental data. The Livermore-developed statistical framework is intended to assess sources of uncertainty in strength model input, recommend new experiments to reduce those sources of uncertainty...

Summer scholar develops data-driven approaches to key NIF diagnostics

Oct. 20, 2021 -

Su-Ann Chong's summer project, “A Data-Driven Approach Towards NIF Neutron Time-of-Flight Diagnostics Using Machine Learning and Bayesian Inference,” is aimed at presenting a different take on nToF diagnostics. Neutron time-of-flight diagnostics are an essential tool to diagnose the implosion dynamics of inertial confinement fusion experiments at NIF, the world’s largest and most energetic...