Igniting scientific discovery with AI and supercomputing (VIDEO)

April 15, 2024 -

LLNL’s fusion ignition breakthrough, more than 60 years in the making, was enabled by a combination of traditional fusion target design methods, high-performance computing (HPC), and AI techniques. Ignition marks a significant milestone in fusion energy research and was facilitated in part by the precision simulations and rapid experimental data analysis only possible through...

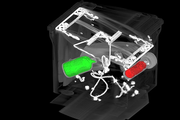

For better CT images, new deep learning tool helps fill in the blanks

Nov. 17, 2023 -

At a hospital, an airport, or even an assembly line, computed tomography (CT) allows us to investigate the otherwise inaccessible interiors of objects without laying a finger on them. To perform CT, x-rays first shine through an object, interacting with the different materials and structures inside. Then, the x-rays emerge on the other side, casting a projection of their interactions onto a...

Lab partners with new Space Force Lab

Nov. 14, 2023 -

LLNL subject matter experts have been selected by the U.S. Space Force to help stand up its newest Tools, Applications, and Processing (TAP) laboratory dedicated to advancing military space domain awareness (SDA). The Livermore team attended the October 26 kickoff in Colorado Springs of the SDA TAP lab’s Project Apollo technology accelerator, designed with an open framework to support and...

LLNL’s Kailkhura elevated to IEEE senior member

Nov. 8, 2023 -

IEEE, the world’s largest technical professional organization, has elevated LLNL research staff member Bhavya Kailkhura to the grade of senior member within the organization. IEEE has more than 427,000 members in more than 190 countries, including engineers, scientists, and allied professionals in the electrical and computer sciences, engineering, and related disciplines. Just 10% of IEEE’s...

LLNL, University of California partner for AI-driven additive manufacturing research

Sept. 27, 2023 -

Grace Gu, a faculty member in mechanical engineering at UC Berkeley, has been selected as the inaugural recipient of the LLNL Early Career UC Faculty Initiative. The initiative is a joint endeavor between LLNL’s Strategic Deterrence Principal Directorate and UC national laboratories at the University of California Office of the President, seeking to foster long-term academic partnerships and...

Explainable artificial intelligence can enhance scientific workflows

July 25, 2023 -

As ML and AI tools become more widespread, a team of researchers in LLNL’s Computing and Physical and Life Sciences directorates is working to provide a practical starting point for scientists who want to apply ML/AI but lack the appropriate background. The team’s work grew out of a Laboratory Directed Research and Development project on feedstock materials optimization, which led to...

High-performance computing, AI and cognitive simulation helped LLNL conquer fusion ignition

June 21, 2023 -

For hundreds of LLNL scientists on the design, experimental, and modeling and simulation teams behind inertial confinement fusion (ICF) experiments at the National Ignition Facility, the results of the now-famous Dec. 5, 2022, ignition shot didn’t come as a complete surprise. The “crystal ball” that gave them increased pre-shot confidence in a breakthrough involved a combination of detailed...

Visionary report unveils ambitious roadmap to harness the power of AI in scientific discovery

June 12, 2023 -

A new report, the product of a series of workshops held in 2022 under the guidance of the U.S. Department of Energy’s Office of Science and the National Nuclear Security Administration, lays out a comprehensive vision for the Office of Science and NNSA to expand their work in scientific use of AI by building on existing strengths in world-leading high performance computing systems and data...

Data science meets fusion (VIDEO)

May 30, 2023 -

LLNL’s historic fusion ignition experiment on December 5, 2022, was the first ever to achieve net energy gain from nuclear fusion. However, the result was not entirely surprising. A team leveraging data science techniques developed and used a landmark system for teaching artificial intelligence (AI) to incorporate and better account for different variables and...

LLNL and SambaNova Systems announce additional AI hardware to support Lab’s cognitive simulation efforts

May 23, 2023 -

LLNL and SambaNova Systems have announced the addition of a spatial data flow accelerator into the Livermore Computing Center, part of an effort to upgrade the Lab’s CogSim program. LLNL will integrate the new hardware to further investigate CogSim approaches combining AI with high-performance computing—and how deep neural network hardware architectures can accelerate traditional physics...

Patent applies machine learning to industrial control systems

May 8, 2023 -

An industrial control system (ICS) is an automated network of devices that make up a complex industrial process. For example, a large-scale electrical grid may contain thousands of instruments, sensors, and controls that transfer and distribute power, along with computing systems that capture data transmitted across these devices. Monitoring the ICS network for new device connections, device...

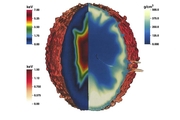

Computing codes, simulations helped make ignition possible

April 6, 2023 -

Harkening back to the genesis of LLNL’s inertial confinement fusion (ICF) program, codes have played an essential role in simulating the complex physical processes that take place in an ICF target and the facets of each experiment that must be nearly perfect. Many of these processes are too complicated, expensive, or even impossible to predict through experiments alone. With only a few...

Skywing: Open-source software aids collaborative autonomy applications

Jan. 25, 2023 -

Skywing, new software developed at LLNL, provides domain scientists working to protect the nation’s critical infrastructure with a high-reliability, real-time software platform for collaborative autonomy applications. Modern U.S. critical infrastructure, from the electrical grid that sends power to homes to the pipelines that deliver water and natural gas and the railways...

LLNL, University of California initiative fosters academic partnership

Jan. 19, 2023 -

A new joint initiative between the LLNL Weapons and Complex Integration (WCI) Directorate and the University of California (UC) is aimed at developing next-generation academic leadership with strong and enduring national laboratory connections. The LLNL Early Career UC Faculty Initiative is accepting proposals from untenured, tenure-track faculty at one of 10 UC campuses, soliciting...

Cognitive simulation supercharges scientific research

Jan. 10, 2023 -

Computer modeling has been essential to scientific research for more than half a century—since the advent of computers sufficiently powerful to handle modeling’s computational load. Models simulate natural phenomena to aid scientists in understanding their underlying principles. Yet, while the most complex models running on supercomputers may contain millions of lines of code and generate...

Supercomputing’s critical role in the fusion ignition breakthrough

Dec. 21, 2022 -

On December 5th, the research team at LLNL's National Ignition Facility (NIF) achieved a historic win in energy science: for the first time ever, more energy was produced by an artificial fusion reaction than was consumed—3.15 megajoules produced versus 2.05 megajoules in laser energy to cause the reaction. High-performance computing was key to this breakthrough (called ignition), and HPCwire...

National Ignition Facility achieves fusion ignition

Dec. 13, 2022 -

The U.S. Department of Energy (DOE) and DOE’s National Nuclear Security Administration (NNSA) today announced the achievement of fusion ignition at LLNL—a major scientific breakthrough decades in the making that will pave the way for advancements in national defense and the future of clean power. On Dec. 5, a team at LLNL’s National Ignition Facility (NIF) conducted the first controlled...

LLNL staff returns to Texas-sized Supercomputing Conference

Nov. 23, 2022 -

The 2022 International Conference for High Performance Computing, Networking, Storage, and Analysis (SC22) returned to Dallas as a large contingent of LLNL staff participated in sessions, panels, paper presentations, and workshops centered around HPC. The world’s largest conference of its kind celebrated its highest in-person attendance since the start of the COVID-19 pandemic, with about 11...

LLNL researchers win HPCwire award for applying cognitive simulation to ICF

Nov. 17, 2022 -

The high performance computing publication HPCwire announced LLNL as the winner of its Editor’s Choice award for Best Use of HPC in Energy for applying cognitive simulation (CogSim) methods to inertial confinement fusion (ICF) research. The award was presented at the largest supercomputing conference in the world: the 2022 International Conference for High Performance Computing, Networking...

Scientific discovery for stockpile stewardship

Sept. 27, 2022 -

Among the significant scientific discoveries that have helped ensure the reliability of the nation’s nuclear stockpile is the advancement of cognitive simulation. In cognitive simulation, researchers are developing AI/ML algorithms and software to retrain part of this model on the experimental data itself. The result is a model that “knows the best of both worlds,” says Brian Spears, a...